
GSoC '25 Week 02 Report - Mebin Thattil + Added Author Info #204


Merged: pikurasa merged 5 commits into sugarlabs:main from mebinthattil:blogs/update_with_authors on Jun 16, 2025


Commits:

- b4ac851 Added an image that shows FT model chat responses (mebinthattil)
- d539139 GSoC Week 2 report by Mebin Thattil (mebinthattil)
- 9a84ac1 Added mebin-thattil to authors page (mebinthattil)
- 5f98066 Updated the blogs of previous weeks to include the reference to the a… (mebinthattil)
- c1e3df0 Merge branch 'main' of github.com:sugarlabs/www-v2 into blogs/update_… (mebinthattil)


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,27 @@ | ||

| --- | ||

| name: "Mebin Thattil" | ||

| slug: "mebin-thattil" | ||

| title: "GSoC'25 Contributor" | ||

| organization: "SugarLabs" | ||

| description: "GSoC'25 Contributor at SugarLabs" | ||

| avatar: "https://mebin.shop/mebin-380.png" | ||

| --- | ||

|

|

||

| <!--markdownlint-disable--> | ||

|

|

||

| # About Mebin Thattil | ||

|

|

||

| Hey, I'm Mebin 👋🏻! I'm a first year student at PES University, Bangalore, India, currently pursuing a BTech in Computer Science. I’ve had a deep passion for tech ever since I was 10, when I first learned in a CS class that you could write a couple of lines of code and build a (barely functional) website. That simple idea sparked something in me, and over the years, my love for computer science has only grown—especially while building a bunch of cool things along the way. | ||

|

|

||

| I'm going to spend my summer working on the [Speak Activity](https://github.com/sugarlabs/speak). I will be refactoring the chatbot in the Speak activity to use generative AI. | ||

|

|

||

| About a month ago, I launched my personal portfolio website: [mebin.in](https://mebin.in/). It runs on an 8-year-old Raspberry Pi sitting at home 🤩, so apologies in advance if the site is occasionally slow or down (power cuts are a real pain). I occasionally write blog posts there. | ||

|

|

||

| I'm also building a Bluesky client in the Nim programming language. I'm a strong advocate for education in technology. In the past, I built a web application aimed at connecting students in rural areas with those in urban areas to help foster a free and open peer-to-peer learning ecosystem. | ||

|

|

||

| ## Connect with Me | ||

|

|

||

| - **GitHub**: [@mebinthattil](https://github.com/mebinthattil) | ||

| - **Website**: [mebin.in](https://mebin.in/) | ||

| - **Email**: [[email protected]](mailto:[email protected]) | ||

| - **LinkedIn**: [Mebin Thattil](https://www.linkedin.com/in/mebin-thattil/) |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -4,8 +4,7 @@ excerpt: "Experimenting, Benchmarking and Researching" | |

| category: "DEVELOPER NEWS" | ||

| date: "2025-06-06" | ||

| slug: "2025-06-06-gsoc-25-mebinthattil-week1" | ||

| author: "Mebin J Thattil" | ||

| description: "GSoC'25 Contributor at SugarLabs - Speak Activity" | ||

| author: "@/constants/MarkdownFiles/authors/mebin-thattil.md" | ||

| tags: "gsoc25,sugarlabs,week01,mebinthattil,speak_activity" | ||

| image: "assets/Images/GSOCxSpeak.png" | ||

| --- | ||

|

|

@@ -84,11 +83,3 @@ Thank you to my mentors, the Sugar Labs community, and fellow GSoC contributors | |

|

|

||

| --- | ||

|

|

||

| ## Connect with Me | ||

|

|

||

| - Website: [mebin.in](https://mebin.in/) | ||

| - GitHub: [@mebinthattil](https://github.com/mebinthattil) | ||

| - Gmail: [[email protected]](mailto:[email protected]) | ||

| - LinkedIn: [Mebin Thattil](https://www.linkedin.com/in/mebin-thattil/) | ||

|

|

||

| --- | ||

131 changes: 131 additions & 0 deletions


src/constants/MarkdownFiles/posts/2025-06-14-gsoc-25-MebinThattil-week02.md


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,131 @@ | ||

| --- | ||

| title: "GSoC ’25 Week 02 Update by Mebin J Thattil" | ||

| excerpt: "Fine-Tuning, Deploying, Testing & Evaluations" | ||

| category: "DEVELOPER NEWS" | ||

| date: "2025-06-14" | ||

| slug: "2025-06-14-gsoc-25-mebinthattil-week2" | ||

| author: "@/constants/MarkdownFiles/authors/mebin-thattil.md" | ||

| tags: "gsoc25,sugarlabs,week02,mebinthattil,speak_activity" | ||

| image: "assets/Images/GSOCxSpeak.png" | ||

| --- | ||

|

|

||

| <!-- markdownlint-disable --> | ||

|

|

||

| # Week 02 Progress Report by Mebin J Thattil | ||

|

|

||

| **Project:** [Speak Activity](https://github.com/sugarlabs/speak) | ||

| **Mentors:** [Chihurumnaya Ibiam](https://github.com/chimosky), [Kshitij Shah](https://github.com/kshitijdshah99) | ||

| **Assisting Mentors:** [Walter Bender](https://github.com/walterbender), [Devin Ulibarri](https://github.com/pikurasa) | ||

| **Reporting Period:** 2025-06-08 - 2025-06-14 | ||

|

|

||

| --- | ||

|

|

||

| ## Goals for This Week | ||

|

|

||

| - **Goal 1:** Set up AWS for Fine-Tuning. | ||

| - **Goal 2:** Fine-Tune a small model on a small dataset. | ||

| - **Goal 3:** Deploy the model on AWS and create an API endpoint. | ||

| - **Goal 4:** Test the endpoint using a Python script. | ||

| - **Goal 5:** Evaluate the model responses and think about next steps. | ||

|

|

||

| --- | ||

|

|

||

| ## This Week’s Achievements | ||

|

|

||

| 1. **Set up AWS for Fine-Tuning** | ||

| - Set up AWS SageMaker. | ||

| - Provisioned GPUs on AWS SageMaker to fine-tune the Llama3-1B foundation model. | ||

|

|

||

| 2. **Dataset & Cleaning** | ||

| - Used an open dataset of conversations between a student and a teacher. | ||

| - Cleaned the dataset and wrote a small script to convert it into the format that Llama expects for fine-tuning. | ||

| - The dataset along with the script is available [here](https://github.com/mebinthattil/Education-Dialogue-Dataset). | ||
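A minimal sketch of the kind of conversion described in this step. It assumes the raw data is a list of conversations in which each turn has a hypothetical `speaker` field (`student` or `teacher`) and a `text` field, and that the fine-tuning container expects JSON Lines with one `dialog` list of `role`/`content` messages per line. Both the input field names and the output schema are assumptions for illustration; the actual script is in the repository linked above.

```python
import json

# Hypothetical mapping from dataset speakers to chat roles.
ROLE_MAP = {"student": "user", "teacher": "assistant"}


def to_dialog(conversation):
    """Convert one conversation (a list of {'speaker', 'text'} turns) to a chat record."""
    dialog = []
    for turn in conversation:
        text = turn["text"].strip()
        if not text:
            continue  # drop empty turns as a basic cleaning step
        role = ROLE_MAP[turn["speaker"].lower()]
        if dialog and dialog[-1]["role"] == role:
            # merge consecutive turns by the same speaker so roles alternate
            dialog[-1]["content"] += " " + text
        else:
            dialog.append({"role": role, "content": text})
    return {"dialog": dialog}


def to_jsonl(conversations):
    """Serialize conversations as JSON Lines, one chat record per line."""
    return "\n".join(json.dumps(to_dialog(c)) for c in conversations)


sample = [[
    {"speaker": "Student", "text": "Why is the sky blue?"},
    {"speaker": "Teacher", "text": " Sunlight scatters in the air. "},
    {"speaker": "Teacher", "text": "Blue light scatters the most."},
]]
print(to_jsonl(sample))
```

Merging consecutive turns from the same speaker keeps the roles strictly alternating, which chat templates generally expect.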

|

|

||

| 3. **Fine-tuning** | ||

| - Fine-tuned the model on a small subset of the dataset, just to see how it performs and to get familiar with AWS SageMaker. | ||

| - The training job ran on a `ml.g5.2xlarge` instance. | ||

| - The hyperparameters were chosen mainly to reduce the memory footprint and to test the pipeline. I'm listing them below as documentation for future fine-tuning runs. | ||

|

|

||

| **Hyperparameters**: | ||

|

|

||

| | Name | Value | | ||

| |----------------------------------|----------------------------------------------------| | ||

| | add_input_output_demarcation_key | True | | ||

| | chat_dataset | True | | ||

| | chat_template | Llama3.1 | | ||

| | enable_fsdp | False | | ||

| | epoch | 5 | | ||

| | instruction_tuned | False | | ||

| | int8_quantization | True | | ||

| | learning_rate | 0.0001 | | ||

| | lora_alpha | 8 | | ||

| | lora_dropout | 0.08 | | ||

| | lora_r | 2 | | ||

| | max_input_length | -1 | | ||

| | max_train_samples | -1 | | ||

| | max_val_samples | -1 | | ||

| | per_device_eval_batch_size | 1 | | ||

| | per_device_train_batch_size | 4 | | ||

| | preprocessing_num_workers | None | | ||

| | sagemaker_container_log_level | 20 | | ||

| | sagemaker_job_name | jumpstart-dft-meta-textgeneration-l-20250607-200133| | ||

| | sagemaker_program | transfer_learning.py | | ||

| | sagemaker_region | ap-south-1 | | ||

| | sagemaker_submit_directory | /opt/ml/input/data/code/sourcedir.tar.gz | | ||

| | seed | 10 | | ||

| | target_modules | q_proj,v_proj | | ||

| | train_data_split_seed | 0 | | ||

| | validation_split_ratio | 0.2 | | ||
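To make the LoRA settings in the table concrete: in the standard LoRA formulation, `lora_r = 2` and `lora_alpha = 8` mean the low-rank update is scaled by `lora_alpha / lora_r = 4`, and each adapted weight matrix of shape `d_out x d_in` gains `lora_r * (d_in + d_out)` trainable parameters. The sketch below works through that arithmetic. The projection shapes and the layer count are hypothetical placeholders, not values read from the model; substitute the real ones from the model's config.

```python
# LoRA settings taken from the hyperparameter table above.
LORA_R = 2
LORA_ALPHA = 8
TARGET_MODULES = ("q_proj", "v_proj")

# Hypothetical shapes (d_out, d_in) and layer count, for illustration only.
ASSUMED_SHAPES = {"q_proj": (2048, 2048), "v_proj": (512, 2048)}
ASSUMED_NUM_LAYERS = 16


def lora_scaling(alpha, r):
    """Factor applied to the low-rank update B @ A."""
    return alpha / r


def lora_params(d_out, d_in, r):
    """Trainable parameters added by one adapter: A is (r, d_in), B is (d_out, r)."""
    return r * (d_in + d_out)


per_layer = sum(lora_params(*ASSUMED_SHAPES[m], LORA_R) for m in TARGET_MODULES)
total = per_layer * ASSUMED_NUM_LAYERS

print("scaling:", lora_scaling(LORA_ALPHA, LORA_R))
print("trainable params per layer:", per_layer)
print("trainable params total:", total)
```

Under these assumed shapes, the adapters add only a few hundred thousand trainable parameters, which is consistent with the goal of keeping the memory footprint small.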

|

|

||

| 4. **Saving the model** | ||

| - The safetensors and other model files were saved in an AWS S3 bucket. The URI of the bucket is: `s3://sagemaker-ap-south-1-021891580293/jumpstart-run2/output/model/` | ||

|

|

||

| 5. **Deploying the model** | ||

| - The model was deployed on AWS SageMaker and an API endpoint was created. | ||

|

|

||

| 6. **Testing the model** | ||

| - A Python script was written to test the model using the API endpoint. | ||
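A test script along these lines could look like the sketch below. It uses the `invoke_endpoint` call of the boto3 `sagemaker-runtime` client. The endpoint name is hypothetical, and the request body (`inputs` plus a `parameters` object) is an assumption about what the serving container accepts, so check it against the deployed model before relying on it.

```python
import json

# Hypothetical endpoint name; the region matches the hyperparameter table.
ENDPOINT_NAME = "speak-llama-finetuned"
REGION = "ap-south-1"


def build_payload(prompt, max_new_tokens=128, temperature=0.6):
    """Build a request body; the schema is an assumption about the serving container."""
    return {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "temperature": temperature},
    }


def query_endpoint(prompt):
    """Send one prompt to the endpoint and return the decoded JSON response."""
    import boto3  # imported lazily so the payload helper works without AWS set up

    client = boto3.client("sagemaker-runtime", region_name=REGION)
    response = client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt)),
    )
    return json.loads(response["Body"].read())


if __name__ == "__main__":
    # Printing the payload needs no AWS credentials; call query_endpoint() to hit the endpoint.
    print(json.dumps(build_payload("What is photosynthesis?"), indent=2))
```

Keeping the payload builder separate from the network call makes it easy to inspect or unit-test the request without a live endpoint.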

|

|

||

| 7. **Evaluation** | ||

| - The model responses were evaluated using the same questions as in my earlier [benchmark](https://llm-benchmarking-sugar.streamlit.app/). | ||

|

|

||

|

|

||

| --- | ||

|

|

||

| ## Unexpected Model Output | ||

|

|

||

| - After fine-tuning the model, I noticed that it was producing some unexpected output. I expected the model to behave like a general chatbot, but in a more friendly and teacher-like manner. While the model's responses did sound like a teacher, the model would often try to create an entire chain of conversation, generating the next response from a student's perspective and then proceeding to answer itself. | ||

| - This behaviour was because of the way the dataset was structured. The dataset was essentially a list of back-and-forth conversations between a student and a teacher, so it makes sense that the model would try to create a chain of conversations. But this is not what we need from the model. | ||

| - The next step is to change the structure of the dataset so that the model just answers the question it is asked, while still being conversational and understanding the nuances of a chain of conversations. | ||

| - The temporary fix was to add a stop statement while generating responses and to tweak the system prompt. But again, this is not the right way to go about it. The right way is to change the dataset structure. | ||
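The stop-statement workaround can be illustrated with a small client-side version: cut the generated text at the first marker that shows the model has started writing the student's next turn. This is only a sketch of the idea. The marker strings are hypothetical, and the exact stop condition used in the report is not shown here.

```python
# Hypothetical markers that signal the model has started writing the student's next turn.
STOP_SEQUENCES = ("\nStudent:", "\nUser:")


def truncate_at_stop(text, stop_sequences=STOP_SEQUENCES):
    """Return text cut at the earliest stop sequence, or unchanged if none occurs."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut].rstrip()


raw = "Plants make food from sunlight.\nStudent: How?\nTeacher: Through chlorophyll."
print(truncate_at_stop(raw))
```

This only hides the extra turns after they are generated, which is why it remains a temporary fix rather than a substitute for restructuring the dataset.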

|

|

||

| --- | ||

|

|

||

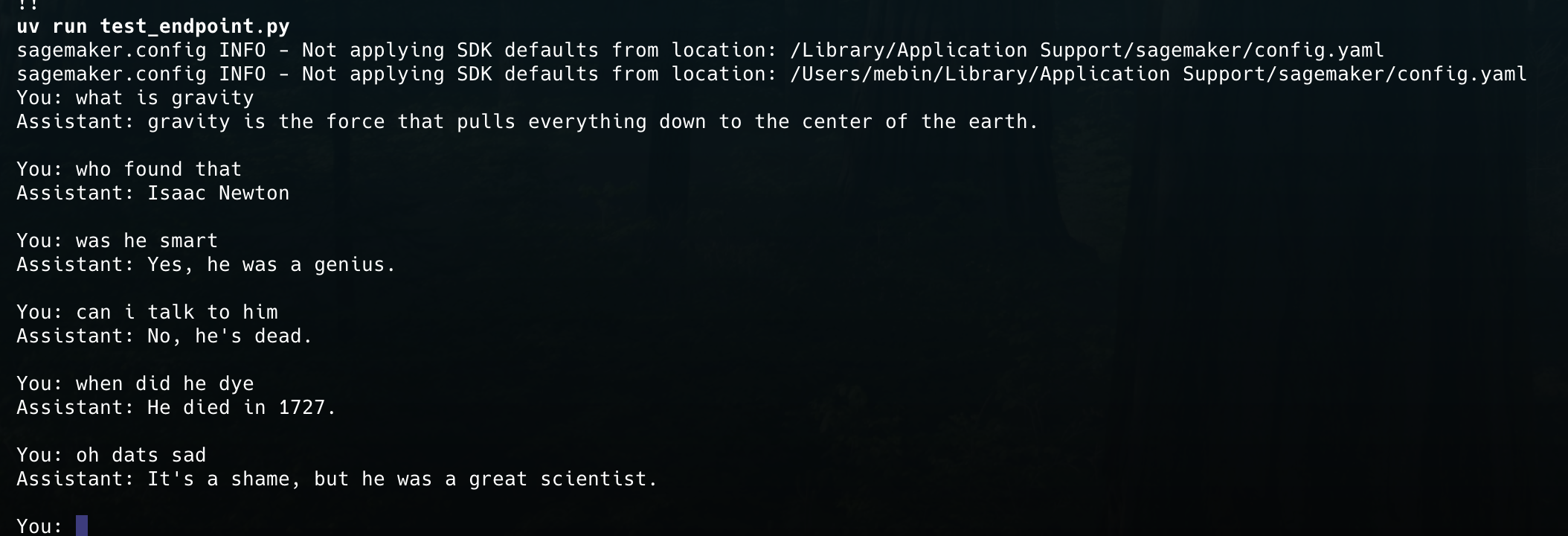

| ## Sample model output with stop condition | ||

|

|

||

|  | ||

|

|

||

| --- | ||

|

|

||

| ## Key Learnings | ||

|

|

||

| - The structure of the dataset needs to change so that the model gives a single conversational answer while still understanding the nuances of a chain of conversations. | ||
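One possible way to restructure such a dataset, sketched here purely as an illustration and not as the approach the project settled on, is to emit one training example per teacher reply, with all earlier turns kept as context. The model then sees conversational history but is only ever trained to produce a single answer. The `context` and `response` field names are hypothetical.

```python
def split_into_examples(dialog):
    """Return one example per assistant turn: prior turns as context, that turn as target."""
    examples = []
    for i, message in enumerate(dialog):
        if message["role"] == "assistant" and i > 0:
            examples.append({"context": dialog[:i], "response": message["content"]})
    return examples


dialog = [
    {"role": "user", "content": "Q1"},
    {"role": "assistant", "content": "A1"},
    {"role": "user", "content": "Q2"},
    {"role": "assistant", "content": "A2"},
]

for example in split_into_examples(dialog):
    print(len(example["context"]), "context turns ->", example["response"])
```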

|

|

||

| --- | ||

|

|

||

| ## Next Week’s Roadmap | ||

|

|

||

| - Re-structure the dataset | ||

| - Re-train and fine-tune the model on the new dataset | ||

| - Deploy, create endpoint and test the model on the new dataset | ||

| - Evaluate the model on the new dataset and add to benchmarks | ||

|

|

||

| --- | ||

|

|

||

| ## Acknowledgments | ||

|

|

||

| Thank you to my mentors, the Sugar Labs community, and fellow GSoC contributors for ongoing support. | ||

|

|

||

| --- | ||

|

|
