-

Notifications

You must be signed in to change notification settings - Fork 12

addressing issue 11, added support for exponential backoff #12

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

base: master

Are you sure you want to change the base?

Conversation

|

Thanks for your contribution @DrClick , we'll take a look at this during the day and/or next week. |

|

Hi @DrClick We were wondering what was the reason behind needing an exponential backoff here? given that only 1 request makes it through every So every Also having an unbounded exponential backoff would make the Unless you have really short configured |

| val retryAt: Long = System.currentTimeMillis() + cb.retryDelay.toMillis | ||

| class BrokenState(cb: CircuitBreaker, retryCount: Int=0) extends State { | ||

| val retryDelay: Long = cb.retryDelay.toMillis * Math.pow(2, | ||

| if (cb.isExponentialBackoff) retryCount else 1).toLong |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

when isExponentialBackoff is false, this will make the cb.retryDelay.toMillis to be multiplied by 2 as Math.pow(2.0, 1.0) == 2.0

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yup, good catch, I changed this. Will address.

|

The reason behind this is when we run 1000s these processes, this allows the failure to be backed off where just the process of trying to allocate the resource causes a load on the resource. For instance Cassandra goes down, You have 1000s of clients connected, everyone is retrying every 2seconds causing massive load on other resources, I can agree on the unbounded I also thnk a more standard implementation adding jitter is probably reasonable. I propose adding the following, jitter to the exponential delay (so not all clients try at the same time) If maximum retry is reached, I wonder what state you would like the CB left in? Also see http://docs.aws.amazon.com/general/latest/gr/api-retries.html |

| @@ -345,6 +349,7 @@ case class CircuitBreakerBuilder( | |||

| name: String, | |||

| failLimit: Int, | |||

| retryDelay: FiniteDuration, | |||

| isExponentialBackoff: Boolean = false, | |||

| isResultFailure: PartialFunction[Any, Boolean] = { case _ => false }, | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should probably add a maxRetryDelay: FiniteDuration that bounds the exponential growth.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Would also be nice if there were a withExponentialBackoff(maxRetryDelay: FiniteDuration): CircuitBreakerBuilder convenience method on the builder

|

I think if the max retry is reached, you would just continue using that max value rather than continually increasing it. Having a random jitter added/removed to the calculated retry sounds like a good idea to avoid thundering herds |

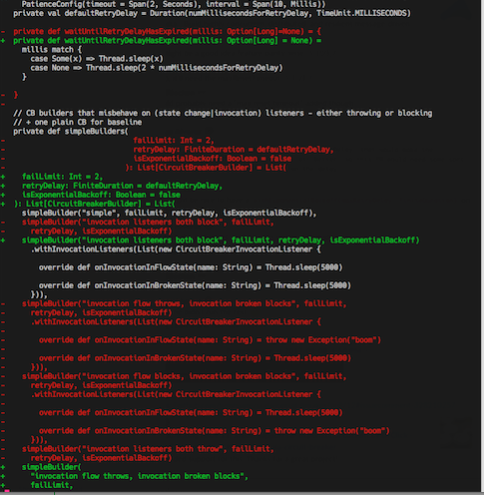

| private val defaultRetryDelay = Duration(numMillisecondsForRetryDelay, TimeUnit.MILLISECONDS) | ||

|

|

||

| private def waitUntilRetryDelayHasExpired() = Thread.sleep(2 * numMillisecondsForRetryDelay) | ||

| private def waitUntilRetryDelayHasExpired(millis: Option[Long]=None) = { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

🤔 why scalafmt didn't fix this...

|

@DrClick what IDE are you using? as I run |

|

@diego-alvarez-hs I am using intellij. I noticed there were a few formatting differences. I am curious, I was consider refactoring the other tests as I did mine, wapping the repated calls in a method. Are you ok with this before I go forward, to be clear: taking this: and changing to this: everywhere. I think this makes the test a bit more readable. |

|

Sure! that would be great, this code base needs some ❤️ |

|

I updated the code for the retrycap and cleaned up the tests |

|

Thanks @DrClick , I'll review both PRs later today/tomorrow. |

| 0 | ||

| } | ||

|

|

||

| val retryCap = cb.exponentialRetryCap match { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Consider the following: val retryCap = cb.exponentialRetryCap.getOrElse(Int.MaxValue)

|

|

||

| object SimpleOperation { | ||

|

|

||

| def operation(x: Int, y: Int): Int = x / y |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Because of division by zero, you are going to want this to be Try-able: def operation(x: Int, y: Int): Try[Int] = Try(x / y)

|

|

||

| def operation(x: Int, y: Int): Int = x / y | ||

|

|

||

| def asyncOperation(x: Int, y: Int): Future[Int] = Future { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Consider: def asyncOperation(x: Int, y: int): Future[Try[Int]] = Future(operation(x, y))

| simpleBuilders(retryDelay = Duration(2, TimeUnit.SECONDS)).map(_.build()).foreach { x => | ||

| implicit val cb: CircuitBreaker = x | ||

|

|

||

| (1 to 2).foreach { i => assertArithException(s"failure #$i") } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

For such a small range, I would just duplicate:

assertArithException("failure #$1")

assertArithException("failure #$2")

I added support for this. Thanks for a great project!