Deep OrderBook is an advanced cryptocurrency order book analysis toolkit that transforms order book data into representations that are locally correlated in both time and space, for use in quantitative analysis and deep learning applications.

While conventional technical analysis often relies on price-derived indicators like moving averages, order books contain rich organic information about market microstructure and supply/demand dynamics. Deep OrderBook captures this information by:

- Processing real-time and historical order book data

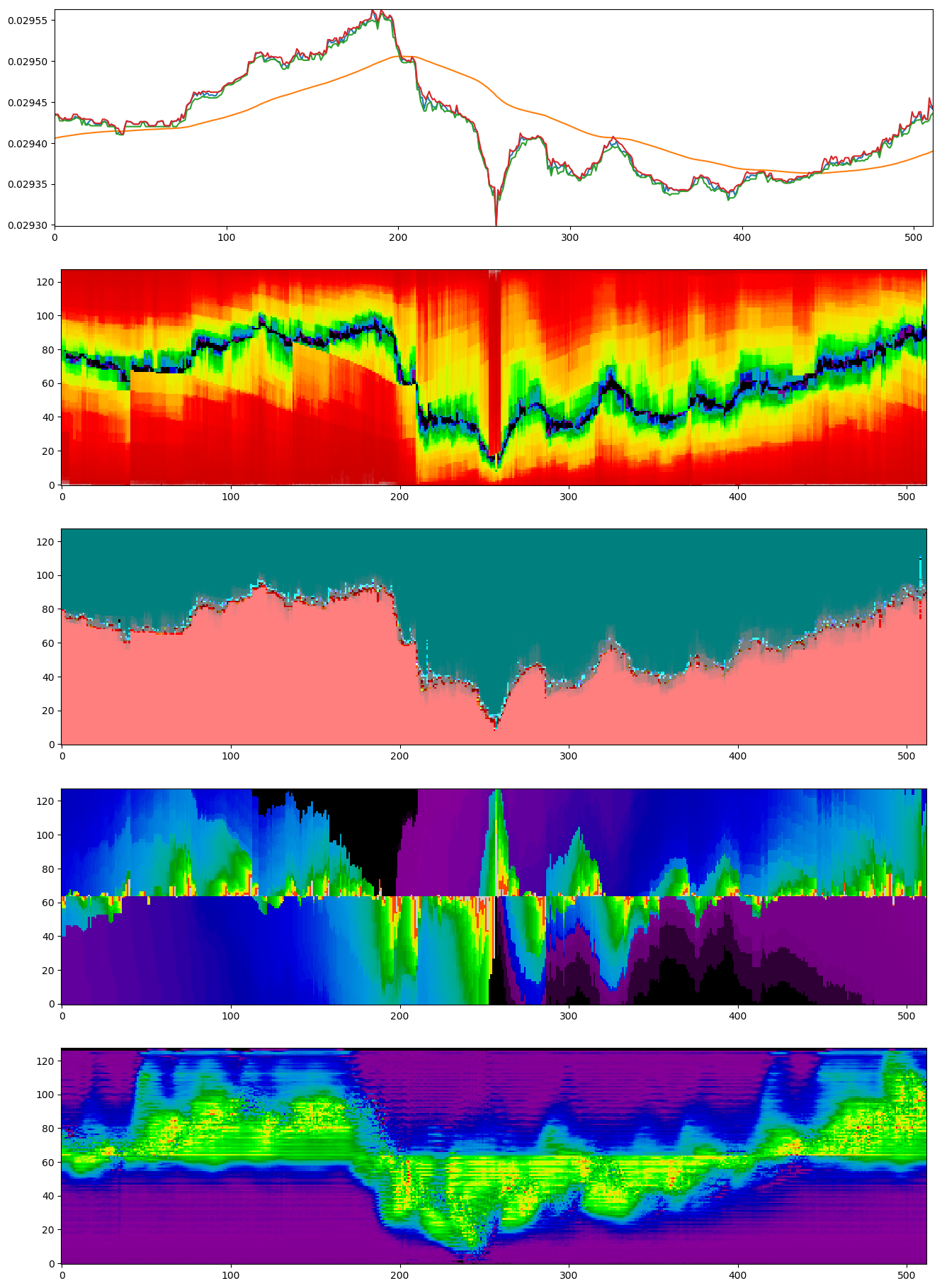

- Converting multi-dimensional order book states into feature-rich representations

- Enabling visualization and machine learning on these representations
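To make the idea of a "representation" concrete, here is a minimal sketch of how one order book snapshot could be bucketed into a fixed-width row centred on the mid price, with rows stacked over time into a 2D matrix. The function names, the sign convention (bids negative, asks positive), and the `tick` and `depth` parameters are assumptions for illustration, not the project's actual shaper API.

```python
from typing import Dict, List, Sequence, Tuple

Book = Dict[float, float]  # price -> resting size (hypothetical layout)


def snapshot_to_row(bids: Book, asks: Book, tick: float, depth: int) -> List[float]:
    """Bucket one snapshot into 2 * depth cells centred on the mid price.

    Cells [0, depth) hold bid sizes as negative numbers, nearest the mid on
    the right; cells [depth, 2 * depth) hold ask sizes as positive numbers.
    """
    mid = (max(bids) + min(asks)) / 2
    row = [0.0] * (2 * depth)
    for price, size in bids.items():
        k = int((mid - price) / tick)  # distance below the mid, in ticks
        if 0 <= k < depth:
            row[depth - 1 - k] -= size
    for price, size in asks.items():
        k = int((price - mid) / tick)  # distance above the mid, in ticks
        if 0 <= k < depth:
            row[depth + k] += size
    return row


def snapshots_to_matrix(
    snapshots: Sequence[Tuple[Book, Book]], tick: float, depth: int
) -> List[List[float]]:
    """Stack rows so that axis 0 is time and axis 1 is price distance."""
    return [snapshot_to_row(b, a, tick, depth) for b, a in snapshots]
```

Neighbouring cells in such a matrix are close in both time and price, which is what makes image-style models applicable to it.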


- Live Data Collection: Connect to cryptocurrency exchanges (Coinbase, etc.) for real-time order book data

- Historical Replay: Replay and analyze historical order book data with precise timing

- Visualization Tools: Rich visualizations of order book dynamics and patterns

- Machine Learning Integration: Pre-process order book data for ML applications

- Asyncio-based Architecture: Non-blocking I/O for efficient data processing

- Type-Safe Implementation: Fully type-annotated codebase with Pydantic data validation
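As an illustration of how historical replay and the asyncio design can fit together, the sketch below replays timestamped events through an async generator, sleeping between events so that the gaps match the recording. The `replay` function, its `speed` parameter, and the `(timestamp, payload)` event shape are hypothetical and are not taken from the project's code.

```python
import asyncio
from typing import AsyncIterator, Iterable, Optional, Tuple


async def replay(
    events: Iterable[Tuple[float, dict]], speed: float = 1.0
) -> AsyncIterator[dict]:
    """Yield recorded payloads, pausing so inter-event gaps match the recording.

    `events` holds (timestamp_in_seconds, payload) pairs in time order;
    `speed` > 1 plays the recording back faster than real time.
    """
    previous_ts: Optional[float] = None
    for ts, payload in events:
        if previous_ts is not None:
            # Non-blocking wait: other tasks keep running while we pause.
            await asyncio.sleep(max(0.0, ts - previous_ts) / speed)
        previous_ts = ts
        yield payload
```

A consumer would iterate with `async for update in replay(events, speed=10.0): ...` inside a coroutine.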

- Python 3.12 or higher

- API credentials (for live data)

- Clone the repository:

      git clone https://github.com/gQuantCoder/deep_orderbook.git
      cd deep_orderbook

- Set up API credentials: create a file credentials/coinbase.txt with your API details:

      api_key="organizations/xxxxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/apiKeys/xxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx"
      api_secret="-----BEGIN EC PRIVATE KEY-----\xxxxxxxxxxxxxxxxx...xxxxxxxxxxxxxxxxxxx\n-----END EC PRIVATE KEY-----\n"

- Install the package:

      pip install -r requirements.txt
      pip install -e .
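The credentials file described above consists of `key="value"` lines, with the private key written using literal `\n` escapes. The following sketch shows one way such a file could be parsed into a dictionary. It is an assumption about the format only: `parse_credentials` is a hypothetical helper, and the project may load the file differently.

```python
import re
from typing import Dict

# Matches lines of the form: name="value"
_PAIR = re.compile(r'^\s*(\w+)\s*=\s*"(.*)"\s*$')


def parse_credentials(text: str) -> Dict[str, str]:
    """Parse key="value" lines, turning literal \\n escapes into newlines."""
    creds: Dict[str, str] = {}
    for line in text.splitlines():
        match = _PAIR.match(line)
        if match:
            key, value = match.groups()
            # PEM keys need real line breaks, not the two characters "\" + "n".
            creds[key] = value.replace("\\n", "\n")
    return creds
```

Typical use would be `parse_credentials(Path("credentials/coinbase.txt").read_text())`, keeping the file itself out of version control.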

To capture live order book data from exchanges, use the recorder module:

    # Start the recorder with default settings
    python -m deep_orderbook.consumers.recorder

    # Alternatively, you can run it directly
    python deep_orderbook/consumers/recorder.py

The recorder will:

- Connect to the configured exchanges

- Save order book updates and trades to parquet files

- Store files in the configured data directory

- Automatically rotate files at midnight
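The midnight rotation described above can be thought of as deriving the output path from the current date and opening a new file whenever that path changes. The sketch below illustrates this with the standard library only. The directory layout, the file naming, the `DailyRotator` class, and the use of UTC are all assumptions; the recorder's real naming scheme and time zone may differ.

```python
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional, Tuple


def daily_path(data_dir: Path, symbol: str, now: datetime) -> Path:
    """Build a per-symbol, per-day file path such as data/BTC-USD/2024-01-01.parquet."""
    return data_dir / symbol / f"{now:%Y-%m-%d}.parquet"


class DailyRotator:
    """Tracks the active file and reports when a new day requires a new file."""

    def __init__(self, data_dir: Path, symbol: str) -> None:
        self.data_dir = data_dir
        self.symbol = symbol
        self.current: Optional[Path] = None

    def path_for(self, now: datetime) -> Tuple[Path, bool]:
        """Return (path, is_new); is_new is True on first use and after midnight."""
        path = daily_path(self.data_dir, self.symbol, now)
        is_new = path != self.current
        self.current = path
        return path, is_new
```

A writer loop would call `path_for(datetime.now(timezone.utc))` before each flush, then close the old file and open the new one whenever `is_new` is true.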

The project includes several Jupyter notebooks for different analysis scenarios:

- Live Analysis: live.ipynb - Connect to exchange and visualize live order book

- Historical Replay: replay.ipynb - Replay and analyze historical order book data

- Machine Learning: learn.ipynb - Examples of applying ML to order book features

Deep OrderBook is built on an event-driven architecture:

    ┌─────────────┐     ┌───────────┐     ┌─────────┐     ┌──────────────┐
    │ Data Sources│────►│ Processors│────►│ Shapers │────►│ Visualizers/ │
    │ (Exchanges) │     │           │     │         │     │ ML Models    │
    └─────────────┘     └───────────┘     └─────────┘     └──────────────┘

- Data Sources: Exchange APIs, historical data files

- Processors: Convert raw data to standard format

- Shapers: Transform order book snapshots into feature matrices

- Consumers: Visualization tools, ML models, trading signals
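The four stages listed above can be pictured as a chain of async generators, each consuming the previous stage's stream. The sketch below is a toy illustration of that wiring only: the stage functions, the raw message fields (`px`, `qty`), and the two-element feature row are invented for the example and do not reflect the project's real classes or data formats.

```python
import asyncio
from typing import AsyncIterator, List


async def source() -> AsyncIterator[dict]:
    """Stand-in for an exchange feed emitting raw, exchange-specific messages."""
    for raw in (
        {"side": "bid", "px": "100.0", "qty": "2"},
        {"side": "ask", "px": "100.5", "qty": "1"},
    ):
        await asyncio.sleep(0)  # yield control, as a real network read would
        yield raw


async def processor(raw_stream: AsyncIterator[dict]) -> AsyncIterator[dict]:
    """Convert raw messages to a standard format with numeric fields."""
    async for raw in raw_stream:
        yield {"side": raw["side"], "price": float(raw["px"]), "size": float(raw["qty"])}


async def shaper(updates: AsyncIterator[dict]) -> AsyncIterator[List[float]]:
    """Turn each standardized update into a small feature row (signed size)."""
    async for update in updates:
        sign = -1.0 if update["side"] == "bid" else 1.0
        yield [update["price"], sign * update["size"]]


async def consumer(features: AsyncIterator[List[float]]) -> List[List[float]]:
    """Stand-in for a visualizer or model: here it only collects the rows."""
    return [row async for row in features]


async def main() -> List[List[float]]:
    return await consumer(shaper(processor(source())))


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Because each stage only awaits the one before it, a slow consumer naturally slows the whole chain without blocking the event loop.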

Run the test suite:

    pytest

Contributions are welcome! Please feel free to submit a Pull Request.

This project is licensed under the MIT License - see the LICENSE file for details.

- Thanks to all contributors and the quant trading community