This quickstart mainly consists of two custom microservices:

- quandl: Fetches data from Quandl and stores it in Hasura

- metabase: Runs Metabase, which can be used to visualise the data fetched by the quandl service

Follow along below to get the setup working on your cluster and also to understand how this quickstart works.

- Ensure that you have the hasura CLI tool installed on your system:

$ hasura version

Once you have installed the hasura CLI tool, log in to your Hasura account:

$ # Log in if you haven't already

$ hasura login

- You should have Node.js installed on your system. You can check this by running:

# To check the version of node installed

$ node -v

# Node comes with npm. To check the version of npm installed

$ npm -v

- You should also have git installed:

$ git --version

$ # Run the quickstart command to get the project

$ hasura quickstart anirudhm/quandl-metabase-time-series

$ # Navigate into the Project

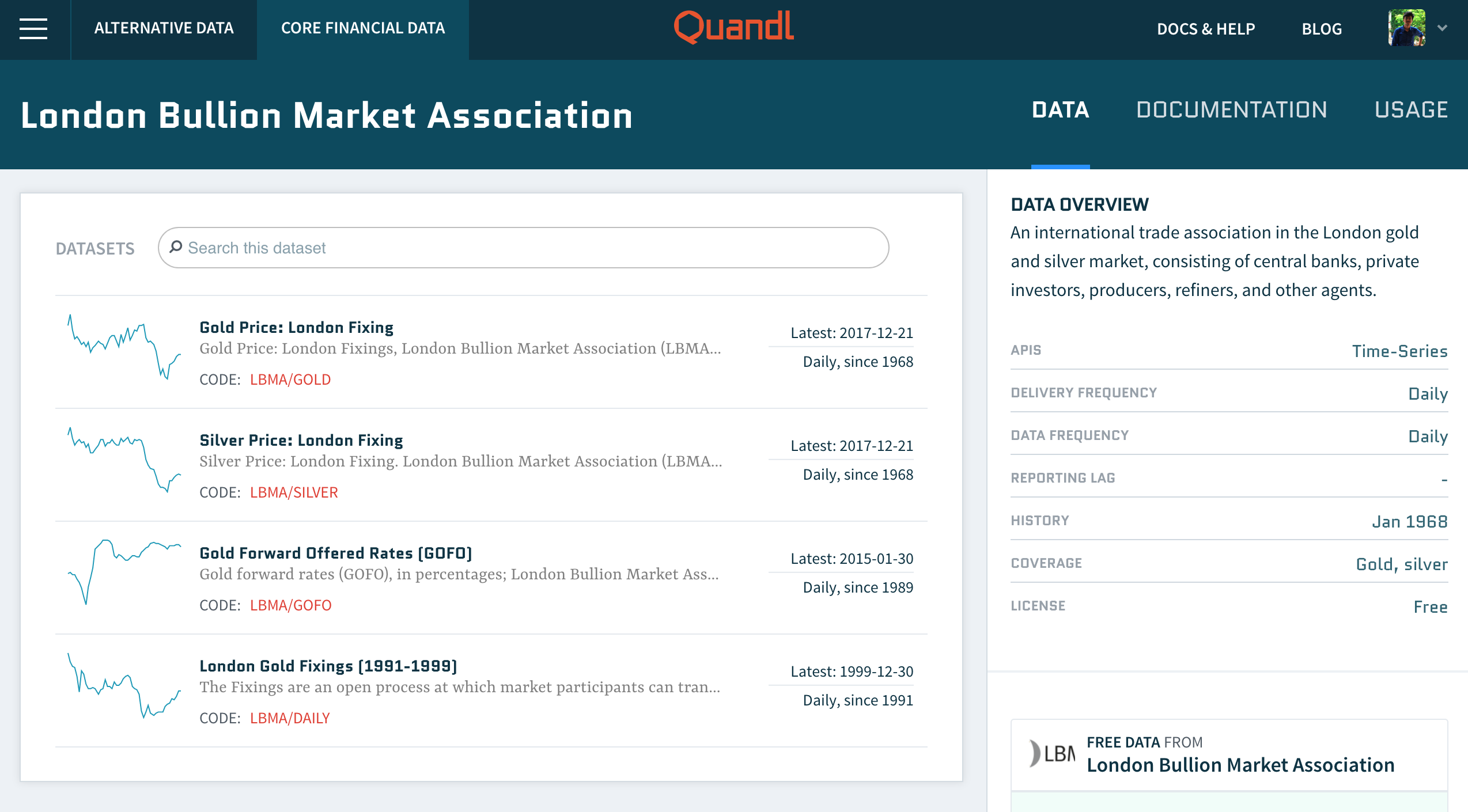

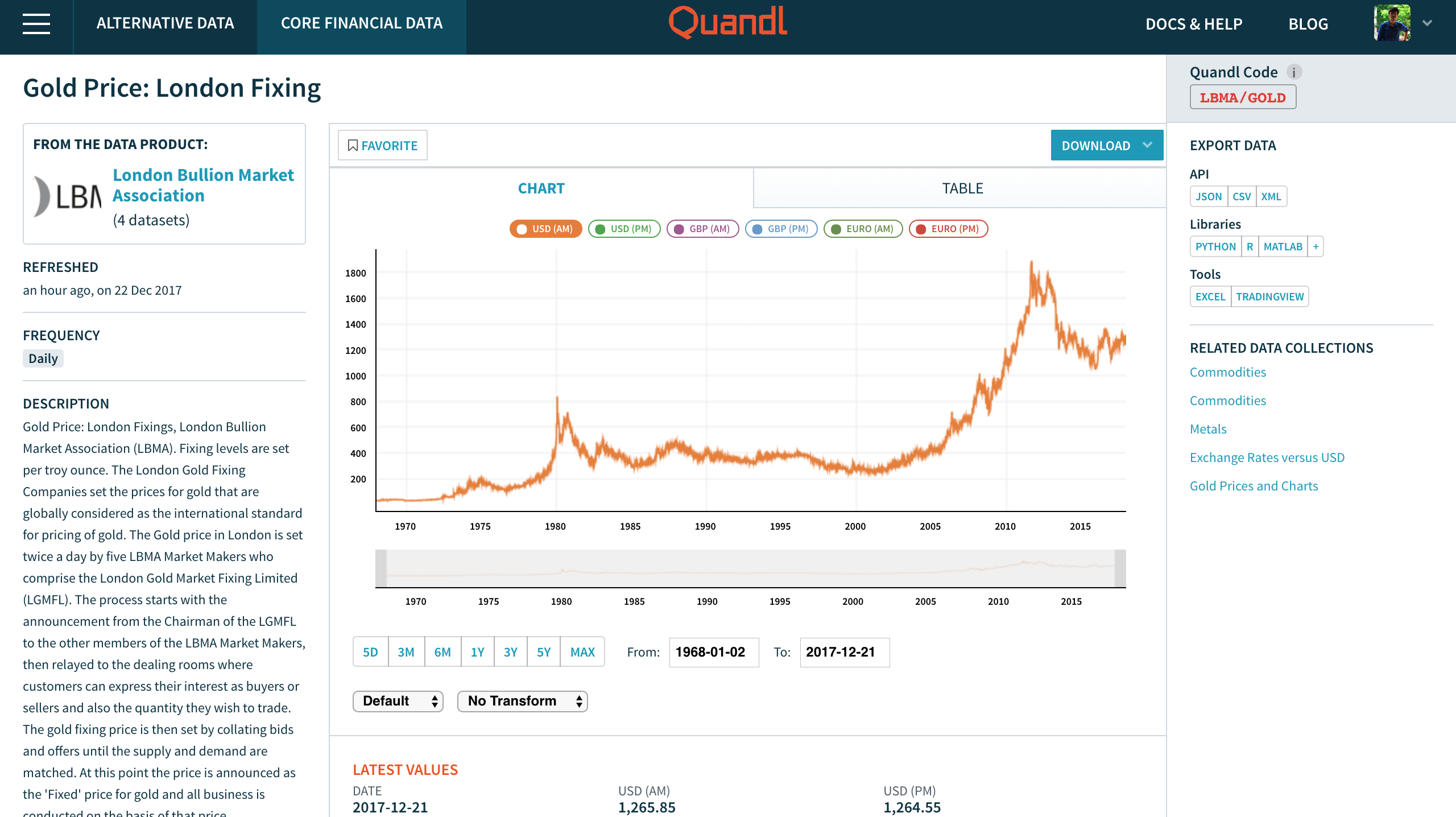

$ cd quandl-metabase-time-seriesBefore you begin, head over to Quandl and select the dataset you would like to use. In this case, we are going with the Gold Price: London Fixing from London Bullion Market Association dataset. Keep in mind the Vendor Code (In this case it is, LBMA) and Datatable Code (GOLD in this case) for the dataset.

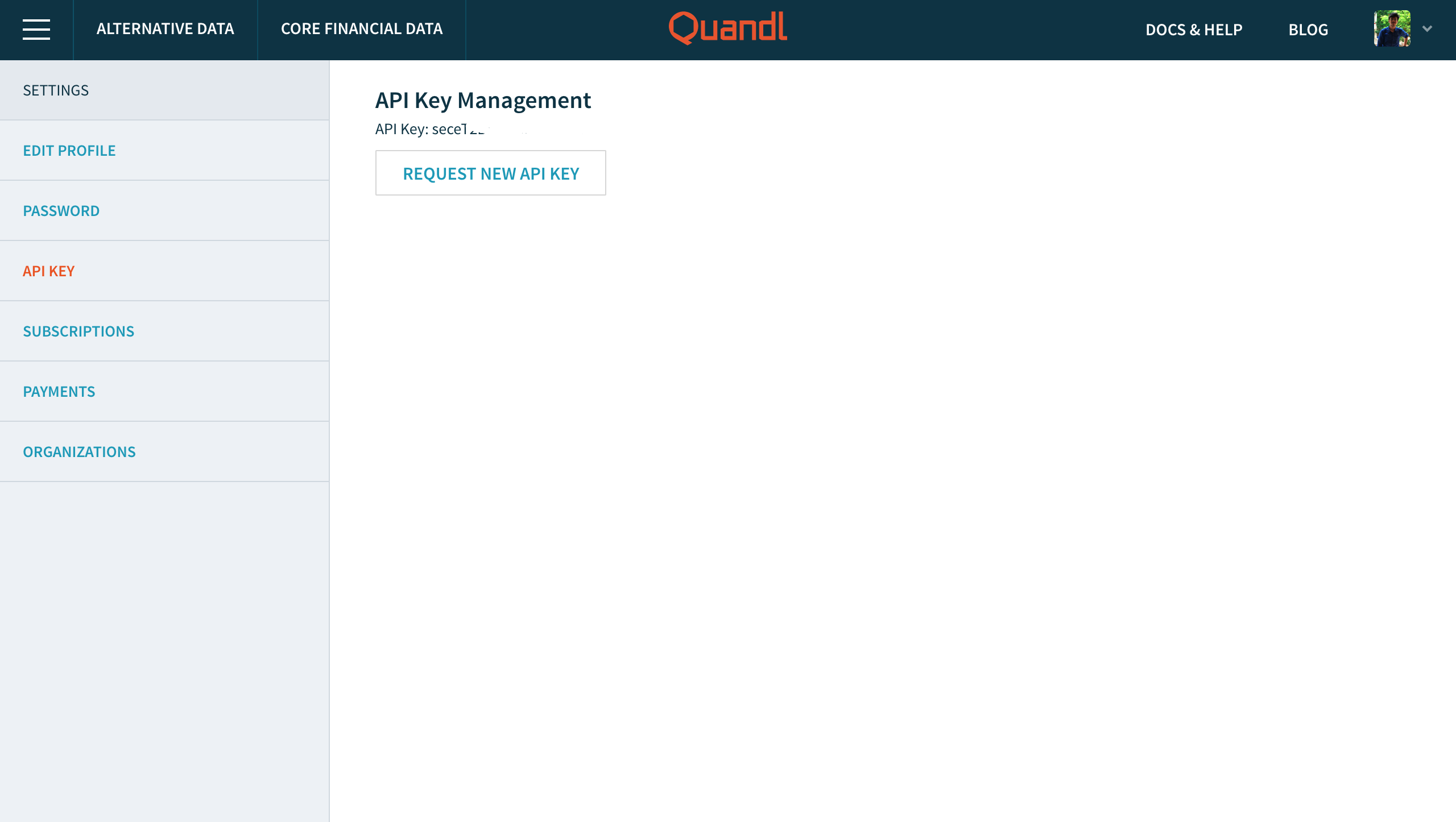

To fetch the data, you need an API key, which you get by signing up for a Quandl account.

Make a note of your API key.
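For reference, the quandl service ultimately has to call Quandl's REST API with this key. The sketch below builds such a request URL from the Vendor Code, the dataset code and the API key. It assumes Quandl's v3 time-series endpoint of the form `https://www.quandl.com/api/v3/datasets/<VENDOR>/<CODE>.json?api_key=...`; Quandl has since become Nasdaq Data Link, so check the current API documentation before relying on the host or path. The function name `quandl_dataset_url` is hypothetical and is not taken from the quickstart's code.

```python
from urllib.parse import quote, urlencode

# Assumption: Quandl's v3 time-series ("datasets") endpoint. Quandl is now
# Nasdaq Data Link, so the host and path may have changed.
QUANDL_DATASETS_BASE = "https://www.quandl.com/api/v3/datasets"


def quandl_dataset_url(vendor_code: str, dataset_code: str, api_key: str, fmt: str = "json") -> str:
    """Build a request URL for one Quandl dataset, e.g. LBMA/GOLD (hypothetical helper)."""
    # Percent-encode the path segments and the query string so unusual codes or keys stay valid.
    path = f"{quote(vendor_code)}/{quote(dataset_code)}.{fmt}"
    query = urlencode({"api_key": api_key})
    return f"{QUANDL_DATASETS_BASE}/{path}?{query}"


# Example: the gold price dataset used in this guide, with a placeholder key.
print(quandl_dataset_url("LBMA", "GOLD", "YOUR_API_KEY"))
```

Keeping the key as a parameter, rather than hard-coding it, is what lets it come from Hasura secrets as described next.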

Sensitive data such as API keys and tokens should be stored in Hasura secrets and then accessed as environment variables in your app. Do the following to add your Quandl API key to Hasura secrets:

$ # Paste the following into your terminal

$ # Replace <API-KEY> with the API Key you got from Quandl

$ hasura secret update quandl.api.key <API-KEY>

This value is injected into the quandl service as an environment variable (QUANDL_API_KEY) like so:

env:
- name: QUANDL_API_KEY
  valueFrom:
    secretKeyRef:
      key: quandl.api.key
      name: hasura-secrets

See the k8s.yaml file inside microservices/quandl/app for the complete file.
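Inside the quandl service, the key is then just an ordinary environment variable. The service's own source isn't reproduced in this guide (and it may not be written in Python), so the sketch below only illustrates the pattern: read QUANDL_API_KEY at startup and fail early with a clear message if the secret was not injected. The helper name `load_quandl_api_key` is hypothetical.

```python
import os


def load_quandl_api_key() -> str:
    """Read the Quandl API key injected from Hasura secrets (hypothetical helper)."""
    key = os.environ.get("QUANDL_API_KEY", "").strip()
    if not key:
        # Fail fast: this guide requires an API key to fetch data from Quandl.
        raise RuntimeError(
            "QUANDL_API_KEY is not set; add it with "
            "`hasura secret update quandl.api.key <API-KEY>` before deploying."
        )
    return key
```

Failing at startup surfaces a missing secret immediately, instead of as a confusing error on the first data request.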

Next, let's deploy the app onto your cluster.

Note: Deployment will not work unless you have followed the previous steps correctly.

$ # Ensure that you are in the quandl-metabase-time-series directory

$ # Git add, commit & push to deploy to your cluster

$ git add .

$ git commit -m 'First commit'

$ git push hasura master

Once the above commands complete successfully, your cluster will have two microservices, metabase and quandl, running. To get their URLs, run:

$ # Run this in the quandl-metabase-time-series directory

$ hasura microservice list

• Getting microservices...

• Custom microservices:

NAME       STATUS    INTERNAL-URL      EXTERNAL-URL
metabase   Running   metabase.default  http://metabase.boomerang68.hasura-app.io
quandl     Running   quandl.default    http://quandl.boomerang68.hasura-app.io

• Hasura microservices:

NAME            STATUS    INTERNAL-URL           EXTERNAL-URL
auth            Running   auth.hasura            http://auth.boomerang68.hasura-app.io
data            Running   data.hasura            http://data.boomerang68.hasura-app.io
filestore       Running   filestore.hasura       http://filestore.boomerang68.hasura-app.io
gateway         Running   gateway.hasura
le-agent        Running   le-agent.hasura
notify          Running   notify.hasura          http://notify.boomerang68.hasura-app.io
platform-sync   Running   platform-sync.hasura
postgres        Running   postgres.hasura
session-redis   Running   session-redis.hasura
sshd            Running   sshd.hasura

You can access each service at its EXTERNAL-URL.
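If you want to script the remaining steps, the listing above is easy to parse. This sketch assumes the whitespace-separated column layout shown in this guide's output; CLI output is not a stable interface, so treat it as illustrative only. Services that have no EXTERNAL-URL (such as gateway) are left out. The function name `external_urls` is hypothetical.

```python
def external_urls(listing: str) -> dict:
    """Map microservice NAME -> EXTERNAL-URL from `hasura microservice list` output."""
    urls = {}
    for line in listing.splitlines():
        parts = line.split()
        # Data rows have NAME, STATUS, INTERNAL-URL and, optionally, EXTERNAL-URL.
        # The header row and the "•" lines are skipped because their fourth
        # column (if any) is not a URL.
        if len(parts) == 4 and parts[3].startswith(("http://", "https://")):
            urls[parts[0]] = parts[3]
    return urls


SAMPLE = """\
• Custom microservices:
NAME       STATUS    INTERNAL-URL      EXTERNAL-URL
metabase   Running   metabase.default  http://metabase.boomerang68.hasura-app.io
quandl     Running   quandl.default    http://quandl.boomerang68.hasura-app.io
• Hasura microservices:
gateway    Running   gateway.hasura
"""

print(external_urls(SAMPLE)["quandl"])
```

In practice you would feed it the captured output of the command rather than a hard-coded sample.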

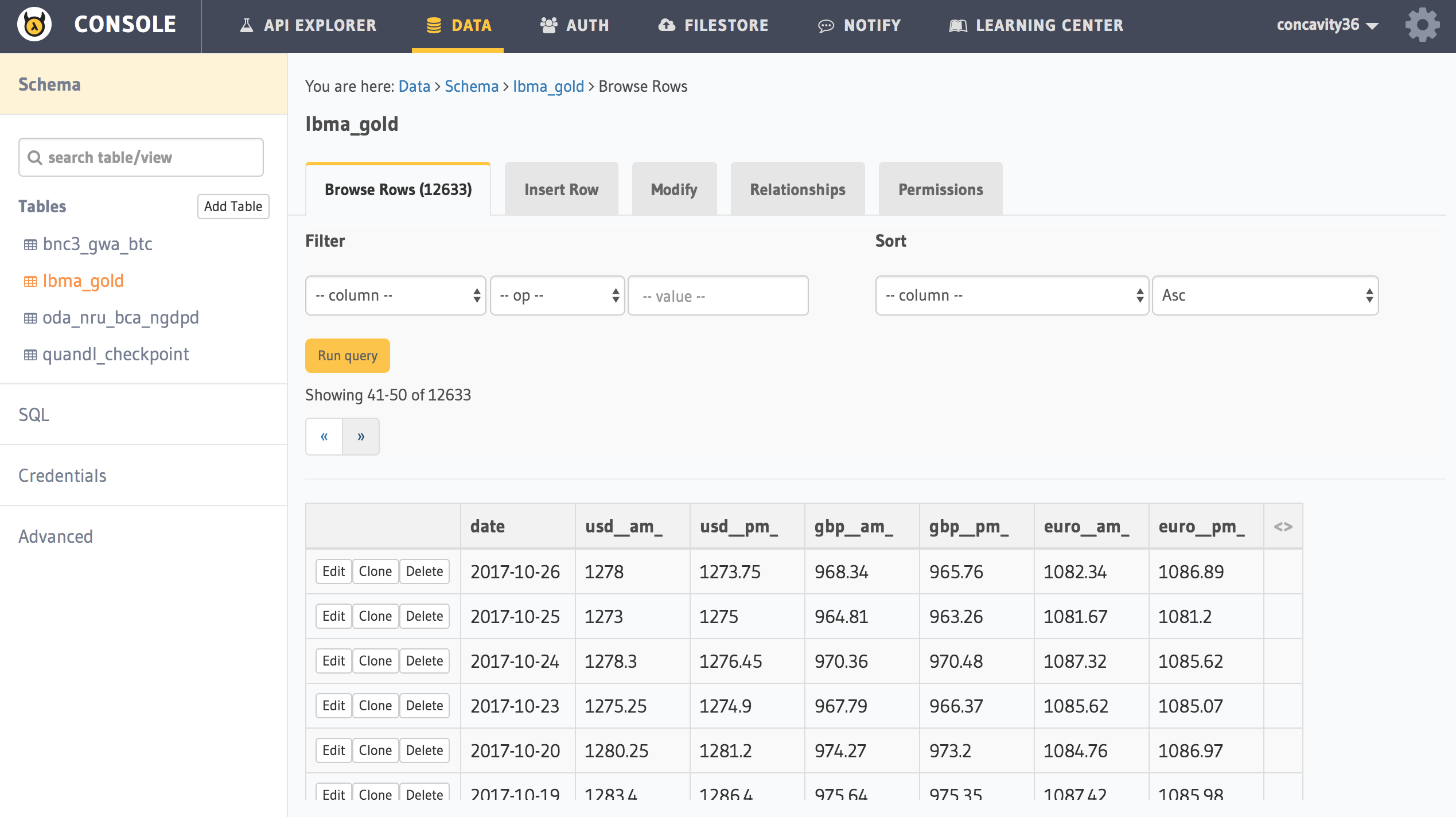

At this point, the database does not yet contain any data from Quandl. You can confirm this in your API console, which will show a single table, quandl_checkpoint, that stores the current offset of the data stored in Hasura.

$ # Run this in the quandl-metabase directory

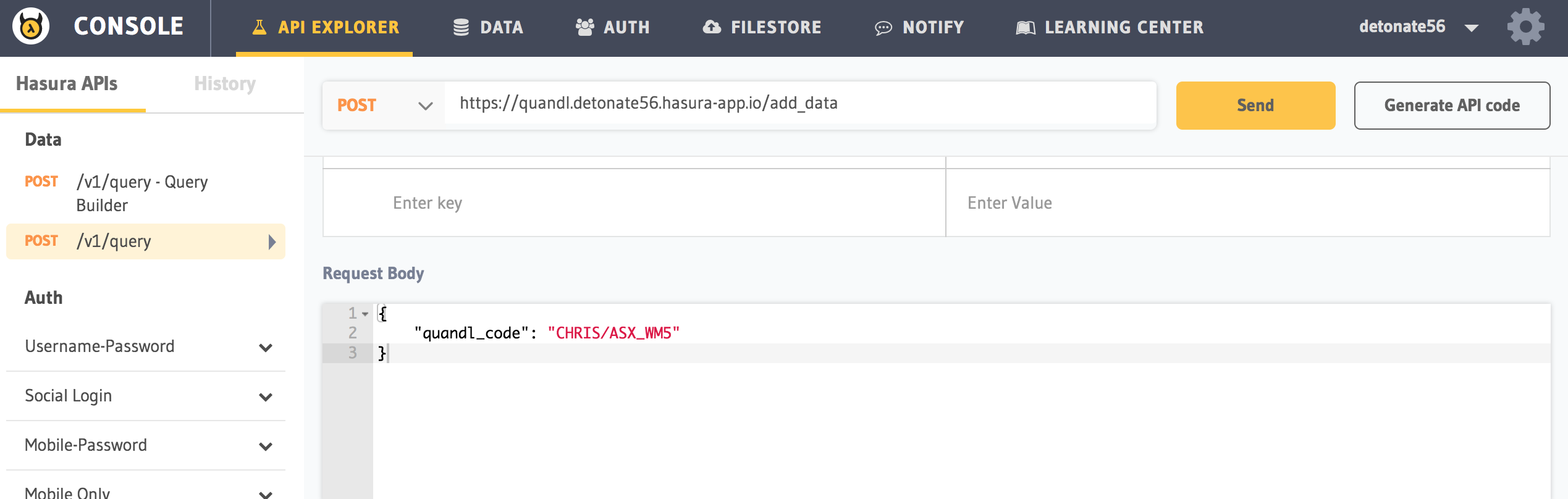

$ hasura api-consoleLet's use our quandl service to insert some data. To do this:

POST https://quandl.<CLUSTER-NAME>.hasura-app.io/add_data

Remember to replace <CLUSTER-NAME> with your own cluster name (in this case, http://quandl.boomerang68.hasura-app.io/add_data). Send the following JSON body:

{
  "quandl_code": "LBMA/GOLD"
}

You can use an HTTP client of your choice to make this request. Alternatively, you can use the API Explorer in the Hasura API console.
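As one example of such a client, here is a sketch using only Python's standard library. It takes the quandl service's EXTERNAL-URL as its base, so it works whether your cluster is reached over http or https. Sending the body with a `Content-Type: application/json` header is an assumption about what the service expects; the helper names `build_add_data_request` and `send` are hypothetical.

```python
import json
import urllib.request


def build_add_data_request(quandl_base_url: str, quandl_code: str) -> urllib.request.Request:
    """Build the POST /add_data request described above (it is not sent here)."""
    url = quandl_base_url.rstrip("/") + "/add_data"
    body = json.dumps({"quandl_code": quandl_code}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},  # assumption: service expects JSON
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the response body as text."""
    # A generous timeout (an arbitrary choice), since the service fetches a whole dataset.
    with urllib.request.urlopen(request, timeout=60) as response:
        return response.read().decode("utf-8")


# Usage (replace the base URL with your quandl service's EXTERNAL-URL):
#   req = build_add_data_request("http://quandl.boomerang68.hasura-app.io", "LBMA/GOLD")
#   print(send(req))
```

Building the request separately from sending it makes it easy to inspect the URL and body before hitting your cluster.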

Once the above API call succeeds, head back to your API console and you will see a new table called lbma_gold with about 12,600 rows of data in it.
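How the quandl service pages through Quandl isn't shown in this guide, but the quandl_checkpoint table suggests an offset-based approach: fetch a page, store it, record the new offset, and resume from that offset next time. The sketch below illustrates that general pattern with in-memory stand-ins. `fetch_page`, the dict used as the checkpoint table, the list used as the data table and the page size are all hypothetical, not the service's actual code.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a call to Quandl: (offset, limit) -> list of rows.
FetchPage = Callable[[int, int], List[dict]]


def sync_from_checkpoint(
    fetch_page: FetchPage,
    checkpoints: Dict[str, int],  # stands in for the quandl_checkpoint table
    quandl_code: str,
    table: List[dict],            # stands in for a data table such as lbma_gold
    page_size: int = 1000,
) -> int:
    """Copy rows page by page, resuming from the stored offset; return the new offset."""
    offset = checkpoints.get(quandl_code, 0)
    while True:
        rows = fetch_page(offset, page_size)
        if not rows:
            break  # no more data upstream
        table.extend(rows)
        offset += len(rows)
        # Record progress after every page so an interrupted sync can resume here.
        checkpoints[quandl_code] = offset
    return offset


# Demo with a fake source of 2,500 rows.
source = [{"day": i} for i in range(2500)]
checkpoints: Dict[str, int] = {}
table: List[dict] = []


def fetch(off: int, lim: int) -> List[dict]:
    return source[off:off + lim]


print(sync_from_checkpoint(fetch, checkpoints, "LBMA/GOLD", table))  # 2500
print(sync_from_checkpoint(fetch, checkpoints, "LBMA/GOLD", table))  # 2500, nothing re-fetched
```

With a real database, inserting a page and updating its checkpoint would ideally happen in one transaction, so the stored offset can never drift from the rows actually saved.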

Head over to the EXTERNAL-URL of your metabase service.

Enter your details, click Add my data later in Step 2, and complete the sign-up process.

You will now reach your Dashboard.

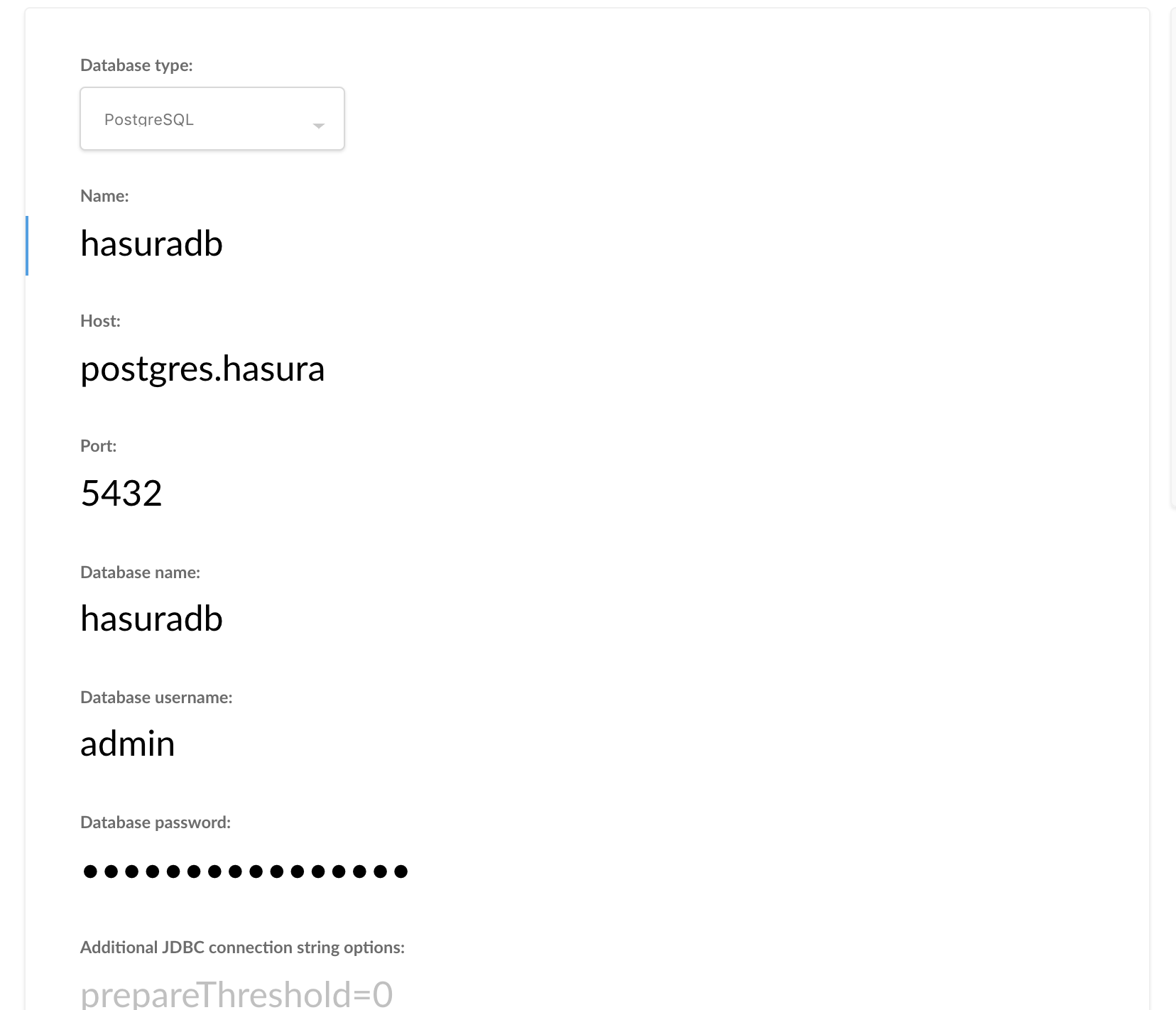

Now, let's connect our Hasura database to Metabase.

To get your database password, run the following in your terminal:

$ # Run this in the quandl-metabase-time-series directory

$ hasura secret list

In the list that comes up, the value for postgres.password is your database password. Paste it into the form and click Save.

Your database is now integrated.

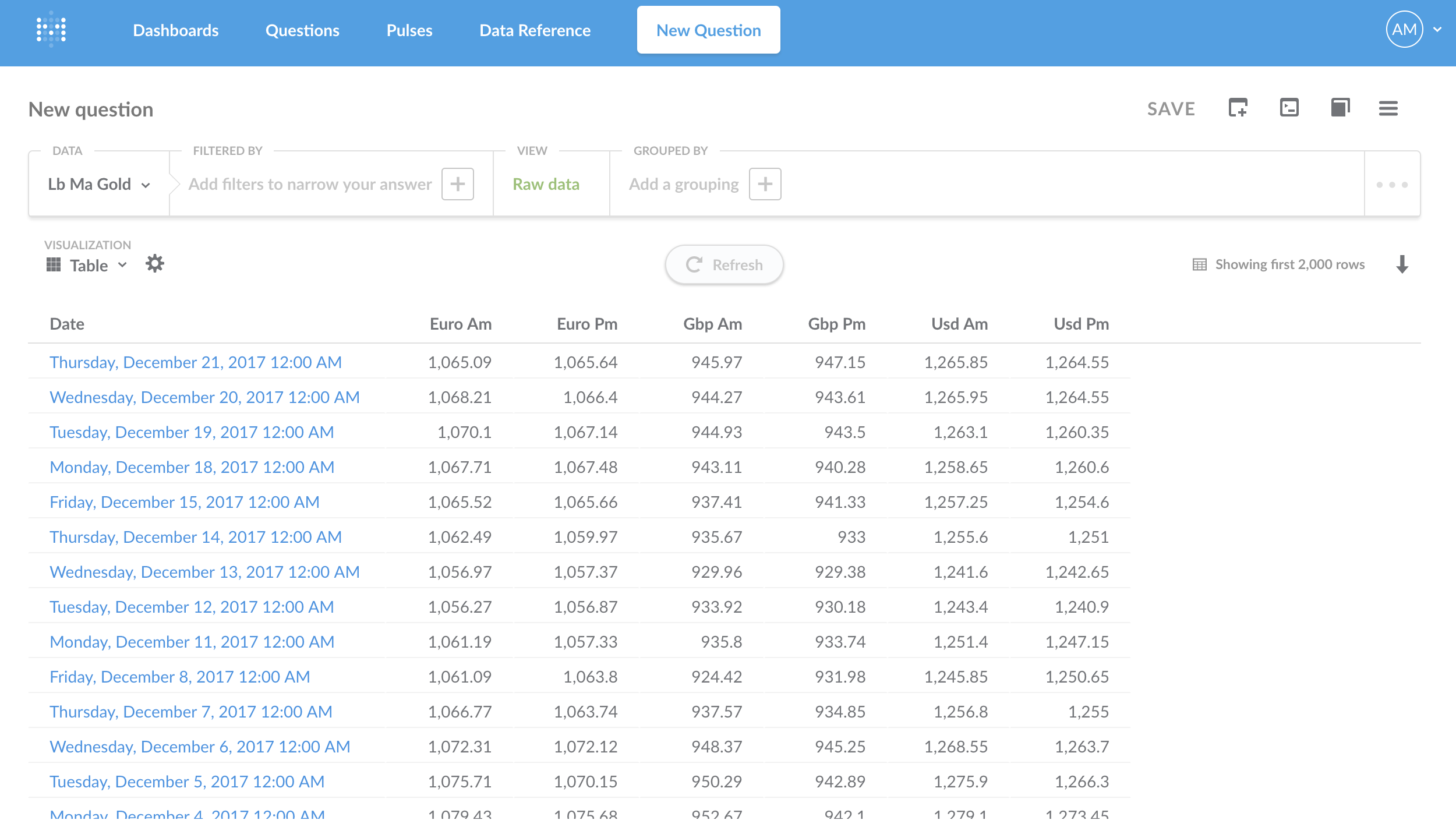

Click New question and select Custom. In the Data dropdown, select hasuradb Public and then search for Lb Ma Gold.

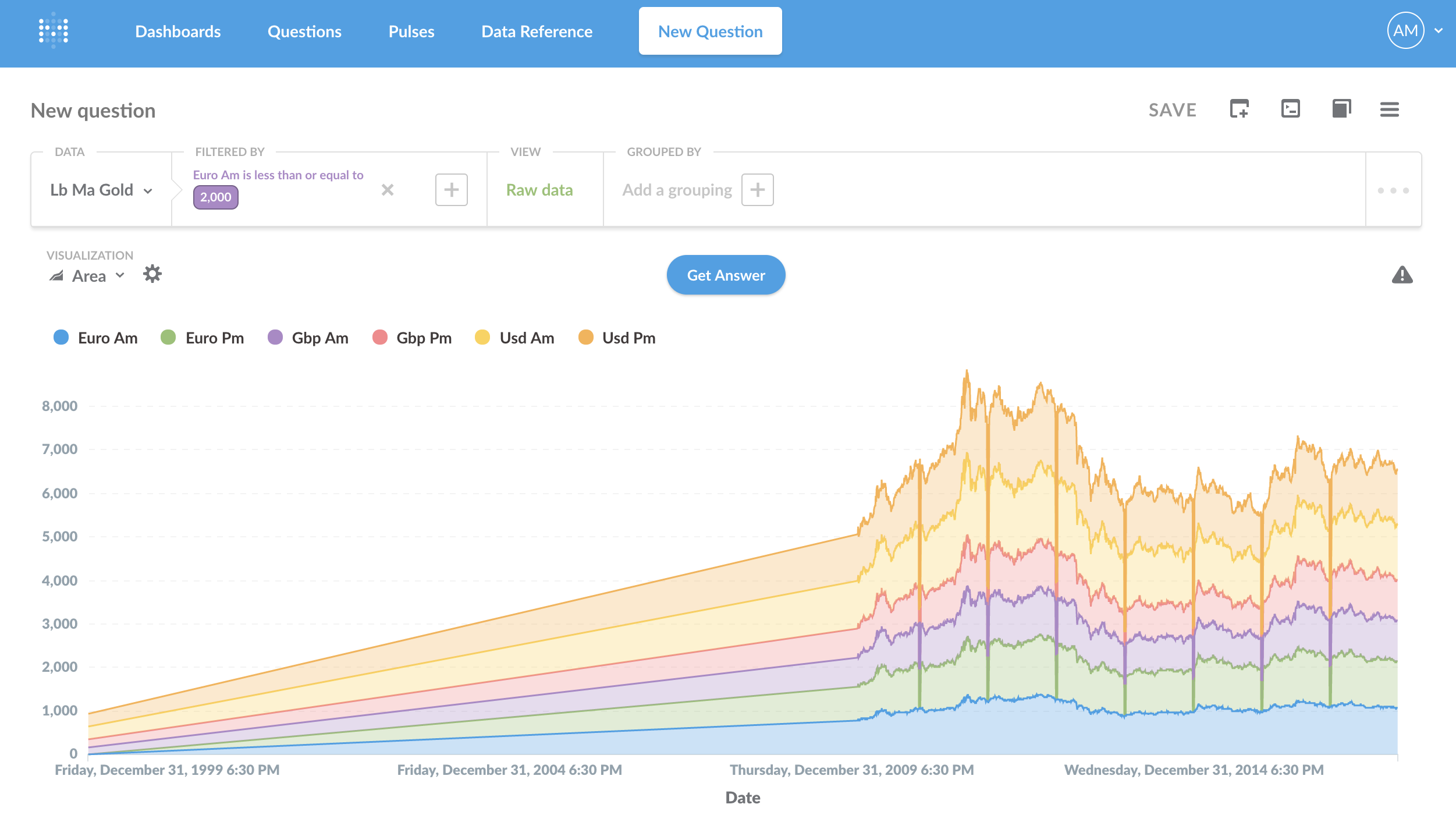

Try different types of visualizations to change how the data is displayed.

And that's it! Get more time-series datasets from Quandl, add them to the database, and draw conclusions from the visualizations!