---
title: "GSoC ’25 Week 02 Update by Mebin J Thattil"
excerpt: "Fine-Tuning, Deploying, Testing & Evaluations"
category: "DEVELOPER NEWS"
date: "2025-06-14"
slug: "2025-06-14-gsoc-25-mebinthattil-week2"
author: "@/constants/MarkdownFiles/authors/mebin-thattil.md"
tags: "gsoc25,sugarlabs,week02,mebinthattil,speak_activity"
image: "assets/Images/GSOCxSpeak.png"
---

<!-- markdownlint-disable -->

# Week 02 Progress Report by Mebin J Thattil

**Project:** [Speak Activity](https://github.com/sugarlabs/speak)
**Mentors:** [Chihurumnaya Ibiam](https://github.com/chimosky), [Kshitij Shah](https://github.com/kshitijdshah99)
**Assisting Mentors:** [Walter Bender](https://github.com/walterbender), [Devin Ulibarri](https://github.com/pikurasa)
**Reporting Period:** 2025-06-08 - 2025-06-14

---

## Goals for This Week

- **Goal 1:** Set up AWS for fine-tuning.
- **Goal 2:** Fine-tune a small model on a small dataset.
- **Goal 3:** Deploy the model on AWS and create an API endpoint.
- **Goal 4:** Test the endpoint using a Python script.
- **Goal 5:** Evaluate the model responses and plan the next steps.

---

## This Week’s Achievements

1. **Set Up AWS for Fine-Tuning**
   - Set up AWS SageMaker.
   - Provisioned GPUs on AWS SageMaker to fine-tune the Llama3-1B foundation model.

2. **Dataset & Cleaning**
   - Used an open dataset of conversations between a student and a teacher.
   - Cleaned the dataset and wrote a small script to convert it into the format Llama needs for fine-tuning.
   - The dataset, along with the script, is available [here](https://github.com/mebinthattil/Education-Dialogue-Dataset).

| 44 | + |

| 45 | +3. **Fine-tuning** |

| 46 | + - Fine-tuned the model on a small set of the dataset, just to see how it performs and to get familar with AWS SageMaker. |

| 47 | + - The training job ran on a `ml.g5.2xlarge` instance. |

| 48 | + - The hyperparameters that were set so as to reduce memory footprint and mainly to test things. I'll list the hyperparameters, hoping this would serve as documentation for future fine-tuning. |

   **Hyperparameters:**

   | Name | Value |
   |----------------------------------|----------------------------------------------------|
   | add_input_output_demarcation_key | True |
   | chat_dataset | True |
   | chat_template | Llama3.1 |
   | enable_fsdp | False |
   | epoch | 5 |
   | instruction_tuned | False |
   | int8_quantization | True |
   | learning_rate | 0.0001 |
   | lora_alpha | 8 |
   | lora_dropout | 0.08 |
   | lora_r | 2 |
   | max_input_length | -1 |
   | max_train_samples | -1 |
   | max_val_samples | -1 |
   | per_device_eval_batch_size | 1 |
   | per_device_train_batch_size | 4 |
   | preprocessing_num_workers | None |
   | sagemaker_container_log_level | 20 |
   | sagemaker_job_name | jumpstart-dft-meta-textgeneration-l-20250607-200133 |
   | sagemaker_program | transfer_learning.py |
   | sagemaker_region | ap-south-1 |
   | sagemaker_submit_directory | /opt/ml/input/data/code/sourcedir.tar.gz |
   | seed | 10 |
   | target_modules | q_proj,v_proj |
   | train_data_split_seed | 0 |
   | validation_split_ratio | 0.2 |

4. **Saving the model**
   - The safetensors weights and other model files were saved to an AWS S3 bucket, at `s3://sagemaker-ap-south-1-021891580293/jumpstart-run2/output/model/`.

5. **Deploying the model**
   - The model was deployed on AWS SageMaker, and an API endpoint was created for it.

6. **Testing the model**
   - Wrote a Python script to test the model through the API endpoint.

7. **Evaluation**
   - Tested the model's responses with the same questions used in my earlier [benchmark](https://llm-benchmarking-sugar.streamlit.app/).

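
The conversion in step 2 is done by the script in the linked repository. To give a feel for what such a conversion involves, here is a minimal Python sketch; it is not that script. It assumes a hypothetical input shape (each conversation is a list of `{"speaker": ..., "text": ...}` turns labelled `Student` or `Teacher`) and the JSON Lines layout with a `dialog` list of `{"role", "content"}` messages that SageMaker JumpStart describes for Llama chat fine-tuning. Both assumptions should be checked against the real dataset and the JumpStart documentation.

```python
import json
from typing import Iterable

# Hypothetical speaker labels in the source data, mapped to chat roles.
ROLE_MAP = {"Student": "user", "Teacher": "assistant"}


def to_chat_record(turns: list[dict]) -> dict:
    """Convert one conversation (a list of {"speaker", "text"} dicts) into a
    {"dialog": [{"role", "content"}, ...]} record."""
    dialog = []
    for turn in turns:
        role = ROLE_MAP.get(turn["speaker"])
        text = turn["text"].strip()
        if role is None or not text:
            continue  # basic cleaning: skip unknown speakers and empty turns
        if dialog and dialog[-1]["role"] == role:
            # Merge consecutive turns by the same speaker so roles alternate.
            dialog[-1]["content"] += " " + text
        else:
            dialog.append({"role": role, "content": text})
    return {"dialog": dialog}


def write_jsonl(conversations: Iterable[list[dict]], path: str) -> int:
    """Write one JSON record per line and return how many were written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for turns in conversations:
            record = to_chat_record(turns)
            if record["dialog"]:  # skip conversations emptied by cleaning
                f.write(json.dumps(record, ensure_ascii=False) + "\n")
                count += 1
    return count
```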
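
The hyperparameters listed in step 3 map onto a JumpStart training job roughly as sketched below with the SageMaker Python SDK's `JumpStartEstimator`. This is not the exact setup used for this run: the model ID and the S3 training URI are placeholders the caller must supply, the `sagemaker_*` entries are left out because SageMaker fills them in itself, and `preprocessing_num_workers` is omitted on the assumption that `None` means the container default. Hyperparameter values are passed as strings.

```python
# Tunable values from the hyperparameter table; SageMaker passes them as strings.
HYPERPARAMETERS = {
    "add_input_output_demarcation_key": "True",
    "chat_dataset": "True",
    "chat_template": "Llama3.1",
    "enable_fsdp": "False",
    "epoch": "5",
    "instruction_tuned": "False",
    "int8_quantization": "True",  # load the base model in 8-bit to save GPU memory
    "learning_rate": "0.0001",
    # LoRA on the attention query/value projections only, rank 2 (alpha / r = 4).
    "lora_alpha": "8",
    "lora_dropout": "0.08",
    "lora_r": "2",
    "target_modules": "q_proj,v_proj",
    "max_input_length": "-1",
    "max_train_samples": "-1",
    "max_val_samples": "-1",
    "per_device_eval_batch_size": "1",
    "per_device_train_batch_size": "4",
    "seed": "10",
    "train_data_split_seed": "0",
    "validation_split_ratio": "0.2",  # no separate validation channel is supplied below
}


def launch_training(model_id: str, train_s3_uri: str, instance_type: str = "ml.g5.2xlarge"):
    """Start a JumpStart fine-tuning job with the hyperparameters above.

    model_id and train_s3_uri are placeholders; running this needs the
    `sagemaker` package and AWS credentials."""
    # Imported lazily so this module loads even without the SDK installed.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    estimator = JumpStartEstimator(
        model_id=model_id,
        environment={"accept_eula": "true"},  # Llama models require accepting Meta's EULA
        instance_type=instance_type,
    )
    estimator.set_hyperparameters(**HYPERPARAMETERS)
    # "training" is the input channel the training data is read from.
    estimator.fit({"training": train_s3_uri})
    return estimator
```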
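
Step 5 does not record how the endpoint was created. One possible route with the SageMaker Python SDK, sketched below under that assumption, is to re-attach the finished training job and deploy it. The `model_id` is a placeholder (the job name in the table does not spell out the full JumpStart model ID), the instance type is a hypothetical choice, and depending on the SDK version, gated models such as Llama may also need the EULA accepted at deploy time.

```python
from typing import Optional


def deploy_from_training_job(
    training_job_name: str,
    model_id: str,
    instance_type: str = "ml.g5.2xlarge",  # hypothetical; pick any instance the model supports
    endpoint_name: Optional[str] = None,
):
    """Attach to a finished JumpStart training job and deploy the fine-tuned
    model to a real-time endpoint; returns the SageMaker predictor."""
    # Imported lazily: running this needs the `sagemaker` package and AWS credentials.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    estimator = JumpStartEstimator.attach(training_job_name, model_id=model_id)
    return estimator.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        endpoint_name=endpoint_name,  # None lets SageMaker generate a name
    )
```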
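
A test script like the one in step 6 can be quite small. The sketch below is not the author's script: it formats a question with the Llama 3 chat template, calls the endpoint through the `boto3` `sagemaker-runtime` client, and applies the stop-sequence workaround described under "Unexpected Model Output". It assumes the serving container accepts a `parameters.stop` list and returns `generated_text` (as Hugging Face TGI-style containers do); the endpoint name, system prompt, generation settings, and stop strings are all illustrative, and if the model writes the student turn as plain text, that text should be added to the stop list.

```python
import json
from typing import Sequence

# Llama 3 chat-format markers used as stop strings (illustrative choice).
STOP_SEQUENCES = ["<|eot_id|>", "<|start_header_id|>user"]


def build_prompt(question: str, system_prompt: str) -> str:
    """Format a single-turn prompt in the Llama 3 chat format."""
    return (
        "<|begin_of_text|>"
        f"<|start_header_id|>system<|end_header_id|>\n\n{system_prompt}<|eot_id|>"
        f"<|start_header_id|>user<|end_header_id|>\n\n{question}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


def truncate_at_stop(text: str, stops: Sequence[str] = STOP_SEQUENCES) -> str:
    """Client-side guard: cut the reply at the earliest stop string, in case
    the server returned text past it (e.g. a self-generated student turn)."""
    cut = len(text)
    for stop in stops:
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut].strip()


def parse_generated_text(body: str) -> str:
    """Accept both [{"generated_text": ...}] and {"generated_text": ...} bodies."""
    data = json.loads(body)
    if isinstance(data, list):
        data = data[0]
    return data["generated_text"]


def ask(endpoint_name: str, question: str, system_prompt: str) -> str:
    """Send one question to the endpoint and return the cleaned reply."""
    import boto3  # imported lazily; needs AWS credentials at call time

    runtime = boto3.client("sagemaker-runtime")
    payload = {
        "inputs": build_prompt(question, system_prompt),
        "parameters": {"max_new_tokens": 256, "temperature": 0.6, "top_p": 0.9,
                       "stop": STOP_SEQUENCES},
    }
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    return truncate_at_stop(parse_generated_text(response["Body"].read().decode("utf-8")))
```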
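
For step 7, re-running the benchmark questions against the new endpoint can be as simple as the loop below. It is a hypothetical harness: `generate` is any function that takes a question and returns the model's reply (for example, a wrapper around the endpoint call), and the questions are whatever set the earlier benchmark used.

```python
import csv
from typing import Callable, Iterable, Optional


def run_benchmark(
    questions: Iterable[str],
    generate: Callable[[str], str],
    out_path: Optional[str] = None,
) -> list[dict]:
    """Ask every benchmark question, collect the replies, and optionally save
    them to CSV so they can be compared with the earlier models' answers."""
    rows = [{"question": q, "response": generate(q)} for q in questions]
    if out_path is not None:
        with open(out_path, "w", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, fieldnames=["question", "response"])
            writer.writeheader()
            writer.writerows(rows)
    return rows
```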

---

## Unexpected Model Output

- After fine-tuning, I noticed that the model was producing some unexpected output. I expected it to behave like a general chatbot, but in a friendlier, more teacher-like manner. While its responses did sound like a teacher, the model would often try to create an entire chain of conversation: it would generate the next message from the student's perspective and then proceed to answer it itself.
- This behaviour was caused by the way the dataset was structured. The dataset was essentially a list of back-and-forth conversations between a student and a teacher, so it makes sense that the model learned to continue the chain of conversation. But this is not what we need from the model.
- The next step is to change the structure of the dataset so that the model answers only the message it is given, while still being conversational and able to follow the nuances of a multi-turn conversation.
- The temporary fix was to add a stop sequence while generating responses and to tweak the system prompt. Again, this is a workaround rather than the right approach; the right approach is to change the dataset structure.

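
One possible way to restructure the dataset, sketched here as an assumption rather than the plan this post commits to, is to split every multi-turn conversation into several examples that each end with exactly one teacher reply. The intent is that the model sees earlier turns only as context and learns to stop after answering, instead of also writing the student's next message. The sketch assumes messages are already `{"role", "content"}` dicts with `user` for the student and `assistant` for the teacher; `max_history` is a hypothetical knob for trimming long contexts.

```python
from typing import Optional


def split_into_examples(dialog: list[dict], max_history: Optional[int] = None) -> list[dict]:
    """Turn one multi-turn conversation into several training examples, each
    ending with a single assistant (teacher) reply preceded by its context."""
    examples = []
    for i, message in enumerate(dialog):
        if message["role"] != "assistant":
            continue  # every example must end with a teacher reply
        history = dialog[:i]
        if max_history is not None and len(history) > max_history:
            history = history[len(history) - max_history:]  # keep the most recent turns
        # Chat formats expect the context to open with a user turn.
        while history and history[0]["role"] != "user":
            history = history[1:]
        if not history:
            continue  # a reply with no preceding question is not a useful example
        examples.append({"dialog": history + [message]})
    return examples
```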
| 102 | + |

| 103 | +--- |

| 104 | + |

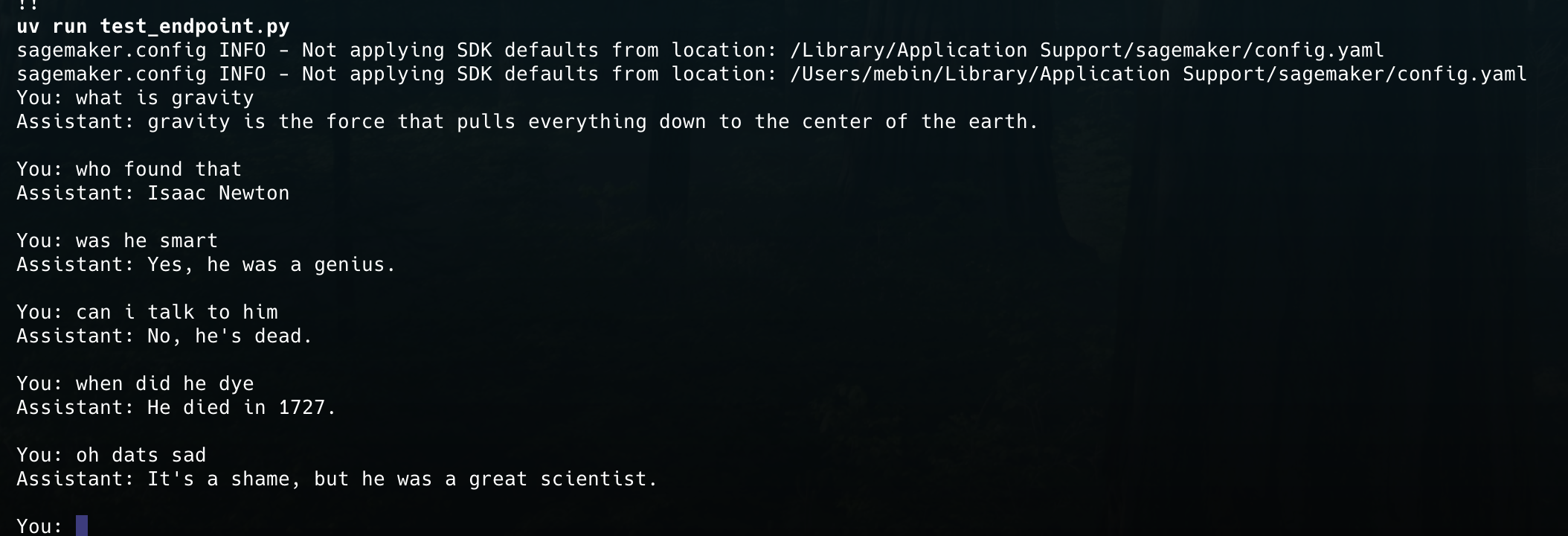

| 105 | +## Sample model output with stop condition |

| 106 | + |

| 107 | + |

| 108 | + |

| 109 | +--- |

| 110 | + |

## Key Learnings

- The dataset needs to be restructured so that the model replies only as the teacher, while remaining conversational and able to follow the nuances of a multi-turn conversation.

---

## Next Week’s Roadmap

- Restructure the dataset
- Re-train (fine-tune) the model on the restructured dataset
- Deploy the re-trained model, create an endpoint, and test it
- Evaluate the re-trained model and add the results to the benchmarks

---

## Acknowledgments

Thank you to my mentors, the Sugar Labs community, and fellow GSoC contributors for ongoing support.

---