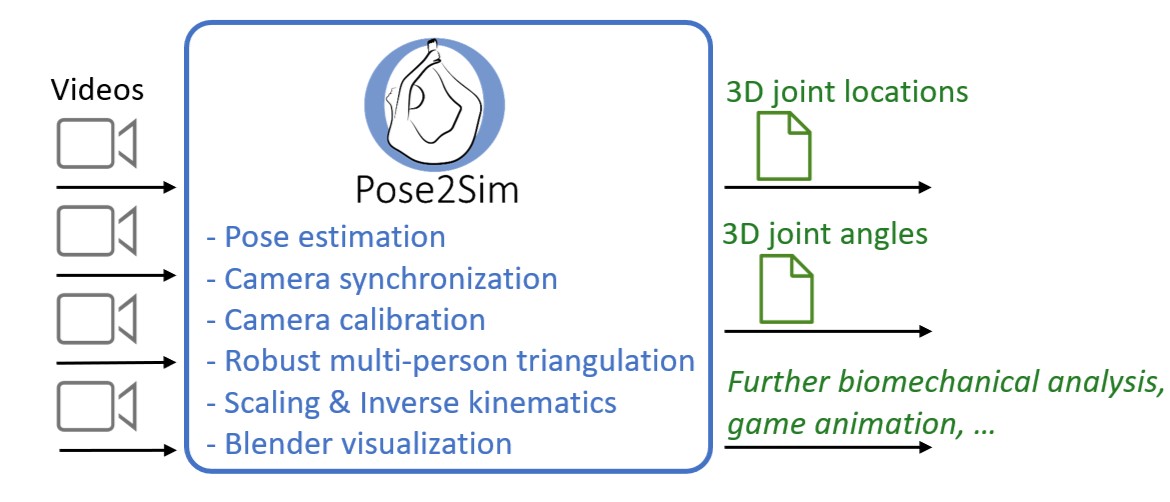

Welcome to Pose2Sim

Pose2Sim provides a workflow for 3D markerless kinematics, as an alternative to traditional marker-based motion capture methods.

Figure: other more or less challenging tasks and conditions.

- Multiple Cameras: Use phones, webcams, or GoPros; any combination works
- Research-Grade Accuracy: Validated accuracy with low-cost hardware
- Multi-Person Support: Track multiple people simultaneously
- Full 3D Kinematics: Complete OpenSim skeletal analysis with joint angles

⚠️ Important Note

Please set undistort_points and handle_LR_swap to false for now, since they currently lead to inaccuracies. This will be fixed soon.

🎬 Perfect For

- Sports Analysis: Field-based 3D motion capture
- Clinical Assessment: Gait analysis in a doctor's office
- Animation: Outdoor 3D capture with fully clothed subjects
- Research: Biomechanics studies with multiple participants

⚠️ Key Requirements

- Multiple cameras: Minimum 2 cameras (4+ recommended)
- Camera calibration: Cameras must be calibrated
- Synchronization: Cameras should be synchronized (or synced in post-processing)
- Single camera? Use Sports2D for 2D analysis

📦 Version History

- v0.10 (09/2024): OpenSim integration in pipeline
- v0.9 (07/2024): Integrated pose estimation
- v0.8 (04/2024): New synchronization tool
- v0.7 (03/2024): Multi-person analysis
- v0.6 (02/2024): Marker augmentation & Blender visualizer
- v0.5 (12/2023): Automatic batch processing

✅ What You'll Learn

This comprehensive guide will take you through:

- Complete installation and setup
- Running demos (single & multi-person)
- Setting up your own projects
- 2D pose estimation from videos
- Camera calibration techniques
- Multi-camera synchronization
- 3D triangulation and filtering
- OpenSim kinematic analysis
- Performance optimization

Complete Installation

Full installation with OpenSim support for complete 3D kinematic analysis.

1. Install Anaconda or Miniconda

Anaconda creates isolated environments for different projects, preventing package conflicts and ensuring reproducibility.

Download Miniconda (recommended: lightweight version)

Once installed, open an Anaconda prompt and create a virtual environment:

conda create -n Pose2Sim python=3.9 -y
conda activate Pose2Sim

2. Install OpenSim

OpenSim provides a biomechanical modeling toolkit for accurate skeletal analysis with physical constraints:

conda install -c opensim-org opensim -y

Alternative methods: see the OpenSim documentation.

3. Install Pose2Sim

Option A: Quick Install (Recommended)

pip install pose2sim

Option B: Install from Source

For developers who want the latest unreleased features:

git clone --depth 1 https://github.com/perfanalytics/pose2sim.git
cd pose2sim
pip install .

4. Optional: GPU Acceleration

GPU support dramatically speeds up pose estimation (3-5x faster) but requires about 6 GB of additional disk space.

Check GPU Compatibility:

nvidia-smi

Note the CUDA version: it is the latest version your driver supports.

Install PyTorch with CUDA:

Visit the PyTorch website and install a compatible version. For example:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124

Adjust cu124 based on your CUDA version.

Install ONNX Runtime GPU:

pip install onnxruntime-gpu

Verify Installation:

python -c "import torch; import onnxruntime as ort; print(torch.cuda.is_available(), ort.get_available_providers())"

It should print: True ['CUDAExecutionProvider', ...]

✅ Installation Complete!

Pose2Sim is now ready. Remember to activate your environment before use:

conda activate Pose2Sim

💾 Storage Requirements

- Minimal install: ~3 GB (without GPU, minimal models)
- Standard install: ~4.75 GB (without GPU)
- Full install with GPU: ~10.75 GB

You can save 1.3 GB by uninstalling TensorFlow if you skip marker augmentation: pip uninstall tensorflow

Single Person Demo

Test your installation with a demo of a person balancing on a beam, filmed with 4 calibrated cameras.

1. Locate Demo Folder

Find where Pose2Sim is installed:

pip show pose2sim

Copy the location path and navigate to the demo folder:

cd <path>\Pose2Sim\Demo_SinglePerson

2. Run Complete Workflow

Launch IPython and execute the full pipeline:

ipython

from Pose2Sim import Pose2Sim

Pose2Sim.calibration()
Pose2Sim.poseEstimation()
Pose2Sim.synchronization()
Pose2Sim.personAssociation()
Pose2Sim.triangulation()
Pose2Sim.filtering()
Pose2Sim.markerAugmentation()
Pose2Sim.kinematics()

💡 Quick Tip

Run all steps at once with:

Pose2Sim.runAll()

3. Understanding the Synchronization GUI

When the synchronization GUI appears, select a keypoint that shows clear vertical motion for best results.

The GUI helps you choose which keypoint to use for camera synchronization, based on its vertical speed.

📁 Output Files Created

- pose-3d/*.trc: 3D marker coordinates for each trial
- kinematics/*.mot: 3D joint angles over time
- kinematics/*.osim: Scaled OpenSim models
- logs.txt: Processing details and statistics

4. Visualize Results

Option A: OpenSim GUI

- Download the OpenSim GUI
- File → Open Model: load the scaled model from the kinematics folder
- File → Load Motion: load the .mot file from the kinematics folder
- File → Preview Experimental Data: load the .trc file to see the 3D markers

Option B: Blender (More Visual)

Install the Pose2Sim_Blender add-on for 3D visualization with camera overlay and animation capabilities.

⚙️ Configuration

Default parameters are in Config.toml, and all of them are documented. Feel free to experiment!

📝 Important Notes

- Marker Augmentation: Doesn't always improve results.
- Save space: If you skip marker augmentation, uninstall TensorFlow to save 1.3 GB: pip uninstall tensorflow

Multi-Person Demo

Discover how Pose2Sim tracks multiple people simultaneously: a hidden person appears when multi-person analysis is activated!

1. Navigate to Multi-Person Demo

cd <path>\Pose2Sim\Demo_MultiPerson

2. Verify Configuration

Ensure multi_person = true is set in your Config.toml file.

3. Run Multi-Person Workflow

ipython

from Pose2Sim import Pose2Sim

Pose2Sim.calibration()
Pose2Sim.poseEstimation()
Pose2Sim.synchronization()
Pose2Sim.personAssociation()
Pose2Sim.triangulation()
Pose2Sim.filtering()
Pose2Sim.markerAugmentation()
Pose2Sim.kinematics()

Or simply:

Pose2Sim.runAll()

📊 Multi-Person Output

Pose2Sim generates separate files for each detected person:

- pose-3d/: One .trc file per participant
- kinematics/: One scaled .osim model per participant
- kinematics/: One .mot angle file per participant

4. How Multi-Person Tracking Works

Pose2Sim uses dedicated algorithms to:

- Associate persons across views: Matches people across different camera angles using epipolar geometry
- Track over time: Maintains consistent IDs by analyzing movement speed and displacement
- Handle occlusions: Robust to temporary occlusions or people entering/leaving the frame

⚠️ Important Configuration

When using marker augmentation and kinematics with multiple people, ensure the order matches: the participant_height and participant_mass values in the [markerAugmentation] section must correspond to the person IDs, in the same order!

# Example in Config.toml
[markerAugmentation]
participant_height = [1.72, 1.65] # Person 0, Person 1
participant_mass = [70, 65] # Person 0, Person 1

💡 Visualization Tips

Use Blender visualization (as described in the Single Person Demo) to see both people simultaneously in 3D space with their respective skeletons!

✅ Multi-Person Success!

You've now mastered both single and multi-person analysis. Ready to process your own data!

Batch Processing Demo

Process multiple trials with different parameters efficiently, using a batch processing structure.

1. Navigate to Batch Demo

cd <path>\Pose2Sim\Demo_Batch

2. Understanding Batch Structure

Batch processing uses a hierarchical configuration system:

BatchSession/
├── Config.toml # Global parameters
├── Calibration/
├── Trial_1/
│   ├── Config.toml # Trial-specific overrides
│   └── videos/
├── Trial_2/
│   ├── Config.toml # Different parameters for this trial
│   └── videos/
└── Trial_3/
    ├── Config.toml
    └── videos/

🔧 How It Works

- Global Config: BatchSession/Config.toml sets defaults for all trials
- Trial Overrides: Each Trial/Config.toml can override specific parameters
- Inheritance: Uncommented keys in trial configs override global settings
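The override rule can be pictured as a recursive merge of two dictionaries. The sketch below is a hypothetical illustration, not Pose2Sim's own loader: `merge_configs` is our name, plain dicts stand in for the parsed Config.toml files, and we assume nested tables are merged key by key, so a trial only restates the keys it changes.

```python
from copy import deepcopy


def merge_configs(global_cfg, trial_cfg):
    """Return a new config in which keys set in trial_cfg override global_cfg.

    Nested tables (dicts) are merged recursively, so a trial config only
    needs to contain the keys it wants to change.
    """
    merged = deepcopy(global_cfg)
    for key, value in trial_cfg.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_configs(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Hypothetical contents of BatchSession/Config.toml and Trial_2/Config.toml
global_cfg = {
    "project": {"frame_range": [], "multi_person": False},
    "pose": {"mode": "balanced", "det_frequency": 1},
}
trial_2_cfg = {"pose": {"mode": "lightweight"}}

trial_2_effective = merge_configs(global_cfg, trial_2_cfg)
print(trial_2_effective["pose"])  # {'mode': 'lightweight', 'det_frequency': 1}
```

Copying instead of mutating keeps the global config intact, so the same defaults can be reused for every trial in the session.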

3. Run Batch Processing

ipython

from Pose2Sim import Pose2Sim

# Run from the BatchSession folder to process all trials
Pose2Sim.runAll()

# Or run the same command from a specific Trial folder to process only that trial

4. Experiment with Parameters

Try modifying Trial_2/Config.toml:

Example: Different Time Range

Uncomment and set in Trial_2/Config.toml:

[project]
frame_range = [10, 99] # Process only frames 10-99

Example: Lightweight Mode

Uncomment and set in Trial_2/Config.toml:

[pose]
mode = 'lightweight' # Faster pose estimation

📊 Batch Processing Benefits

- Consistency: Same calibration and global settings across trials
- Flexibility: Trial-specific customization when needed
- Efficiency: Process entire sessions with one command
- Experimentation: Compare different parameter sets easily

✅ Batch Processing Mastered!

You now understand how to efficiently process multiple trials with varying parameters. This structure scales from research studies to production pipelines!

Setting Up Your Project

Organize your own data for analysis using Pose2Sim's structured project format.

1. Find Your Pose2Sim Installation

pip show pose2sim

Note the location path and navigate to it:

cd <path>\Pose2Sim

2. Copy Template Folder

Copy the appropriate demo folder as your project template:

- Demo_SinglePerson: For single person analysis
- Demo_MultiPerson: For multiple people
- Demo_Batch: For batch processing multiple trials

Copy it to your preferred location and rename it as desired.

3. Project Structure

Your project should follow this structure:

MyProject/
├── Config.toml # Main configuration
├── Calibration/
│   ├── intrinsics/
│   │   ├── cam01/ # Videos/images for intrinsic calibration
│   │   ├── cam02/
│   │   └── ...
│   └── extrinsics/
│       ├── cam01/ # Videos/images for extrinsic calibration
│       ├── cam02/
│       └── ...
├── videos/
│   ├── cam01.mp4 # Your capture videos
│   ├── cam02.mp4
│   └── ...
├── pose/ # Created automatically - 2D poses
├── pose-3d/ # Created automatically - 3D coordinates
└── kinematics/ # Created automatically - OpenSim results

4. Edit Configuration

Open Config.toml and customize the key parameters:

Project Settings:

[project]
project_dir = 'path/to/MyProject' # Absolute path to your project
frame_range = [] # Empty for all frames, or [start, end]
multi_person = false # true for multiple people

Participant Info:

[markerAugmentation]
participant_height = [1.72] # Height in meters
participant_mass = [70] # Mass in kg

5. Add Your Videos

- Place all camera videos in the videos/ folder
- Name them clearly (e.g., cam01.mp4, cam02.mp4, etc.)
- Ensure all videos capture the same action
- Videos don't need to be perfectly synchronized (they can be synced in post-processing)

⚠️ Important Tips

- Camera placement: Position cameras to minimize occlusions
- Coverage: Ensure the capture volume is covered by at least 2 cameras at all times
- Lighting: Consistent lighting helps pose estimation
- Background: Uncluttered backgrounds improve accuracy

✅ Project Ready!

Your project is now set up. Continue to the next steps to estimate 2D poses, calibrate your cameras, and process your data!

2D Pose Estimation

Detect 2D keypoints from your videos using RTMPose or other pose estimation models.

Run Pose Estimation

Navigate to your project folder and run:

ipython

from Pose2Sim import Pose2Sim
Pose2Sim.poseEstimation()

This will process all videos in your videos/ folder and save 2D keypoints to pose/.

Pose Models Available

Configure in Config.toml under [pose]:

| Model | Best For | Speed |
|---|---|---|
| body_with_feet | General body tracking (default) | Balanced |
| whole_body | Body + hands + face | Slower |
| whole_body_wrist | Detailed wrist motion | Slower |

Performance Modes

Choose a speed vs. accuracy trade-off:

[pose]
mode = 'balanced' # Options: 'lightweight', 'balanced', 'performance'

- lightweight: Fastest, slightly less accurate
- balanced: Good speed/accuracy balance (default)
- performance: Most accurate, slower

Advanced: Custom Models

Use any RTMLib-compatible model with custom dictionary syntax:

mode = """{'det_class':'YOLOX',
 'det_model':'https://download.openmmlab.com/mmpose/.../yolox_m.zip',
 'det_input_size':[640, 640],
 'pose_class':'RTMPose',
 'pose_model':'https://download.openmmlab.com/mmpose/.../rtmpose-m.zip',
 'pose_input_size':[192,256]}"""
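The `mode` value here is a TOML string whose content is a Python-style dictionary literal. As an illustration only (we are not asserting this is how Pose2Sim parses it), Python's `ast.literal_eval` can safely turn such a string into a dict, while preset names pass through unchanged; `parse_mode` is a hypothetical helper.

```python
import ast

# The custom `mode` string, with the model URLs left elided as in the example above
mode = """{'det_class':'YOLOX',
 'det_model':'https://download.openmmlab.com/mmpose/.../yolox_m.zip',
 'det_input_size':[640, 640],
 'pose_class':'RTMPose',
 'pose_model':'https://download.openmmlab.com/mmpose/.../rtmpose-m.zip',
 'pose_input_size':[192,256]}"""


def parse_mode(value):
    """Return a preset name ('lightweight', ...) or a dict describing custom models."""
    text = value.strip()
    if text.startswith("{"):
        # literal_eval only evaluates literals (dicts, lists, strings, numbers),
        # so it cannot execute arbitrary code hidden in the config file
        return ast.literal_eval(text)
    return text


custom = parse_mode(mode)
print(custom["det_class"], custom["pose_input_size"])  # YOLOX [192, 256]
print(parse_mode("balanced"))  # balanced
```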

💡 Other Pose Solutions

- DeepLabCut: For custom-trained models (animals, specific points)
- OpenPose: Legacy support (BODY_25B recommended if using it)
- AlphaPose: Alternative to OpenPose
- BlazePose: Fast inference, single person only

Detection Frequency Optimization

Speed up pose estimation by detecting people less frequently:

[pose]
det_frequency = 4 # Detect people every 4 frames, track in between

Person detection is slow, while tracking between frames is fast, so this can provide up to a 5x speedup. However, it may reduce accuracy.
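One way to picture `det_frequency`: the detector runs on every N-th frame and a cheaper tracker bridges the frames in between. The toy cost model below uses made-up per-frame costs (not measurements) to show why the speedup depends on how much slower detection is than the rest of the pipeline; both function names are hypothetical.

```python
def detection_frames(n_frames, det_frequency):
    """Indices of the frames on which the (slow) person detector runs."""
    return list(range(0, n_frames, det_frequency))


def estimated_speedup(n_frames, det_frequency, det_cost, pose_cost):
    """Runtime ratio: detection on every frame vs. every det_frequency frames.

    det_cost and pose_cost are hypothetical per-frame costs in any time unit;
    pose estimation (plus cheap tracking) still runs on every frame.
    """
    n_det = len(detection_frames(n_frames, det_frequency))
    baseline = n_frames * (det_cost + pose_cost)
    reduced = n_det * det_cost + n_frames * pose_cost
    return baseline / reduced


print(detection_frames(10, 4))  # [0, 4, 8]
# Made-up costs: detection 4x slower than pose estimation
print(estimated_speedup(100, 4, det_cost=4.0, pose_cost=1.0))  # 2.5
```

Under this model the ratio can never exceed (det_cost + pose_cost) / pose_cost, however large det_frequency gets, which is why the real gain depends on the detector and hardware.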

📊 Output Format

2D poses are saved as JSON files in the pose/ folder:

- One JSON file per frame per camera
- Contains keypoint coordinates and confidence scores
- OpenPose-compatible format
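In the OpenPose JSON layout, each file holds a `people` list in which every person carries a flat `pose_keypoints_2d` array of `x, y, confidence` triplets. A minimal reader under that assumption (the sample content is invented and cut down to two keypoints; `read_keypoints` is a hypothetical helper):

```python
import json

# Invented, truncated example of one OpenPose-style JSON file (two keypoints)
sample = """{"version": 1.3,
 "people": [{"person_id": [-1],
             "pose_keypoints_2d": [512.4, 300.1, 0.92, 520.0, 410.7, 0.35]}]}"""


def read_keypoints(json_text):
    """Return, for each detected person, a list of (x, y, confidence) tuples."""
    data = json.loads(json_text)
    people = []
    for person in data.get("people", []):
        flat = person["pose_keypoints_2d"]
        # Regroup the flat list into consecutive (x, y, c) triplets
        people.append([tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)])
    return people


persons = read_keypoints(sample)
print(persons[0][0])  # (512.4, 300.1, 0.92)
```

Low-confidence triplets are typically what the likelihood thresholds in the later steps filter out.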

✅ Pose Estimation Complete!

Your 2D keypoints are ready. Next step: calibrate your cameras to enable 3D triangulation!

Camera Calibration

Calibrate your cameras to determine their intrinsic properties (lens characteristics) and extrinsic parameters (position and orientation in space).

Figures: intrinsic calibration with checkerboard; extrinsic calibration.

Run Calibration

from Pose2Sim import Pose2Sim
Pose2Sim.calibration()

Method 1: Convert Existing Calibration

If you already have a calibration file from another system:

Set in Config.toml:

[calibration]
calibration_type = 'convert'
convert_from = 'qualisys' # Options: qualisys, optitrack, vicon, opencap,
                          # easymocap, biocv, caliscope, anipose, freemocap

| System | File Format | Notes |
|---|---|---|
| Qualisys | .qca.txt | Export from QTM |
| Vicon | .xcp | Direct copy |
| OpenCap | .pickle | Multiple files |
| Caliscope | .toml | Native format |

Method 2: Calculate from Scratch

Calculate the calibration using a checkerboard or scene measurements.

Set in Config.toml:

[calibration]
calibration_type = 'calculate'

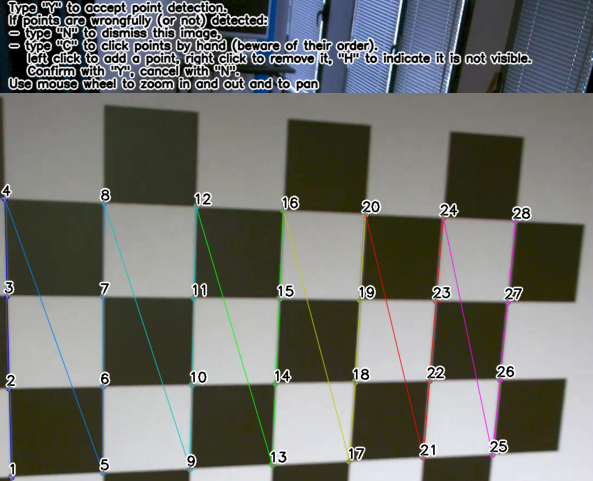

Step 1: Intrinsic Calibration

Intrinsic parameters are camera-specific properties (focal length, distortion); they usually need to be calculated only once per camera.

- Create a folder for each camera in Calibration/intrinsics/
- Film a checkerboard with each camera (either the board or the camera can move)
- Configure the checkerboard parameters in Config.toml:

[calibration.intrinsics]
overwrite_intrinsics = true
show_detection_intrinsics = true
intrinsics_corners_nb = [9, 6] # Internal corners (one fewer than the number of squares per side)
intrinsics_square_size = 60 # Square size in mm
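The two checkerboard parameters fully define the board geometry used during calibration: `intrinsics_corners_nb` gives the grid of internal corners and `intrinsics_square_size` their spacing. The sketch below builds the corresponding 3D corner coordinates on the board plane (Z = 0), in the float32 layout that OpenCV-style routines such as `cv2.calibrateCamera` take as object points. Reading the pair as [rows, cols] follows this guide's parameter reference table; the helper name is hypothetical and this is not Pose2Sim's code.

```python
import numpy as np


def board_object_points(corners_nb, square_size_mm):
    """3D coordinates (mm) of a flat checkerboard's internal corners.

    corners_nb is read as [n_rows, n_cols]; Z = 0 for every point.
    """
    n_rows, n_cols = corners_nb
    points = np.zeros((n_rows * n_cols, 3), dtype=np.float32)
    # Every (col, row) index pair, ordered row by row, scaled to millimetres
    points[:, :2] = np.mgrid[0:n_cols, 0:n_rows].T.reshape(-1, 2) * square_size_mm
    return points


pts = board_object_points([9, 6], 60)
print(pts.shape)  # (54, 3)
```

The far corner lies at (300, 480, 0) mm, i.e. (6 - 1) x 60 by (9 - 1) x 60, which is a quick sanity check on the square size you enter.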

📋 Checkerboard Requirements

- Flat: Board must be completely flat
- Asymmetric: Rows ≠ Columns (or rows odd if columns even)
- Border: Wide white border around the pattern
- Focus: Sharp, in-focus images
- Coverage: Film from multiple angles, covering most of the frame
- No glare: Avoid reflections

Generate a checkerboard at calib.io.

✅ Target Error

Intrinsic calibration error should be below 0.5 pixels.

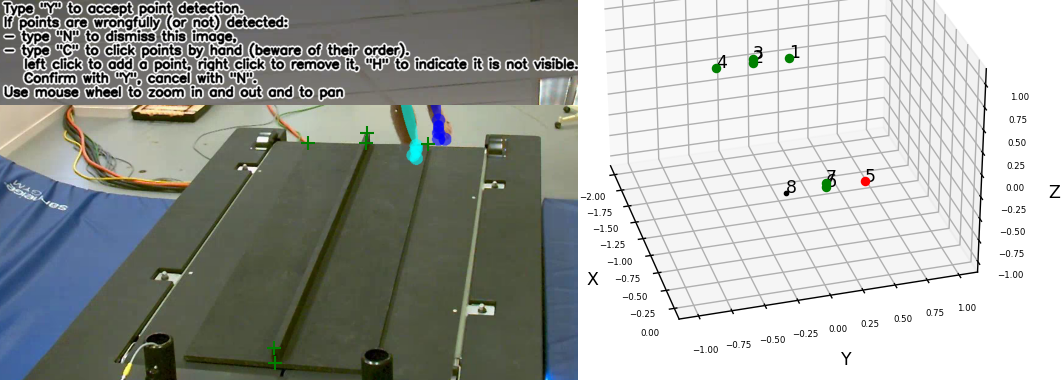

Step 2: Extrinsic Calibration

Extrinsic parameters are camera positions and orientations in space; they must be recalculated whenever the cameras move.

- Create a folder for each camera in Calibration/extrinsics/
- Film either:
  - Checkerboard: Place it on the ground, visible to all cameras
  - Scene measurements: Measure the 3D coordinates of 10+ points in the scene
- Configure in Config.toml:

[calibration.extrinsics]
extrinsics_method = 'board' # or 'scene'
show_detection_extrinsics = true
extrinsics_corners_nb = [10, 7]
extrinsics_square_size = 60

# If using 'scene' method:
# object_coords_3d = [[0,0,0], [1,0,0], ...] # Measured 3D coordinates

💡 Scene Measurement Tips

- Use tiles, wall lines, boxes, or treadmill dimensions
- Spread points throughout the capture volume
- More points = better accuracy
- You can temporarily add objects for calibration, then remove them

✅ Target Error

Extrinsic calibration error should be below 1 cm (acceptable up to 2.5 cm, depending on the application).

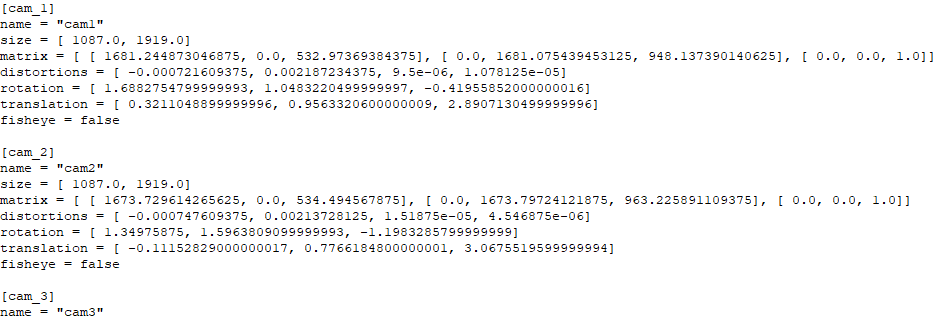

Output: Calibration File

Calibration creates Calib.toml in your Calibration folder, containing:

- Camera matrix (intrinsics) for each camera
- Distortion coefficients
- Rotation and translation (extrinsics)
- Calibration errors
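Triangulation ultimately needs one 3x4 projection matrix per camera, P = K [R | t], built from the camera matrix K and the extrinsic rotation and translation. Assuming the rotation is stored as a Rodrigues (axis-angle) vector, as is usual with OpenCV, here is a numpy-only sketch; the function names and the example camera are hypothetical, and lens distortion is ignored.

```python
import numpy as np


def rodrigues(rvec):
    """Convert an axis-angle rotation vector to a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    kx, ky, kz = rvec / theta
    skew = np.array([[0.0, -kz, ky],
                     [kz, 0.0, -kx],
                     [-ky, kx, 0.0]])
    return np.eye(3) + np.sin(theta) * skew + (1 - np.cos(theta)) * skew @ skew


def projection_matrix(K, rvec, tvec):
    """P = K [R | t]: maps homogeneous world points to homogeneous pixels."""
    R = rodrigues(rvec)
    Rt = np.hstack([R, np.asarray(tvec, dtype=float).reshape(3, 1)])
    return np.asarray(K, dtype=float) @ Rt


def project(P, X):
    """Pixel coordinates (u, v) of the 3D point X (lens distortion ignored)."""
    x = P @ np.append(np.asarray(X, dtype=float), 1.0)
    return x[:2] / x[2]


# Hypothetical camera: f = 1000 px, principal point (640, 360), 5 units from the origin
K = [[1000, 0, 640], [0, 1000, 360], [0, 0, 1]]
P = projection_matrix(K, rvec=[0, 0, 0], tvec=[0, 0, 5])
print(project(P, [0.5, 0, 0]))  # [740. 360.]
```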

✅ Calibration Complete!

Your cameras are now calibrated and ready for 3D triangulation!

Camera Synchronization

Synchronize your cameras by finding the optimal time offset, based on the correlation of keypoint movements.

⚠️ Skip This Step If...

Your cameras are natively synchronized (hardware sync, genlock, or timecode).

Run Synchronization

from Pose2Sim import Pose2Sim
Pose2Sim.synchronization()

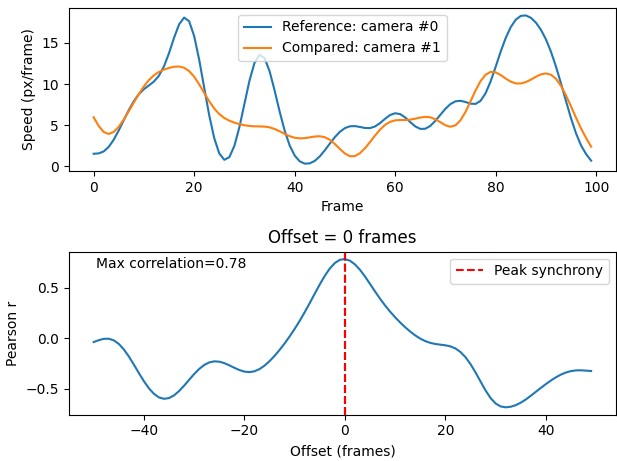

How It Works

The algorithm:

- Computes the vertical speed of the chosen keypoint(s) in each camera
- Finds the time offset that maximizes the correlation between cameras
- Applies the offset to align all cameras to the reference camera
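The core idea can be sketched in a few lines of numpy: differentiate the vertical coordinate of a keypoint to get its vertical speed in each camera, then search for the frame lag that maximizes the correlation between the two speed curves. This is a simplified illustration (one keypoint, integer frame offsets, Pearson correlation on the overlapping part), not Pose2Sim's exact implementation; the function names are hypothetical.

```python
import numpy as np


def vertical_speed(y):
    """Frame-to-frame vertical speed of one keypoint (pixels per frame)."""
    return np.diff(np.asarray(y, dtype=float))


def best_offset(ref_speed, cam_speed, max_lag):
    """Integer lag (frames) that maximizes the correlation of two speed curves.

    A positive lag means the movement appears `lag` frames later in cam_speed
    than in ref_speed, so that camera's first `lag` frames would be dropped.
    """
    best_lag, best_corr = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = ref_speed[:len(ref_speed) - lag], cam_speed[lag:]
        else:
            a, b = ref_speed[-lag:], cam_speed[:len(cam_speed) + lag]
        n = min(len(a), len(b))
        if n < 3:
            continue
        corr = np.corrcoef(a[:n], b[:n])[0, 1]  # Pearson correlation on the overlap
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr


# Synthetic jump: peak at frame 80 for the reference camera, 87 for the other
t = np.arange(200)
y_ref = 50 * np.exp(-((t - 80) / 6.0) ** 2)
y_cam = 50 * np.exp(-((t - 87) / 6.0) ** 2)
lag, corr = best_offset(vertical_speed(y_ref), vertical_speed(y_cam), max_lag=20)
print(lag)  # 7
```

Correlating speeds rather than raw positions makes the match insensitive to each camera's viewpoint-dependent pixel offset, which is why a clear vertical movement works best.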

Synchronization GUI

Enable the interactive GUI for better control:

[synchronization]
synchronization_gui = true

The GUI allows you to:

- Select which keypoint to use (e.g., RWrist, LAnkle)
- Choose the reference person (in multi-person scenes)
- Adjust the time window for analysis
- Visualize correlation plots

Configuration Options

[synchronization]
reset_sync = false # Start from scratch or refine existing
frames_range = [] # Limit analysis to specific frames
display_corr = true # Show correlation plots
keypoints_to_consider = ['RWrist', 'LWrist'] # Keypoints for sync
approx_time_maxspeed = 'auto' # Or specify time of max speed

📊 Best Results When...

- Person performs a clear vertical movement (jump, wave, etc.)
- Capture lasts 5+ seconds (enough data)

Alternative Sync Methods (Not included in Pose2Sim)

If keypoint-based sync doesn't work well:

Manual Sync Markers:

- Flashlight: Flash visible to all cameras
- Clap: Sync with audio (if available)
- Clear event: Ball drop, jump, etc.

Hardware Solutions:

- GoPro timecode: Built-in sync feature
- GPS sync: For outdoor captures (GoPro)
- GoPro app: Sync via app (slightly less reliable)

✅ Cameras Synchronized!

Your videos are now time-aligned and ready for multi-view triangulation!

Person Association

Associate the same person across different camera views and track them over time.

⚠️ Skip This Step If...

Only one person is visible in your capture.

Run Person Association

from Pose2Sim import Pose2Sim
Pose2Sim.personAssociation()

How It Works

Single Person Mode (multi_person = false):

Automatically selects the person with the smallest reprojection error (best 3D reconstruction).

Multi-Person Mode (multi_person = true):

- Cross-view association: Uses epipolar geometry to match people across camera views
- Temporal tracking: Tracks people across frames using displacement speed
- Consistent IDs: Maintains identity even with brief occlusions

Association Method

The algorithm finds the best person associations by:

- Triangulating all possible person combinations
- Reprojecting 3D points back to the image planes
- Computing epipolar line distances
- Choosing the combination with the minimum geometric error

Configuration Parameters

[personAssociation]
likelihood_threshold_association = 0.3
reproj_error_threshold_association = 20 # pixels
min_cameras_for_triangulation = 2

💡 Parameter Tuning

- Increase thresholds if people are frequently lost
- Decrease thresholds if wrong person associations occur
- Monitor console output for association success rates

Handling Occlusions

Pose2Sim is robust to:

- Temporary loss of a person in some camera views
- People entering/leaving the capture volume
- Brief full occlusions (person behind an object)

If the reprojection error is too high, cameras are progressively removed until the threshold is met.

📊 Check Results

Review the console output showing:

- Number of people detected per frame
- Association success rate
- Average reprojection errors
- Cameras excluded per frame

If the results aren't satisfactory, adjust the constraints in Config.toml.

✅ Person Association Complete!

People are now correctly identified across views and time. Ready for 3D triangulation!

3D Triangulation

Convert 2D keypoints from multiple views into robust 3D coordinates using weighted triangulation.

Run Triangulation

from Pose2Sim import Pose2Sim
Pose2Sim.triangulation()

How It Works

Robust triangulation process:

- Weighted triangulation: Each 2D point is weighted by its detection confidence
- Likelihood filtering: Only points above the confidence threshold are used
- Reprojection check: 3D point quality is verified by reprojecting it onto the cameras
- Error-based refinement:
  - If the error is high (and handle_LR_swap is enabled): swap left/right sides and retry
  - If it is still high: progressively remove cameras until the error is acceptable
  - If too few cameras remain: skip the frame and interpolate it later
- Interpolation: Fill missing values with cubic spline interpolation (default, can be changed)
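The first steps of this process can be sketched with a confidence-weighted Direct Linear Transform (DLT). This numpy sketch is our illustration under stated assumptions, not Pose2Sim's implementation: each camera contributes two DLT equations scaled by its 2D confidence, views below the likelihood threshold are dropped, and while the mean reprojection error exceeds the threshold, the worst camera is removed and the point is re-triangulated. Left/right swapping and interpolation are left out, and all function names and the example cameras are hypothetical.

```python
import numpy as np


def triangulate_weighted(Ps, pts2d, weights):
    """Weighted DLT: least-squares 3D point from two or more views."""
    rows = []
    for P, (u, v), w in zip(Ps, pts2d, weights):
        rows.append(w * (u * P[2] - P[0]))
        rows.append(w * (v * P[2] - P[1]))
    _, _, vt = np.linalg.svd(np.array(rows))
    X = vt[-1]  # right singular vector of the smallest singular value
    return X[:3] / X[3]


def reproj_errors(Ps, pts2d, X):
    """Pixel distance between each observed point and the reprojected 3D point."""
    errs = []
    for P, uv in zip(Ps, pts2d):
        x = P @ np.append(X, 1.0)
        errs.append(np.linalg.norm(x[:2] / x[2] - np.asarray(uv)))
    return np.array(errs)


def robust_triangulate(Ps, pts2d, conf, likelihood_threshold=0.3,
                       reproj_threshold=15.0, min_cameras=2):
    """Return (X, kept camera indices), or (None, []) if the frame is skipped."""
    cams = [i for i, c in enumerate(conf) if c >= likelihood_threshold]
    while len(cams) >= min_cameras:
        sub_P = [Ps[i] for i in cams]
        sub_uv = [pts2d[i] for i in cams]
        X = triangulate_weighted(sub_P, sub_uv, [conf[i] for i in cams])
        errs = reproj_errors(sub_P, sub_uv, X)
        if errs.mean() <= reproj_threshold:
            return X, cams
        cams.pop(int(np.argmax(errs)))  # drop the most inconsistent camera, retry
    return None, []


# Usage: four hypothetical cameras on an arc, 5 units from the origin
K = np.array([[1000.0, 0, 640], [0, 1000, 360], [0, 0, 1]])


def camera(angle_deg):
    a = np.radians(angle_deg)
    R = np.array([[np.cos(a), 0, np.sin(a)], [0, 1, 0], [-np.sin(a), 0, np.cos(a)]])
    return K @ np.hstack([R, [[0.0], [0.0], [5.0]]])


Ps = [camera(a) for a in (0, 45, -45, 90)]
X_true = np.array([0.1, -0.2, 0.3])
obs = []
for P in Ps:
    x = P @ np.append(X_true, 1.0)
    obs.append(x[:2] / x[2])
obs[3] = obs[3] + np.array([120.0, 0.0])  # simulate a misdetection in camera 3
X, kept = robust_triangulate(Ps, obs, [0.9, 0.8, 0.9, 0.7])
print(kept)
```

Removing the single worst camera per iteration, rather than all cameras above a threshold, keeps as many views as possible when only one detection is off.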

Configuration Parameters

[triangulation]
reproj_error_threshold_triangulation = 15 # pixels
likelihood_threshold_triangulation = 0.3
min_cameras_for_triangulation = 2
interpolation_kind = 'cubic' # cubic, linear, slinear, quadratic
interp_if_gap_smaller_than = 10 # frames
show_interp_indices = true # Show which frames were interpolated
handle_LR_swap = false # KEEP FALSE - Correct left/right swaps (buggy)
undistort_points = false # KEEP FALSE - Undistort before triangulation (buggy)
make_c3d = false # Also save as .c3d format
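The gap rule behind `interp_if_gap_smaller_than` can be sketched as follows: find runs of missing values (NaN) and fill only those shorter than the limit. For brevity this numpy-only sketch interpolates linearly with `np.interp` instead of the default cubic spline, and it leaves gaps at the very start or end of a trajectory empty; both are our simplifications, and `fill_short_gaps` is a hypothetical name.

```python
import numpy as np


def fill_short_gaps(values, max_gap):
    """Linearly interpolate NaN runs shorter than max_gap frames.

    Runs touching the start or end of the signal stay NaN, since there is
    no valid sample on both sides to interpolate between.
    """
    y = np.asarray(values, dtype=float).copy()
    missing = np.isnan(y)
    i = 0
    while i < len(y):
        if not missing[i]:
            i += 1
            continue
        start = i
        while i < len(y) and missing[i]:
            i += 1
        end = i  # first valid index after the gap (or len(y))
        if start > 0 and end < len(y) and end - start < max_gap:
            y[start:end] = np.interp(np.arange(start, end),
                                     [start - 1, end], [y[start - 1], y[end]])
        # longer gaps, or gaps at the edges, are left as NaN
    return y


r = fill_short_gaps([0, np.nan, np.nan, 3, np.nan, np.nan, np.nan, np.nan, 8], max_gap=3)
print(r)  # the 2-frame gap is filled, the 4-frame gap stays NaN
```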

📊 Output Information

Triangulation provides detailed statistics:

- Mean reprojection error per keypoint (mm and px)
- Cameras excluded on average per keypoint
- Frames interpolated for each keypoint
- Identification of the least reliable cameras

Visualize Results

Check your .trc file in OpenSim:

# In OpenSim GUI:
File → Preview Experimental Data → Open .trc file

Look for smooth, realistic trajectories. Jumps or jitter indicate issues.

Troubleshooting

| Issue | Solution |
|---|---|
| High reprojection errors | Increase reproj_error_threshold_triangulation |
| Missing keypoints | Decrease likelihood_threshold_triangulation |
| Jittery motion | Increase min_cameras_for_triangulation |
| Left/right swaps | Keep handle_LR_swap = false (currently buggy) |

+

⚠️ Important Notes

- Undistortion: Currently causes inaccuracies; keep undistort_points = false
- LR Swap: Currently causes issues; keep handle_LR_swap = false
- Interpolation limit: Large gaps (10 frames or more) won't be interpolated by default
- Quality check: Always visualize the .trc file in OpenSim before proceeding

✅ Triangulation Complete!

Your 3D coordinates are ready! Next step: filter the data for smoother motion.

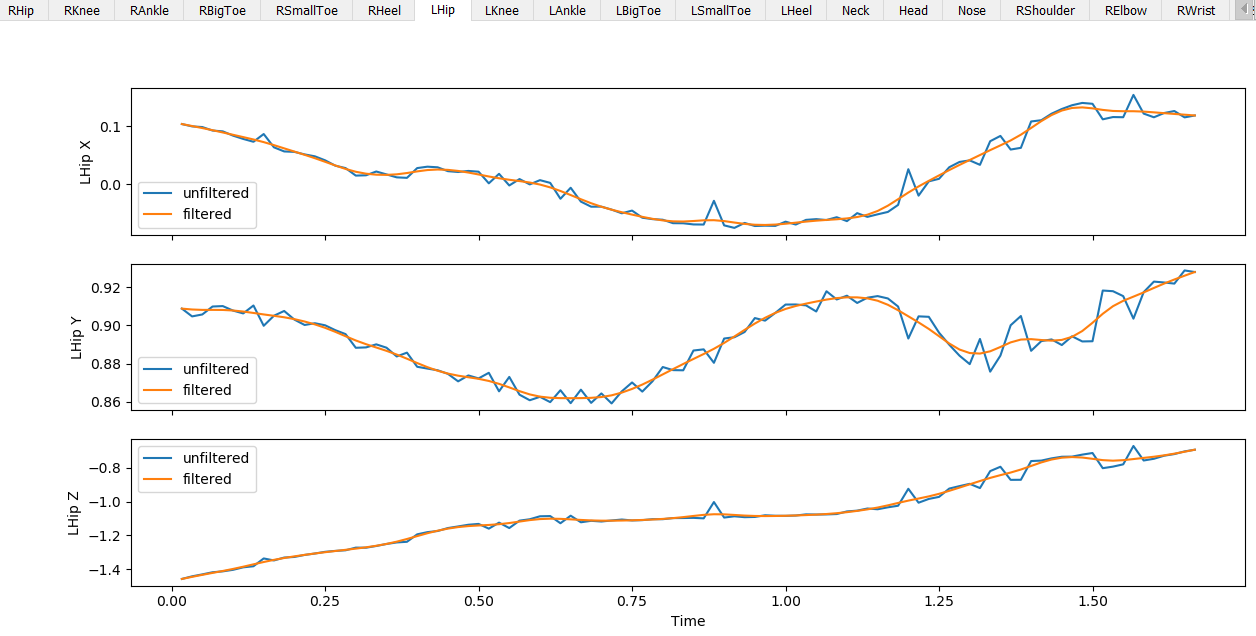

3D Filtering

Smooth your 3D coordinates to remove noise while preserving natural motion characteristics.

Run Filtering

from Pose2Sim import Pose2Sim
Pose2Sim.filtering()

Filtered .trc files are saved with a _filt suffix.

Available Filter Types

[filtering]
type = 'butterworth' # Choose filter type

| Filter | Best For | Key Parameters |
|---|---|---|
| butterworth | General motion (default) | order, cut_off_frequency |
| kalman | Noisy data with gaps | trust_ratio, smooth |
| butterworth_on_speed | Preserve sharp movements | order, cut_off_frequency |
| gaussian | Simple smoothing | sigma_kernel |
| loess | Local polynomial smoothing | nb_values_used |
| median | Remove outliers | kernel_size |

Butterworth Filter (Recommended)

Most commonly used for motion capture data:

[filtering]
type = 'butterworth'
butterworth_order = 4 # Filter order (2-4 typical)
butterworth_cut_off_frequency = 6 # Hz, adjust based on motion speed

💡 Cutoff Frequency Guide

- 3-6 Hz: Walking, slow movements
- 6-10 Hz: Running, moderate speed
- 10-15 Hz: Fast movements, sports
- 15+ Hz: Very fast, impulsive motions
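For intuition, here is a dependency-free sketch of a zero-phase Butterworth low-pass filter. It runs a 2nd-order Butterworth section (coefficients from the bilinear transform) forward and then backward, which cancels the phase lag and gives an effectively 4th-order response; this is what the `butter` plus `filtfilt` combination from `scipy.signal` is commonly used for. Whether Pose2Sim maps `butterworth_order = 4` exactly this way is an assumption, and the function names are hypothetical.

```python
import math

import numpy as np


def butter2_lowpass(cutoff_hz, fs_hz):
    """2nd-order Butterworth low-pass coefficients via the bilinear transform."""
    k = math.tan(math.pi * cutoff_hz / fs_hz)  # pre-warped cutoff
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b = (b0, 2.0 * b0, b0)
    a = (2.0 * (k * k - 1.0) * norm, (1.0 - math.sqrt(2.0) * k + k * k) * norm)
    return b, a


def _biquad(x, b, a):
    """Direct-form I filter; past samples start at x[0] so constants pass unchanged."""
    y = np.empty(len(x), dtype=float)
    x1 = x2 = y1 = y2 = float(x[0])
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y[n] = yn
    return y


def butterworth_zero_phase(x, cutoff_hz, fs_hz):
    """Forward-backward 2nd-order pass: no phase lag, effective order 4."""
    b, a = butter2_lowpass(cutoff_hz, fs_hz)
    x = np.asarray(x, dtype=float)
    forward = _biquad(x, b, a)
    return _biquad(forward[::-1], b, a)[::-1]


fs = 60.0  # hypothetical camera frame rate (Hz)
t = np.arange(600) / fs
noisy = np.sin(2 * np.pi * 1.0 * t) + 0.2 * np.sin(2 * np.pi * 20.0 * t)
smooth = butterworth_zero_phase(noisy, cutoff_hz=6.0, fs_hz=fs)
```

The cutoff must stay below half the frame rate (the Nyquist frequency); with 60 fps video, the 6 Hz default keeps the 1 Hz motion and suppresses the 20 Hz jitter in this synthetic signal.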

Kalman Filter

Well suited to noisy data with missing values:

[filtering]
type = 'kalman'
kalman_trust_ratio = 500 # Measurement trust vs process model
kalman_smooth = true # Apply smoothing pass

A higher trust_ratio trusts the measurements more; a lower one trusts the motion model more.

Display Results

Enable visualization to compare before/after filtering:

[filtering]
display_figures = true # Show plots comparing raw vs filtered

The plots show each keypoint's trajectory in X, Y, and Z coordinates.

Evaluate Filter Quality

- Visual inspection: Check plots for smooth but realistic motion
- OpenSim preview: Load the filtered .trc in OpenSim (File → Preview Experimental Data)
- Motion validation: Ensure the filter doesn't remove real motion features

⚠️ Filtering Cautions

- Over-filtering: Overly aggressive filtering removes real motion details
- Under-filtering: Insufficient smoothing leaves noise in the data
- Cutoff frequency: Adjust based on motion speed; there is no one-size-fits-all value
- Can skip: Filtering is optional if data quality is already good

✅ Filtering Complete!

Your 3D data is now smoothed and ready for marker augmentation or kinematics!

Marker Augmentation (Optional)

Use Stanford's LSTM model to estimate 47 virtual marker positions, potentially improving inverse kinematics results.

⚠️ Important: Not Always Better

Marker augmentation doesn't necessarily improve results. It's most beneficial when using fewer than 4 cameras.

Recommendation: Run IK with and without augmentation, and compare the results.

Run Marker Augmentation

from Pose2Sim import Pose2Sim
Pose2Sim.markerAugmentation()

This creates augmented .trc files with a _LSTM suffix.

How It Works

An LSTM neural network trained on marker-based motion capture data:

- Takes your detected keypoints as input
- Predicts the positions of 47 virtual markers
- Outputs more stable but potentially less accurate motion

Trade-off: more stability vs. less precision

Configuration Requirements

[markerAugmentation]
participant_height = [1.72] # Required - height in meters
participant_mass = [70] # Optional - mass in kg (for kinetics only)
make_c3d = false # Also save as .c3d format

⚠️ Multi-Person Projects

The order must match the person IDs:

participant_height = [1.72, 1.65, 1.80] # Person 0, 1, 2
participant_mass = [70, 65, 85] # Same order!

Required Keypoints

Marker augmentation requires at least these keypoints (e.g., COCO won't work):

["Neck", "RShoulder", "LShoulder", "RHip", "LHip", "RKnee", "LKnee",
 "RAnkle", "LAnkle", "RHeel", "LHeel", "RSmallToe", "LSmallToe",
 "RBigToe", "LBigToe", "RElbow", "LElbow", "RWrist", "LWrist"]

Limitations

- No NaN values: Interpolation must fill all gaps before augmentation
- Standing pose required: Model trained on standing/walking motions
- Not suitable for: Sitting, crouching, lying down poses
- Disk space: Requires TensorFlow (~1.3 GB)
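The first requirements above (all required keypoints present, no missing values) can be checked before launching the LSTM. A small sketch, assuming the 3D trajectories are already loaded into a dict mapping keypoint names to (n_frames, 3) arrays; the loading step and the `augmentation_problems` helper are hypothetical, and the keypoint list is copied from the requirements above.

```python
import numpy as np

REQUIRED = ["Neck", "RShoulder", "LShoulder", "RHip", "LHip", "RKnee", "LKnee",
            "RAnkle", "LAnkle", "RHeel", "LHeel", "RSmallToe", "LSmallToe",
            "RBigToe", "LBigToe", "RElbow", "LElbow", "RWrist", "LWrist"]


def augmentation_problems(keypoints):
    """Return human-readable reasons why marker augmentation would fail."""
    problems = []
    missing = [name for name in REQUIRED if name not in keypoints]
    if missing:
        problems.append("missing keypoints: " + ", ".join(missing))
    for name in REQUIRED:
        if name in keypoints:
            nan_frames = np.isnan(keypoints[name]).any(axis=1)  # any NaN in x, y, or z
            if nan_frames.any():
                problems.append(f"{name}: {int(nan_frames.sum())} frame(s) with NaN")
    return problems


good = {name: np.zeros((10, 3)) for name in REQUIRED}
print(augmentation_problems(good))  # []

bad = dict(good)
del bad["Neck"]
bad["RWrist"] = np.zeros((10, 3))
bad["RWrist"][4, 1] = np.nan  # one gap left after interpolation
print(augmentation_problems(bad))  # ['missing keypoints: Neck', 'RWrist: 1 frame(s) with NaN']
```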

💾 Save Disk Space

If you skip marker augmentation, uninstall TensorFlow:

pip uninstall tensorflow

This saves ~1.3 GB of storage.

When to Use

| Use When | Skip When |
|---|---|
| Using 2-3 cameras | Using 4+ cameras |
| Noisy keypoint detection | Clean keypoint detection |
| Standing/walking motions | Sitting/crouching/lying |
| Need more stability | Need maximum precision |

✅ Decision Point

You now have both regular and augmented .trc files. Compare both in the next step (Kinematics) to see which works better for your data!

OpenSim Kinematics

Scale an OpenSim skeletal model to your participant and compute biomechanically accurate 3D joint angles using inverse kinematics.

Run Kinematics

from Pose2Sim import Pose2Sim
Pose2Sim.kinematics()

Automatic vs Manual

Automatic (Recommended, Fully Integrated)

Pose2Sim performs scaling and IK automatically, with no static trial needed:

- Intelligent scaling: Uses frames where the person is standing upright
- Outlier removal: Removes the fastest 10% of frames, stationary frames, and crouching frames
- Robust averaging: Mean of the best segment measurements
- Automatic IK: Runs inverse kinematics on all frames

Manual (OpenSim GUI)

For specific trials or fine-tuned control, use the OpenSim GUI:

- Open the OpenSim GUI
- Load the model from Pose2Sim/OpenSim_Setup/
- Tools → Scale Model → Load the scaling setup .xml
- Tools → Inverse Kinematics → Load the IK setup .xml
- Run and save the results

Configuration Options

[opensim]
use_augmentation = false # Use LSTM-augmented markers or not
use_contacts_muscles = false # Include muscles and contact spheres
right_left_symmetry = true # Enforce bilateral symmetry
remove_scaling_setup = false # Keep scaling files for inspection
remove_ik_setup = false # Keep IK files for inspection

# Model selection
use_simple_model = false # Simple model (10x faster, stiff spine)

# Participant info
participant_height = [1.72] # meters - must match marker augmentation
participant_mass = [70] # kg - affects kinetics, not kinematics

Simple vs Full Model

| Feature | Simple Model | Full Model |
|---|---|---|
| Speed | ~0.7 s per trial | ~9 s per trial |
| Spine | Stiff/rigid | Flexible |
| Shoulders | Ball joint | Anatomical constraints |
| Muscles | None | Full muscle set |
| Best For | Gait, running, basic motion | Complex motion, research |

Scaling Strategy

Pose2Sim selects frames for scaling by removing:

- 10% fastest frames: Potential detection outliers
- Zero-speed frames: Person likely out of frame
- Crouching frames: Hip/knee flexion > 45° (less accurate)
- 20% extreme values: After the filtering above

The remaining frames are averaged to obtain robust segment lengths.

# Adjust these in Config.toml if needed
[opensim.scaling]
fastest_frames_to_remove_percent = 10
large_hip_knee_angles = 45
trimmed_extrema_percent = 20
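The frame-selection steps can be sketched as a boolean mask followed by a trimmed mean. This is our illustration, not Pose2Sim's code: per-frame speeds, hip/knee flexion angles, and segment lengths are assumed to be precomputed, the parameter names reuse the Config.toml keys above, and reading `trimmed_extrema_percent = 20` as dropping 10% of the values at each end is an assumption.

```python
import numpy as np


def select_scaling_frames(speed, hip_flexion_deg, knee_flexion_deg,
                          fastest_frames_to_remove_percent=10,
                          large_hip_knee_angles=45):
    """Boolean mask of the frames kept for scaling."""
    speed = np.asarray(speed, dtype=float)
    keep = speed > 0  # zero speed: person probably out of frame
    cutoff = np.percentile(speed, 100 - fastest_frames_to_remove_percent)
    keep &= speed < cutoff  # drop the fastest frames (likely detection outliers)
    keep &= np.asarray(hip_flexion_deg) <= large_hip_knee_angles  # no crouching
    keep &= np.asarray(knee_flexion_deg) <= large_hip_knee_angles
    return keep


def trimmed_mean(values, trimmed_extrema_percent=20):
    """Mean after dropping trimmed_extrema_percent / 2 % of the values at each end."""
    v = np.sort(np.asarray(values, dtype=float))
    k = int(len(v) * trimmed_extrema_percent / 200)
    return v[k:len(v) - k].mean()


# Hypothetical per-frame data for 10 frames
speed = np.array([0, 1, 1, 1, 1, 1, 1, 1, 1, 10.0])
hip = np.array([10, 10, 10, 60, 10, 10, 10, 10, 10, 10.0])
knee = np.full(10, 5.0)
keep = select_scaling_frames(speed, hip, knee)
print(int(keep.sum()))  # 7

# Hypothetical thigh lengths (m) over 10 frames, with one outlier
thigh = np.array([0.40, 0.41, 0.42, 0.41, 0.40, 0.42, 0.41, 0.40, 0.41, 0.80])
print(round(trimmed_mean(thigh), 3))  # 0.41
```

Trimming before averaging keeps a single bad frame (the 0.80 m outlier here) from inflating the scaled segment.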

Output Files

Created in the kinematics/ folder:

- *_scaled.osim: Scaled OpenSim model for each person
- *.mot: Joint angles over time (open with Excel or OpenSim)
- *_scaling.xml: Scaling setup (if not removed)
- *_ik.xml: IK setup (if not removed)

Visualize Results

In the OpenSim GUI:

- File → Open Model: Load *_scaled.osim
- File → Load Motion: Load the *.mot file
- Play the animation to verify realistic motion

Or use the Blender add-on for better visualization!

⚠️ When Automatic Scaling May Fail

Automatic scaling works best for standing/walking. Use manual scaling for:

- Mostly sitting or crouching trials
- Unusual body positions throughout
- Extreme motions (gymnastics, dancing)

In these cases, capture a separate standing trial for scaling.

🚀 Further Analysis

With the scaled model and joint angles, you can proceed to:

- Inverse Dynamics: compute joint torques
- Muscle Analysis: estimate muscle forces
- Moco: trajectory optimization and prediction
- Ground Reaction Forces: estimate them with contact spheres

✅ Kinematics Complete!

You now have 3D joint angles computed on a scaled OpenSim model, and your complete 3D motion capture workflow is finished!

All Parameters Reference

Reference of the main configuration parameters in Config.toml.

📁 Project Settings

| Parameter | Description | Default |
|---|---|---|
| `project_dir` | Absolute path to the project folder | current directory |
| `frame_range` | [start, end], or [] for all frames | [] |
| `frame_rate` | Video frame rate (auto-detected) | auto |
| `multi_person` | Track multiple people | false |

🎯 Pose Estimation

| Parameter | Description | Default |
|---|---|---|
| `pose_model` | body_with_feet, whole_body, whole_body_wrist, CUSTOM | body_with_feet |
| `mode` | lightweight, balanced, performance (or a custom dict) | balanced |
| `det_frequency` | Run person detection every N frames | 1 |
| `tracking_mode` | sports2d, deepsort, none | sports2d |
| `display_detection` | Show detection in real time | true |
| `save_video` | 'to_video', 'to_images', 'none' | 'to_video' |
| `output_format` | openpose, mmpose, deeplabcut | openpose |

📐 Calibration

| Parameter | Description | Default |
|---|---|---|
| `calibration_type` | convert, calculate | convert |
| `convert_from` | qualisys, optitrack, vicon, opencap, etc. | qualisys |
| `binning_factor` | For Qualisys, if filming in 540p | 1 |

Intrinsic Calibration

| Parameter | Description | Default |
|---|---|---|
| `overwrite_intrinsics` | Recalculate intrinsics, or reuse existing ones | false |
| `intrinsics_corners_nb` | [rows, cols] of internal checkerboard corners | [9, 6] |
| `intrinsics_square_size` | Square size (mm) | 60 |
| `show_detection_intrinsics` | Display corner detection | true |

Extrinsic Calibration

| Parameter | Description | Default |
|---|---|---|
| `extrinsics_method` | board, scene, keypoints | board |
| `extrinsics_corners_nb` | [rows, cols] of internal corners (board method) | [10, 7] |
| `extrinsics_square_size` | Square size (mm) | 60 |
| `show_detection_extrinsics` | Display detected corners/points | true |
| `object_coords_3d` | Scene method: measured 3D coordinates of reference points | [] |

🔄 Synchronization

| Parameter | Description | Default |
|---|---|---|
| `synchronization_gui` | Use the interactive GUI | true |
| `reset_sync` | Start fresh, or refine an existing synchronization | false |
| `frames_range` | [start, end] frames used for sync analysis | [] |
| `display_corr` | Show correlation plots | true |
| `keypoints_to_consider` | List of keypoints used for sync | ['RWrist'] |
| `approx_time_maxspeed` | Approximate time of max speed, or 'auto' | auto |
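
Synchronization relies on the same movement (for example a fast `RWrist` motion) producing similar keypoint-speed curves in every camera, only shifted in time. A minimal sketch of that idea: find the frame lag that maximizes the Pearson correlation between two cameras' speed curves. The function names are hypothetical, the search is brute force over ±`max_lag` frames, and Pose2Sim's own algorithm may differ in its details (signal choice, filtering, windowing around `approx_time_maxspeed`).

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if one is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def best_lag(ref_speed, other_speed, max_lag):
    """Lag (frames) in [-max_lag, max_lag] that best aligns two speed curves.

    A positive lag means `other_speed` is delayed relative to `ref_speed`,
    i.e. ref_speed[t] matches other_speed[t + lag].
    """
    def corr_at(lag):
        # Only compare the frames where both curves overlap at this lag
        ts = [t for t in range(len(ref_speed)) if 0 <= t + lag < len(other_speed)]
        return pearson([ref_speed[t] for t in ts], [other_speed[t + lag] for t in ts])

    return max(range(-max_lag, max_lag + 1), key=corr_at)


# Made-up example: camera 2 sees the same speed peak 3 frames later
cam1 = [0.0] * 10 + [1.0, 3.0, 6.0, 3.0, 1.0] + [0.0] * 15
cam2 = [0.0] * 3 + cam1[:-3]
lag = best_lag(cam1, cam2, max_lag=5)
print(lag, "frames =", lag / 30, "s at 30 fps")  # 3 frames = 0.1 s at 30 fps
```

Dividing the lag by the frame rate converts it to a time offset in seconds.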

👥 Person Association

| Parameter | Description | Default |
|---|---|---|
| `likelihood_threshold_association` | Min keypoint confidence for association | 0.3 |
| `reproj_error_threshold_association` | Max reprojection error (px) | 20 |
| `min_cameras_for_triangulation` | Minimum number of cameras needed | 2 |
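
Both reprojection-error thresholds are expressed in pixels: a triangulated 3D point is projected back into each camera and compared with the 2D keypoint detected there. A minimal pinhole-camera sketch of that measurement, ignoring lens distortion; the helper names, the 3x4 projection matrix `P`, and the camera values below are hypothetical.

```python
import math


def project(P, point_3d):
    """Project a 3D point (X, Y, Z) with a 3x4 projection matrix P to pixels (u, v)."""
    X = [*point_3d, 1.0]  # homogeneous coordinates
    x, y, w = (sum(P[r][c] * X[c] for c in range(4)) for r in range(3))
    return x / w, y / w


def reprojection_error(P, point_3d, observed_uv):
    """Pixel distance between the projected 3D point and the detected 2D keypoint."""
    u, v = project(P, point_3d)
    return math.hypot(u - observed_uv[0], v - observed_uv[1])


# Hypothetical camera at the origin: focal length 1000 px, principal point (960, 540)
P = [[1000, 0, 960, 0],
     [0, 1000, 540, 0],
     [0, 0, 1, 0]]
err = reprojection_error(P, (0.1, 0.0, 2.0), (1040.0, 540.0))
print(err, err <= 20)  # 30.0 False: above a 20 px threshold
```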

📐 Triangulation

| Parameter | Description | Default |
|---|---|---|
| `reproj_error_threshold_triangulation` | Max reprojection error (px) | 15 |
| `likelihood_threshold_triangulation` | Min keypoint confidence | 0.3 |
| `min_cameras_for_triangulation` | Minimum number of cameras required | 2 |
| `interpolation_kind` | cubic, linear, slinear, quadratic | cubic |
| `interp_if_gap_smaller_than` | Max gap size to interpolate (frames) | 10 |
| `show_interp_indices` | Display interpolated frames | true |
| `handle_LR_swap` | Correct left/right swaps (KEEP FALSE for now) | false |
| `undistort_points` | Undistort points before triangulation (KEEP FALSE for now) | false |
| `make_c3d` | Also save as .c3d | false |
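
`interp_if_gap_smaller_than` limits gap filling to short dropouts, so that long occlusions are not invented. The sketch below shows that rule with linear interpolation on a single coordinate. It is a hypothetical helper: the other `interpolation_kind` options (cubic, quadratic, ...) would typically use a spline routine such as `scipy.interpolate.interp1d` instead, and leaving gaps that touch the first or last frame unfilled is an assumption of this sketch.

```python
import math


def fill_short_gaps(values, max_gap=10):
    """Linearly interpolate runs of NaN shorter than `max_gap` frames.

    Longer gaps, and gaps touching the first or last frame, stay NaN.
    """
    out = list(values)
    i = 0
    while i < len(out):
        if not math.isnan(out[i]):
            i += 1
            continue
        start = i  # first missing frame
        while i < len(out) and math.isnan(out[i]):
            i += 1
        end = i  # first valid frame after the gap (or len(out))
        gap = end - start
        if start > 0 and end < len(out) and gap < max_gap:
            left, right = out[start - 1], out[end]
            for j in range(start, end):
                frac = (j - start + 1) / (gap + 1)
                out[j] = left + frac * (right - left)
    return out


nan = float("nan")
print(fill_short_gaps([0.0, nan, nan, nan, 8.0]))  # [0.0, 2.0, 4.0, 6.0, 8.0]
```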

🔄 Filtering

| Parameter | Description | Default |
|---|---|---|
| `type` | butterworth, kalman, gaussian, loess, median | butterworth |
| `display_figures` | Show before/after plots | true |
| `butterworth_order` | Filter order | 4 |
| `butterworth_cut_off_frequency` | Cut-off frequency (Hz) | 6 |
| `kalman_trust_ratio` | Ratio of measurement trust to process trust | 500 |
| `kalman_smooth` | Apply a smoothing pass | true |
| `gaussian_sigma_kernel` | Standard deviation (sigma) of the Gaussian kernel | 5 |
| `loess_nb_values_used` | Number of values used in each LOESS fit | 30 |
| `median_kernel_size` | Median filter kernel size | 5 |
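
The Butterworth cut-off is given in Hz, so it only makes sense relative to the frame rate: it must stay below the Nyquist frequency (half the frame rate), e.g. 6 Hz against a 30 Hz Nyquist limit at 60 fps. A minimal sketch with SciPy, assuming a zero-phase forward-backward (`filtfilt`) application; the helper name is hypothetical and this is not necessarily how Pose2Sim applies the filter internally. A forward-backward pass doubles the effective filter order.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def butterworth_lowpass(signal, frame_rate, cut_off_frequency=6.0, order=4):
    """Zero-phase low-pass Butterworth filter of a 1D coordinate signal."""
    nyquist = frame_rate / 2
    if not 0 < cut_off_frequency < nyquist:
        raise ValueError(f"cut-off must be between 0 and {nyquist} Hz")
    # Design directly in Hz by passing the sampling rate
    b, a = butter(order, cut_off_frequency, btype="low", fs=frame_rate)
    # Forward-backward pass: no phase lag
    return filtfilt(b, a, signal)


# Made-up signal: 1 Hz movement plus 20 Hz jitter, sampled at 60 fps
frame_rate = 60
t = np.arange(0, 2, 1 / frame_rate)
clean = np.sin(2 * np.pi * 1.0 * t)
noisy = clean + 0.2 * np.sin(2 * np.pi * 20.0 * t)
smooth = butterworth_lowpass(noisy, frame_rate)
# Max error before vs after filtering (away from the edges)
print(np.abs(noisy - clean).max(), np.abs(smooth - clean)[30:-30].max())
```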

🎯 Marker Augmentation

| Parameter | Description | Default |
|---|---|---|
| `participant_height` | Height in meters (list for multi-person) | [1.72] |
| `participant_mass` | Mass in kg (list for multi-person) | [70] |
| `make_c3d` | Also save as .c3d | false |

🤸 OpenSim Kinematics

| Parameter | Description | Default |
|---|---|---|
| `use_augmentation` | Use LSTM-augmented markers | false |
| `use_simple_model` | Simple model (~10x faster) | false |
| `use_contacts_muscles` | Include muscles and contact spheres | false |
| `right_left_symmetry` | Enforce bilateral symmetry | true |
| `remove_scaling_setup` | Delete the scaling .xml afterwards | false |
| `remove_ik_setup` | Delete the IK .xml afterwards | false |

📖 Full Documentation

For complete details and examples, see the Pose2Sim GitHub repository.

Performance Optimization

Speed up processing for large projects and batch operations.

1. Calibration - Run Once

If your cameras don't move between sessions:

- Run `Pose2Sim.calibration()` only once
- Copy `Calib.toml` to new project folders
- Skip the calibration step in those projects

💡 Time Saved

Calibration can take 5-15 minutes, so reusing it saves that time in every session!
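
If you reuse a calibration across many project folders, the copy step is easy to script. A minimal sketch with the standard library, assuming each project keeps its calibration file in a `calibration/` subfolder (as in the Pose2Sim demo folders); `reuse_calibration` is a hypothetical helper, so adapt the paths to your own layout.

```python
import shutil
from pathlib import Path


def reuse_calibration(calib_file, project_dirs):
    """Copy an existing Calib.toml into each project's calibration/ folder.

    Assumed layout: <project>/calibration/Calib.toml
    """
    calib_file = Path(calib_file)
    copied = []
    for project in project_dirs:
        dest_dir = Path(project) / "calibration"
        dest_dir.mkdir(parents=True, exist_ok=True)  # create the folder if missing
        dest = dest_dir / calib_file.name
        shutil.copy2(calib_file, dest)  # copy, preserving file metadata
        copied.append(dest)
    return copied
```

For example, `reuse_calibration("session_01/calibration/Calib.toml", ["session_02", "session_03"])` (hypothetical folder names) copies the file into both new projects.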

2. Pose Estimation Optimization

Use GPU (Biggest Speedup)

GPU acceleration provides a 3-10x speedup. See Step 1 for installation.

⚡ Speed Comparison

Processing 4 camera videos (500 frames each):

- CPU: ~150 seconds
- GPU: ~30 seconds

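Reduce Detection Frequency

Large speedup with minimal accuracy loss:

```toml
[pose]
det_frequency = 100 # Detect people every 100 frames instead of every frame
```

Keypoints are still estimated on every frame; between detections, people are tracked from their previously detected bounding boxes.

Result: 150 s → 30 s (5x faster)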
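
The idea behind `det_frequency` can be sketched as follows: the expensive full-frame person detector runs only every N frames, while the pose model still runs on every frame, using boxes derived from the previous frame's keypoints. This is an illustration, not Pose2Sim's code; `detect` and `estimate_pose` are hypothetical callables standing in for the real detector and pose model, and `estimate_video` and `bbox_from_keypoints` are made-up names.

```python
def bbox_from_keypoints(points, margin=10):
    """Box (x_min, y_min, x_max, y_max) around one person's keypoints, plus a margin."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs) - margin, min(ys) - margin, max(xs) + margin, max(ys) + margin)


def estimate_video(frames, detect, estimate_pose, det_frequency=100):
    """Run pose estimation on every frame, but person detection every N frames.

    detect(frame) -> list of boxes
    estimate_pose(frame, boxes) -> list of per-person keypoint lists [(x, y), ...]
    """
    results, boxes = [], []
    for idx, frame in enumerate(frames):
        if idx % det_frequency == 0 or not boxes:
            boxes = detect(frame)  # expensive full-frame person detection
        people = estimate_pose(frame, boxes)  # pose model still runs on every frame
        results.append(people)
        # Track between detections: next boxes come from this frame's keypoints
        boxes = [bbox_from_keypoints(p) for p in people]
    return results


# Hypothetical stand-ins that count how often the detector is called
calls = {"detect": 0}


def fake_detect(frame):
    calls["detect"] += 1
    return [(0, 0, 100, 200)]


def fake_pose(frame, boxes):
    # One person per box, with two keypoints inside it
    return [[(b[0] + 10, b[1] + 10), (b[2] - 10, b[3] - 10)] for b in boxes]


estimate_video(range(500), fake_detect, fake_pose, det_frequency=100)
print(calls["detect"])  # 5 detector calls for 500 frames
```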

Use Lightweight Mode

```toml
[pose]
mode = 'lightweight' # Faster model, slightly less accurate
```

Result: 30 s → 20 s (1.5x faster)

Disable Real-Time Display

```toml
[pose]
display_detection = false # Don't show video during processing
```

Result: 20 s → 15 s (1.3x faster)

Skip Video Saving

```toml
[pose]
save_video = 'none' # Don't save annotated videos
```

Result: 15 s → 9 s (1.7x faster)

Use Sports2D Tracker

```toml
[pose]
tracking_mode = 'sports2d' # Faster than deepsort for simple scenes
```

✅ Cumulative Speedup

Combining the settings above (each result builds on the previous one):

150 s → 9 s (17x faster!)
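
The cumulative figure is simply the product of the individual speedups (equivalently, the first timing divided by the last). A quick check using the example timings quoted in this section; the labels are only for display.

```python
import math

# Example timings from this section (seconds), each step building on the previous one
steps = [
    ("starting point", 150),
    ("det_frequency = 100", 30),
    ("mode = 'lightweight'", 20),
    ("display_detection = false", 15),
    ("save_video = 'none'", 9),
]

ratios = []
for (_, before), (label, after) in zip(steps, steps[1:]):
    ratios.append(before / after)
    print(f"{label:<27} {before:>3} s -> {after:>3} s  ({before / after:.1f}x)")

total = math.prod(ratios)  # same as 150 / 9
print(f"cumulative: {total:.1f}x")  # cumulative: 16.7x, rounded to 17x above
```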

3. Skip Unnecessary Steps

| Step | Skip if... |
|---|---|
| Calibration | Cameras haven't moved |
| Synchronization | Cameras are natively synchronized |
| Person Association | Only one person is in the scene |
| Filtering | Data is already clean |
| Marker Augmentation | Using 4+ cameras (augmentation adds little) |

4. OpenSim Optimization

```toml
[opensim]
use_simple_model = true # ~10x faster than the full model
```

Result: 9 s → 0.7 s per trial

The simple model is accurate enough for most gait analyses.

5. Batch Processing Structure

Efficient organization for processing multiple trials:

- A single calibration for the entire batch
- Global parameters in the session-level Config.toml
- Trial-specific overrides only when needed
- Run from the session level to process all trials
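
Trial-level overrides work best when only the differing keys are written in the trial's Config.toml and everything else is inherited from the session level. The sketch below illustrates that merge rule as a recursive dictionary update on already-parsed TOML (for example with Python's `tomllib`); `recursive_update` is a hypothetical helper, not necessarily how Pose2Sim merges its files.

```python
def recursive_update(base, override):
    """Return a copy of `base` where keys from `override` win.

    Nested tables (dicts) are merged key by key instead of replaced wholesale,
    and `base` itself is left unchanged.
    """
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = recursive_update(merged[key], value)
        else:
            merged[key] = value
    return merged


# Dicts as they would be loaded from session- and trial-level Config.toml files
session_cfg = {"pose": {"mode": "balanced", "det_frequency": 100},
               "filtering": {"type": "butterworth"}}
trial_cfg = {"pose": {"mode": "lightweight"}}  # trial-specific override only

cfg = recursive_update(session_cfg, trial_cfg)
print(cfg["pose"])  # {'mode': 'lightweight', 'det_frequency': 100}
```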

6. Frame Range Limitation

Process only the relevant portion of each trial:

```toml
[project]
frame_range = [100, 500] # Only process frames 100-500
```

Especially useful for long captures where the action is brief.

Maximum Speed Configuration

For the fastest processing (batch operations, prototyping):

```toml
[pose]
mode = 'lightweight'
det_frequency = 100
display_detection = false
save_video = 'none'
tracking_mode = 'sports2d'

[filtering]
display_figures = false

[opensim]
use_simple_model = true
use_augmentation = false
```

💡 Performance Tips Summary

- GPU: single biggest speedup (3-10x)
- Detection frequency: set to 50-100 for a ~5x speedup
- Lightweight mode: ~1.5x speedup with minimal accuracy loss
- Disabling display and video saving: another ~2x combined (20 s → 9 s in the example above)
- Simple OpenSim model: ~10x faster IK
- Skip unnecessary steps: don't run what you don't need

⚠️ Speed vs Accuracy Trade-offs

Some optimizations reduce accuracy:

- Lightweight mode: slightly less accurate pose detection
- High det_frequency: may miss fast movements
- Simple OpenSim model: less anatomically detailed

Recommendation: use full-accuracy settings for final analysis, and optimized settings for testing and development.

✅ Optimization Complete!

You now know how to process data efficiently at any scale, from single trials to large research studies!