-

-

-

"a red cat playing with a (ball)1.5"

-

-

-

-

"a red cat playing with a (ball)0.6"

-

+

+

diff --git a/docs/source/en/using-diffusers/write_own_pipeline.md b/docs/source/en/using-diffusers/write_own_pipeline.md

index bdcd4e5d1307..283397ff3e9d 100644

--- a/docs/source/en/using-diffusers/write_own_pipeline.md

+++ b/docs/source/en/using-diffusers/write_own_pipeline.md

@@ -106,7 +106,7 @@ Let's try it out!

## Deconstruct the Stable Diffusion pipeline

-Stable Diffusion is a text-to-image *latent diffusion* model. It is called a latent diffusion model because it works with a lower-dimensional representation of the image instead of the actual pixel space, which makes it more memory efficient. The encoder compresses the image into a smaller representation, and a decoder to convert the compressed representation back into an image. For text-to-image models, you'll need a tokenizer and an encoder to generate text embeddings. From the previous example, you already know you need a UNet model and a scheduler.

+Stable Diffusion is a text-to-image *latent diffusion* model. It is called a latent diffusion model because it works with a lower-dimensional representation of the image instead of the actual pixel space, which makes it more memory efficient. The encoder compresses the image into a smaller representation, and a decoder converts the compressed representation back into an image. For text-to-image models, you'll need a tokenizer and an encoder to generate text embeddings. From the previous example, you already know you need a UNet model and a scheduler.

As you can see, this is already more complex than the DDPM pipeline which only contains a UNet model. The Stable Diffusion model has three separate pretrained models.

diff --git a/docs/source/ko/api/pipelines/stable_diffusion/stable_diffusion_xl.md b/docs/source/ko/api/pipelines/stable_diffusion/stable_diffusion_xl.md

index d7211d6b9471..d708dfa59dad 100644

--- a/docs/source/ko/api/pipelines/stable_diffusion/stable_diffusion_xl.md

+++ b/docs/source/ko/api/pipelines/stable_diffusion/stable_diffusion_xl.md

@@ -121,7 +121,7 @@ image = pipe(prompt=prompt, image=init_image, mask_image=mask_image, num_inferen

### Refining the image output

-In addition to the [base model checkpoint](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0), StableDiffusion-XL also includes a [refiner checkpoint](huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0) that is specialized in denoising low-noise-stage images to produce images with better high-frequency quality. This refiner checkpoint can be used as a "second-step" pipeline after running the base checkpoint to improve image quality.

+In addition to the [base model checkpoint](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0), StableDiffusion-XL also includes a [refiner checkpoint](https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0) that is specialized in denoising low-noise-stage images to produce images with better high-frequency quality. This refiner checkpoint can be used as a "second-step" pipeline after running the base checkpoint to improve image quality.

When using the refiner, you can easily

- 1.) use the base model and the refiner as an *ensemble of denoisers*, as first proposed in [eDiff-I](https://research.nvidia.com/labs/dir/eDiff-I/), or

@@ -215,7 +215,7 @@ image = refiner(

#### 2.) Refining the image output from a fully denoised base image

-In standard [`StableDiffusionImg2ImgPipeline`] fashion, the fully denoised image generated by the base model can be further improved using the [refiner checkpoint](huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0).

+In standard [`StableDiffusionImg2ImgPipeline`] fashion, the fully denoised image generated by the base model can be further improved using the [refiner checkpoint](https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0).

To do so, run the normal "base" text-to-image pipeline and then run the refiner as an image-to-image pipeline. The output of the base model can be left in latent space.

diff --git a/docs/source/ko/training/controlnet.md b/docs/source/ko/training/controlnet.md

index afdd2c8e0004..ce83cab54e8b 100644

--- a/docs/source/ko/training/controlnet.md

+++ b/docs/source/ko/training/controlnet.md

@@ -66,12 +66,6 @@ from accelerate.utils import write_basic_config

write_basic_config()

```

-## Circle filling dataset

-

-The original dataset is hosted in the ControlNet [repo](https://huggingface.co/lllyasviel/ControlNet/blob/main/training/fill50k.zip), but we re-uploaded it [here](https://huggingface.co/datasets/fusing/fill50k) so that it is compatible with 🤗 Datasets and data loading can be handled within the training script.

-

-Our training example uses [`stable-diffusion-v1-5/stable-diffusion-v1-5`](https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5), which the original ControlNet was trained on. However, ControlNet can be trained to augment any compatible Stable Diffusion model, such as [`CompVis/stable-diffusion-v1-4`](https://huggingface.co/CompVis/stable-diffusion-v1-4) or [`stabilityai/stable-diffusion-2-1`](https://huggingface.co/stabilityai/stable-diffusion-2-1).

-

To use your own dataset, see the [Create a dataset for training](create_dataset) guide.

## Training

diff --git a/docs/source/ko/training/create_dataset.md b/docs/source/ko/training/create_dataset.md

index 6987a6c9d4f0..401a73ebf237 100644

--- a/docs/source/ko/training/create_dataset.md

+++ b/docs/source/ko/training/create_dataset.md

@@ -1,7 +1,7 @@

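# Create a dataset for training

There are many datasets on the [Hub](https://huggingface.co/datasets?task_categories=task_categories:text-to-image&sort=downloads) for training a model,

-but if you can't find one you're interested in or want to use, you can create a dataset with the 🤗 [Datasets](hf.co/docs/datasets) library.

+but if you can't find one you're interested in or want to use, you can create a dataset with the 🤗 [Datasets](https://huggingface.co/docs/datasets) library.

The dataset structure depends on the task you want to train your model on.

The most basic dataset structure is a directory of images for tasks like unconditional image generation.

Another dataset structure may be a directory of images plus a text file containing the corresponding text captions, for tasks like text-to-image generation.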
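The link fixes in these Korean docs (for example `hf.co/docs/datasets` becoming `https://huggingface.co/docs/datasets`) matter because a Markdown link target without a scheme is treated as a relative path on the current page. The sketch below illustrates this with the standard library's `urllib.parse.urljoin`; the page URL is a hypothetical stand-in for wherever the doc is rendered, not something stated in the diff.

```python
from urllib.parse import urljoin

# Hypothetical URL of the rendered page; used only for illustration.
PAGE_URL = "https://huggingface.co/docs/diffusers/ko/training/create_dataset"


def resolve(link_target: str) -> str:
    """Resolve a link target relative to PAGE_URL, as a browser would for an href."""
    return urljoin(PAGE_URL, link_target)


# Without a scheme, the target is appended to the current page's directory.
broken = resolve("hf.co/docs/datasets")
# With an explicit scheme, the target is used as an absolute URL.
fixed = resolve("https://huggingface.co/docs/datasets")

print(broken)
print(fixed)
```

The first result points at a path under the docs page rather than at the Datasets documentation, which is the failure the added `https://` prefix avoids.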

diff --git a/docs/source/ko/training/lora.md b/docs/source/ko/training/lora.md

index 6b905951aafc..85ed1dda0b81 100644

--- a/docs/source/ko/training/lora.md

+++ b/docs/source/ko/training/lora.md

@@ -36,7 +36,7 @@ specific language governing permissions and limitations under the License.

[cloneofsimo](https://github.com/cloneofsimo) was the first to try LoRA training for Stable Diffusion, in the popular [lora](https://github.com/cloneofsimo/lora) GitHub repository. 🧨 Diffusers supports LoRA training for [text-to-image generation](https://github.com/huggingface/diffusers/tree/main/examples/text_to_image#training-with-lora) and [DreamBooth](https://github.com/huggingface/diffusers/tree/main/examples/dreambooth#training-with-low-rank-adaptation-of-large-language-models-lora). This guide shows how to do both.

-To save your model or share it with the community, log in to your Hugging Face account ([create](hf.co/join) one if you don't have one yet):

+To save your model or share it with the community, log in to your Hugging Face account ([create](https://huggingface.co/join) one if you don't have one yet):

```bash

huggingface-cli login

diff --git a/docs/source/ko/tutorials/basic_training.md b/docs/source/ko/tutorials/basic_training.md

index f34507b50c9d..5b08bb39d602 100644

--- a/docs/source/ko/tutorials/basic_training.md

+++ b/docs/source/ko/tutorials/basic_training.md

@@ -76,7 +76,7 @@ huggingface-cli login

... output_dir = "ddpm-butterflies-128" # model name used locally and on the HF Hub

... push_to_hub = True # whether to upload the saved model to the HF Hub

-... hub_private_repo = False

+... hub_private_repo = None

... overwrite_output_dir = True # whether to overwrite the previous model when re-running the notebook

... seed = 0

diff --git a/docs/source/zh/_toctree.yml b/docs/source/zh/_toctree.yml

index 41d5e95a4230..6416c468a8e9 100644

--- a/docs/source/zh/_toctree.yml

+++ b/docs/source/zh/_toctree.yml

@@ -5,6 +5,8 @@

title: Quicktour

- local: stable_diffusion

title: Effective and efficient diffusion

+ - local: consisid

+ title: Identity-preserving text-to-video generation

- local: installation

title: Installation

title: Get started

diff --git a/docs/source/zh/consisid.md b/docs/source/zh/consisid.md

new file mode 100644

index 000000000000..2f404499fc69

--- /dev/null

+++ b/docs/source/zh/consisid.md

@@ -0,0 +1,100 @@

+

+# ConsisID

+

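+[ConsisID](https://github.com/PKU-YuanGroup/ConsisID) is an identity-preserving text-to-video generation model that keeps the face consistent in the generated video through frequency decomposition. It has the following features:

+

+- Frequency decomposition: the identity features of a person are decoupled into high-frequency and low-frequency parts, the characteristics of the DiT architecture are analyzed from a frequency-domain perspective, and a suitable way of injecting control information is designed based on those characteristics.

+

+- Consistency training strategy: we propose a coarse-to-fine training strategy, a dynamic mask loss, and a dynamic cross-face loss, which further improve the model's generalization ability and identity preservation.

+

+

+- Tuning-free inference: previous methods require case-by-case fine-tuning on the input ID before inference, which is costly in time and compute, whereas our method is tuning-free.

+

+

+This guide walks you through using ConsisID to generate identity-preserving videos.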

+

+## Load Model Checkpoints

+Model weights may be stored in separate subfolders on the Hub or locally, in which case you should use the [`~DiffusionPipeline.from_pretrained`] method.

+

+

+```python

+# !pip install consisid_eva_clip insightface facexlib

+import torch

+from diffusers import ConsisIDPipeline

+from diffusers.pipelines.consisid.consisid_utils import prepare_face_models, process_face_embeddings_infer

+from huggingface_hub import snapshot_download

+

+# Download ckpts

+snapshot_download(repo_id="BestWishYsh/ConsisID-preview", local_dir="BestWishYsh/ConsisID-preview")

+

+# Load face helper model to preprocess input face image

+face_helper_1, face_helper_2, face_clip_model, face_main_model, eva_transform_mean, eva_transform_std = prepare_face_models("BestWishYsh/ConsisID-preview", device="cuda", dtype=torch.bfloat16)

+

+# Load consisid base model

+pipe = ConsisIDPipeline.from_pretrained("BestWishYsh/ConsisID-preview", torch_dtype=torch.bfloat16)

+pipe.to("cuda")

+```

+

+## Identity-Preserving Text-to-Video

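+For identity-preserving text-to-video generation, pass a text prompt and an image containing a clear face (preferably half-body or full-body). By default, ConsisID generates 720x480 videos for the best results.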

+

+```python

+from diffusers.utils import export_to_video

+

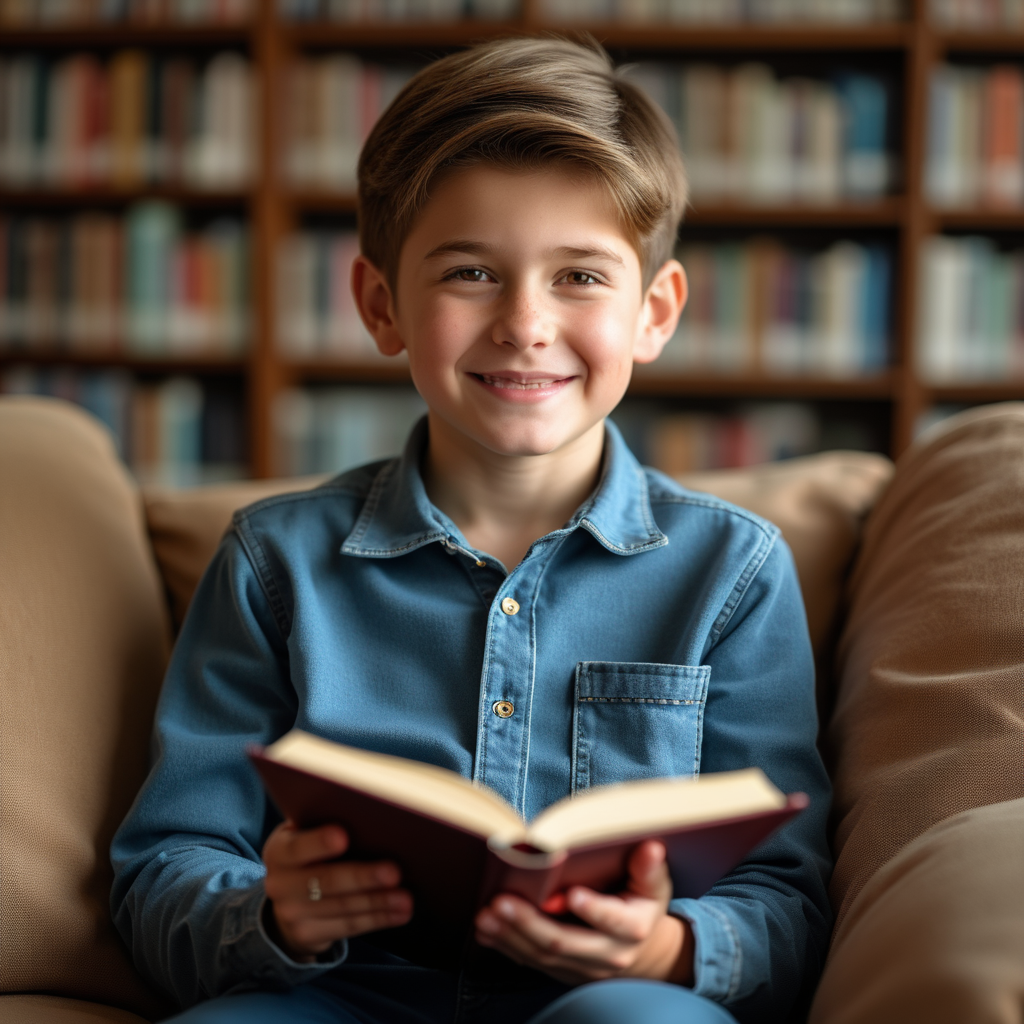

+prompt = "The video captures a boy walking along a city street, filmed in black and white on a classic 35mm camera. His expression is thoughtful, his brow slightly furrowed as if he's lost in contemplation. The film grain adds a textured, timeless quality to the image, evoking a sense of nostalgia. Around him, the cityscape is filled with vintage buildings, cobblestone sidewalks, and softly blurred figures passing by, their outlines faint and indistinct. Streetlights cast a gentle glow, while shadows play across the boy's path, adding depth to the scene. The lighting highlights the boy's subtle smile, hinting at a fleeting moment of curiosity. The overall cinematic atmosphere, complete with classic film still aesthetics and dramatic contrasts, gives the scene an evocative and introspective feel."

+image = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/consisid/consisid_input.png?download=true"

+

+id_cond, id_vit_hidden, image, face_kps = process_face_embeddings_infer(face_helper_1, face_clip_model, face_helper_2, eva_transform_mean, eva_transform_std, face_main_model, "cuda", torch.bfloat16, image, is_align_face=True)

+

+video = pipe(image=image, prompt=prompt, num_inference_steps=50, guidance_scale=6.0, use_dynamic_cfg=False, id_vit_hidden=id_vit_hidden, id_cond=id_cond, kps_cond=face_kps, generator=torch.Generator("cuda").manual_seed(42))

+export_to_video(video.frames[0], "output.mp4", fps=8)

+```

+

+

+ Face Image

+ Video

+ Description

+

+ The video, in a beautifully crafted animated style, features a confident woman riding a horse through a lush forest clearing. Her expression is focused yet serene as she adjusts her wide-brimmed hat with a practiced hand. She wears a flowy bohemian dress, which moves gracefully with the rhythm of the horse, the fabric flowing fluidly in the animated motion. The dappled sunlight filters through the trees, casting soft, painterly patterns on the forest floor. Her posture is poised, showing both control and elegance as she guides the horse with ease. The animation's gentle, fluid style adds a dreamlike quality to the scene, with the woman’s calm demeanor and the peaceful surroundings evoking a sense of freedom and harmony.

+

+

+ The video, in a captivating animated style, shows a woman standing in the center of a snowy forest, her eyes narrowed in concentration as she extends her hand forward. She is dressed in a deep blue cloak, her breath visible in the cold air, which is rendered with soft, ethereal strokes. A faint smile plays on her lips as she summons a wisp of ice magic, watching with focus as the surrounding trees and ground begin to shimmer and freeze, covered in delicate ice crystals. The animation’s fluid motion brings the magic to life, with the frost spreading outward in intricate, sparkling patterns. The environment is painted with soft, watercolor-like hues, enhancing the magical, dreamlike atmosphere. The overall mood is serene yet powerful, with the quiet winter air amplifying the delicate beauty of the frozen scene.

+

+

+ The animation features a whimsical portrait of a balloon seller standing in a gentle breeze, captured with soft, hazy brushstrokes that evoke the feel of a serene spring day. His face is framed by a gentle smile, his eyes squinting slightly against the sun, while a few wisps of hair flutter in the wind. He is dressed in a light, pastel-colored shirt, and the balloons around him sway with the wind, adding a sense of playfulness to the scene. The background blurs softly, with hints of a vibrant market or park, enhancing the light-hearted, yet tender mood of the moment.

+

+

+ The video captures a boy walking along a city street, filmed in black and white on a classic 35mm camera. His expression is thoughtful, his brow slightly furrowed as if he's lost in contemplation. The film grain adds a textured, timeless quality to the image, evoking a sense of nostalgia. Around him, the cityscape is filled with vintage buildings, cobblestone sidewalks, and softly blurred figures passing by, their outlines faint and indistinct. Streetlights cast a gentle glow, while shadows play across the boy's path, adding depth to the scene. The lighting highlights the boy's subtle smile, hinting at a fleeting moment of curiosity. The overall cinematic atmosphere, complete with classic film still aesthetics and dramatic contrasts, gives the scene an evocative and introspective feel.

+

+

+ The video features a baby wearing a bright superhero cape, standing confidently with arms raised in a powerful pose. The baby has a determined look on their face, with eyes wide and lips pursed in concentration, as if ready to take on a challenge. The setting appears playful, with colorful toys scattered around and a soft rug underfoot, while sunlight streams through a nearby window, highlighting the fluttering cape and adding to the impression of heroism. The overall atmosphere is lighthearted and fun, with the baby's expressions capturing a mix of innocence and an adorable attempt at bravery, as if truly ready to save the day.

+

+

+

+## Resources

+

+Learn more about ConsisID with the following resources:

+

+- A [video](https://www.youtube.com/watch?v=PhlgC-bI5SQ) demonstrating the main features of ConsisID;

+- For more details, see the research paper [Identity-Preserving Text-to-Video Generation by Frequency Decomposition](https://hf.co/papers/2411.17440).

diff --git a/examples/README.md b/examples/README.md

index c27507040545..7cdf25999ac3 100644

--- a/examples/README.md

+++ b/examples/README.md

@@ -40,9 +40,9 @@ Training examples show how to pretrain or fine-tune diffusion models for a varie

| [**Text-to-Image fine-tuning**](./text_to_image) | ✅ | ✅ |

| [**Textual Inversion**](./textual_inversion) | ✅ | - | [](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb)

| [**Dreambooth**](./dreambooth) | ✅ | - | [](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb)

-| [**ControlNet**](./controlnet) | ✅ | ✅ | -

-| [**InstructPix2Pix**](./instruct_pix2pix) | ✅ | ✅ | -

-| [**Reinforcement Learning for Control**](./reinforcement_learning) | - | - | coming soon.

+| [**ControlNet**](./controlnet) | ✅ | ✅ | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/controlnet.ipynb)

+| [**InstructPix2Pix**](./instruct_pix2pix) | ✅ | ✅ | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/InstructPix2Pix_using_diffusers.ipynb)

+| [**Reinforcement Learning for Control**](./reinforcement_learning) | - | - | [Notebook1](https://github.com/huggingface/notebooks/blob/main/diffusers/reinforcement_learning_for_control.ipynb), [Notebook2](https://github.com/huggingface/notebooks/blob/main/diffusers/reinforcement_learning_with_diffusers.ipynb)

## Community

diff --git a/examples/advanced_diffusion_training/README.md b/examples/advanced_diffusion_training/README.md

index cd8c5feda9f0..504ae1471f44 100644

--- a/examples/advanced_diffusion_training/README.md

+++ b/examples/advanced_diffusion_training/README.md

@@ -67,6 +67,17 @@ write_basic_config()

When running `accelerate config`, if we specify torch compile mode to True there can be dramatic speedups.

Note also that we use PEFT library as backend for LoRA training, make sure to have `peft>=0.6.0` installed in your environment.

+Lastly, we recommend logging into your HF account so that your trained LoRA is automatically uploaded to the hub:

+```bash

+huggingface-cli login

+```

+This command will prompt you for a token. Copy-paste yours from your [settings/tokens](https://huggingface.co/settings/tokens), and press Enter.

+

+> [!NOTE]

+> In the examples below we use `wandb` to document the training runs. To do the same, make sure to install `wandb`:

+> `pip install wandb`

+> Alternatively, you can use other tools / train without reporting by modifying the flag `--report_to="wandb"`.

+

### Pivotal Tuning

**Training with text encoder(s)**

diff --git a/examples/advanced_diffusion_training/README_flux.md b/examples/advanced_diffusion_training/README_flux.md

index 8817431bede5..f2a571d5eae4 100644

--- a/examples/advanced_diffusion_training/README_flux.md

+++ b/examples/advanced_diffusion_training/README_flux.md

@@ -65,16 +65,27 @@ write_basic_config()

When running `accelerate config`, if we specify torch compile mode to True there can be dramatic speedups.

Note also that we use PEFT library as backend for LoRA training, make sure to have `peft>=0.6.0` installed in your environment.

+Lastly, we recommend logging into your HF account so that your trained LoRA is automatically uploaded to the hub:

+```bash

+huggingface-cli login

+```

+This command will prompt you for a token. Copy-paste yours from your [settings/tokens](https://huggingface.co/settings/tokens), and press Enter.

+

+> [!NOTE]

+> In the examples below we use `wandb` to document the training runs. To do the same, make sure to install `wandb`:

+> `pip install wandb`

+> Alternatively, you can use other tools / train without reporting by modifying the flag `--report_to="wandb"`.

+

### Target Modules

When LoRA was first adapted from language models to diffusion models, it was applied to the cross-attention layers in the Unet that relate the image representations with the prompts that describe them.

More recently, SOTA text-to-image diffusion models replaced the Unet with a diffusion Transformer(DiT). With this change, we may also want to explore

-applying LoRA training onto different types of layers and blocks. To allow more flexibility and control over the targeted modules we added `--lora_layers`- in which you can specify in a comma seperated string

+applying LoRA training onto different types of layers and blocks. To allow more flexibility and control over the targeted modules we added `--lora_layers`- in which you can specify in a comma separated string

the exact modules for LoRA training. Here are some examples of target modules you can provide:

- for attention only layers: `--lora_layers="attn.to_k,attn.to_q,attn.to_v,attn.to_out.0"`

- to train the same modules as in the fal trainer: `--lora_layers="attn.to_k,attn.to_q,attn.to_v,attn.to_out.0,attn.add_k_proj,attn.add_q_proj,attn.add_v_proj,attn.to_add_out,ff.net.0.proj,ff.net.2,ff_context.net.0.proj,ff_context.net.2"`

- to train the same modules as in ostris ai-toolkit / replicate trainer: `--lora_blocks="attn.to_k,attn.to_q,attn.to_v,attn.to_out.0,attn.add_k_proj,attn.add_q_proj,attn.add_v_proj,attn.to_add_out,ff.net.0.proj,ff.net.2,ff_context.net.0.proj,ff_context.net.2,norm1_context.linear, norm1.linear,norm.linear,proj_mlp,proj_out"`

> [!NOTE]

-> `--lora_layers` can also be used to specify which **blocks** to apply LoRA training to. To do so, simply add a block prefix to each layer in the comma seperated string:

+> `--lora_layers` can also be used to specify which **blocks** to apply LoRA training to. To do so, simply add a block prefix to each layer in the comma separated string:

> **single DiT blocks**: to target the ith single transformer block, add the prefix `single_transformer_blocks.i`, e.g. - `single_transformer_blocks.i.attn.to_k`

> **MMDiT blocks**: to target the ith MMDiT block, add the prefix `transformer_blocks.i`, e.g. - `transformer_blocks.i.attn.to_k`
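As a rough illustration of the block-prefix convention described in the note above, the following sketch builds a `--lora_layers` value from layer names and block indices, then splits it back into module names. Both helper names are hypothetical, and the split-and-strip parsing is an assumption about how a comma-separated flag like this is typically consumed, not a copy of the training script.

```python
from typing import Iterable, List


def build_lora_layers(
    layers: Iterable[str],
    single_blocks: Iterable[int] = (),
    mmdit_blocks: Iterable[int] = (),
) -> str:
    """Build a comma-separated --lora_layers value (hypothetical helper).

    With no block indices, layer names are passed through unchanged.
    Otherwise each layer name is prefixed with the block it is limited to.
    """
    layers = list(layers)
    single_blocks = list(single_blocks)
    mmdit_blocks = list(mmdit_blocks)
    if not single_blocks and not mmdit_blocks:
        return ",".join(layers)
    targets: List[str] = []
    for i in single_blocks:
        targets += [f"single_transformer_blocks.{i}.{layer}" for layer in layers]
    for i in mmdit_blocks:
        targets += [f"transformer_blocks.{i}.{layer}" for layer in layers]
    return ",".join(targets)


def parse_lora_layers(value: str) -> List[str]:
    """Split the flag value into module names, tolerating stray spaces."""
    return [name.strip() for name in value.split(",") if name.strip()]


flag_value = build_lora_layers(["attn.to_k", "attn.to_q"], single_blocks=[7], mmdit_blocks=[0])
print(f'--lora_layers="{flag_value}"')
print(parse_lora_layers(flag_value))
```

Tolerating whitespace in the parser is a deliberate choice here, since hand-written values can contain a space after a comma.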

> [!NOTE]

diff --git a/examples/advanced_diffusion_training/train_dreambooth_lora_flux_advanced.py b/examples/advanced_diffusion_training/train_dreambooth_lora_flux_advanced.py

index 3db6896228de..b8194507d822 100644

--- a/examples/advanced_diffusion_training/train_dreambooth_lora_flux_advanced.py

+++ b/examples/advanced_diffusion_training/train_dreambooth_lora_flux_advanced.py

@@ -1,6 +1,6 @@

#!/usr/bin/env python

# coding=utf-8

-# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

+# Copyright 2025 The HuggingFace Inc. team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

@@ -74,7 +74,7 @@

import wandb

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.

-check_min_version("0.31.0.dev0")

+check_min_version("0.33.0.dev0")

logger = get_logger(__name__)

@@ -227,7 +227,7 @@ def log_validation(

pipeline.set_progress_bar_config(disable=True)

# run inference

- generator = torch.Generator(device=accelerator.device).manual_seed(args.seed) if args.seed else None

+ generator = torch.Generator(device=accelerator.device).manual_seed(args.seed) if args.seed is not None else None

autocast_ctx = nullcontext()

with autocast_ctx:
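The change from `if args.seed` to `if args.seed is not None` in the hunk above matters for a seed of `0`, which is a valid seed but is falsy in Python. A minimal sketch of the two checks, using hypothetical function names and plain integers rather than a `torch.Generator`:

```python
from typing import Optional


def seed_if_truthy(seed: Optional[int]) -> Optional[int]:
    """Old check: a seed of 0 is falsy, so it is treated like a missing seed."""
    return seed if seed else None


def seed_if_not_none(seed: Optional[int]) -> Optional[int]:
    """New check: only a missing seed (None) disables seeding."""
    return seed if seed is not None else None


for seed in (None, 0, 42):
    print(seed, seed_if_truthy(seed), seed_if_not_none(seed))
```

With the old check, running with `--seed 0` would silently fall back to an unseeded generator during validation.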

@@ -378,7 +378,7 @@ def parse_args(input_args=None):

default=None,

help="the concept to use to initialize the new inserted tokens when training with "

"--train_text_encoder_ti = True. By default, new tokens (

) are initialized with random value. "

- "Alternatively, you could specify a different word/words whos value will be used as the starting point for the new inserted tokens. "

+ "Alternatively, you could specify a different word/words whose value will be used as the starting point for the new inserted tokens. "

"--num_new_tokens_per_abstraction is ignored when initializer_concept is provided",

)

parser.add_argument(

@@ -662,7 +662,7 @@ def parse_args(input_args=None):

type=str,

default=None,

help=(

- "The transformer modules to apply LoRA training on. Please specify the layers in a comma seperated. "

+ "The transformer modules to apply LoRA training on. Please specify the layers in a comma separated. "

'E.g. - "to_k,to_q,to_v,to_out.0" will result in lora training of attention layers only. For more examples refer to https://github.com/huggingface/diffusers/blob/main/examples/advanced_diffusion_training/README_flux.md'

),

)

@@ -880,9 +880,7 @@ def save_embeddings(self, file_path: str):

idx_to_text_encoder_name = {0: "clip_l", 1: "t5"}

for idx, text_encoder in enumerate(self.text_encoders):

train_ids = self.train_ids if idx == 0 else self.train_ids_t5

- embeds = (

- text_encoder.text_model.embeddings.token_embedding if idx == 0 else text_encoder.encoder.embed_tokens

- )

+ embeds = text_encoder.text_model.embeddings.token_embedding if idx == 0 else text_encoder.shared

assert embeds.weight.data.shape[0] == len(self.tokenizers[idx]), "Tokenizers should be the same."

new_token_embeddings = embeds.weight.data[train_ids]

@@ -904,9 +902,7 @@ def device(self):

@torch.no_grad()

def retract_embeddings(self):

for idx, text_encoder in enumerate(self.text_encoders):

- embeds = (

- text_encoder.text_model.embeddings.token_embedding if idx == 0 else text_encoder.encoder.embed_tokens

- )

+ embeds = text_encoder.text_model.embeddings.token_embedding if idx == 0 else text_encoder.shared

index_no_updates = self.embeddings_settings[f"index_no_updates_{idx}"]

embeds.weight.data[index_no_updates] = (

self.embeddings_settings[f"original_embeddings_{idx}"][index_no_updates]

@@ -1650,6 +1646,8 @@ def save_model_hook(models, weights, output_dir):

elif isinstance(model, type(unwrap_model(text_encoder_one))):

if args.train_text_encoder: # when --train_text_encoder_ti we don't save the layers

text_encoder_one_lora_layers_to_save = get_peft_model_state_dict(model)

+ elif isinstance(model, type(unwrap_model(text_encoder_two))):

+ pass # when --train_text_encoder_ti and --enable_t5_ti we don't save the layers

else:

raise ValueError(f"unexpected save model: {model.__class__}")

@@ -1747,7 +1745,7 @@ def load_model_hook(models, input_dir):

if args.enable_t5_ti: # whether to do pivotal tuning/textual inversion for T5 as well

text_lora_parameters_two = []

for name, param in text_encoder_two.named_parameters():

- if "token_embedding" in name:

+ if "shared" in name:

# ensure that dtype is float32, even if rest of the model that isn't trained is loaded in fp16

param.data = param.to(dtype=torch.float32)

param.requires_grad = True

@@ -1776,15 +1774,10 @@ def load_model_hook(models, input_dir):

if not args.enable_t5_ti:

# pure textual inversion - only clip

if pure_textual_inversion:

- params_to_optimize = [

- text_parameters_one_with_lr,

- ]

+ params_to_optimize = [text_parameters_one_with_lr]

te_idx = 0

else: # regular te training or regular pivotal for clip

- params_to_optimize = [

- transformer_parameters_with_lr,

- text_parameters_one_with_lr,

- ]

+ params_to_optimize = [transformer_parameters_with_lr, text_parameters_one_with_lr]

te_idx = 1

elif args.enable_t5_ti:

# pivotal tuning of clip & t5

@@ -1807,9 +1800,7 @@ def load_model_hook(models, input_dir):

]

te_idx = 1

else:

- params_to_optimize = [

- transformer_parameters_with_lr,

- ]

+ params_to_optimize = [transformer_parameters_with_lr]

# Optimizer creation

if not (args.optimizer.lower() == "prodigy" or args.optimizer.lower() == "adamw"):

@@ -1869,7 +1860,6 @@ def load_model_hook(models, input_dir):

params_to_optimize[-1]["lr"] = args.learning_rate

optimizer = optimizer_class(

params_to_optimize,

- lr=args.learning_rate,

betas=(args.adam_beta1, args.adam_beta2),

beta3=args.prodigy_beta3,

weight_decay=args.adam_weight_decay,

@@ -2160,6 +2150,7 @@ def get_sigmas(timesteps, n_dim=4, dtype=torch.float32):

# encode batch prompts when custom prompts are provided for each image -

if train_dataset.custom_instance_prompts:

+ elems_to_repeat = 1

if freeze_text_encoder:

prompt_embeds, pooled_prompt_embeds, text_ids = compute_text_embeddings(

prompts, text_encoders, tokenizers

@@ -2174,17 +2165,21 @@ def get_sigmas(timesteps, n_dim=4, dtype=torch.float32):

max_sequence_length=args.max_sequence_length,

add_special_tokens=add_special_tokens_t5,

)

+ else:

+ elems_to_repeat = len(prompts)

if not freeze_text_encoder:

prompt_embeds, pooled_prompt_embeds, text_ids = encode_prompt(

text_encoders=[text_encoder_one, text_encoder_two],

tokenizers=[None, None],

- text_input_ids_list=[tokens_one, tokens_two],

+ text_input_ids_list=[

+ tokens_one.repeat(elems_to_repeat, 1),

+ tokens_two.repeat(elems_to_repeat, 1),

+ ],

max_sequence_length=args.max_sequence_length,

device=accelerator.device,

prompt=prompts,

)

-

# Convert images to latent space

if args.cache_latents:

model_input = latents_cache[step].sample()

@@ -2198,8 +2193,8 @@ def get_sigmas(timesteps, n_dim=4, dtype=torch.float32):

latent_image_ids = FluxPipeline._prepare_latent_image_ids(

model_input.shape[0],

- model_input.shape[2],

- model_input.shape[3],

+ model_input.shape[2] // 2,

+ model_input.shape[3] // 2,

accelerator.device,

weight_dtype,

)

@@ -2253,8 +2248,8 @@ def get_sigmas(timesteps, n_dim=4, dtype=torch.float32):

)[0]

model_pred = FluxPipeline._unpack_latents(

model_pred,

- height=int(model_input.shape[2] * vae_scale_factor / 2),

- width=int(model_input.shape[3] * vae_scale_factor / 2),

+ height=model_input.shape[2] * vae_scale_factor,

+ width=model_input.shape[3] * vae_scale_factor,

vae_scale_factor=vae_scale_factor,

)
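The two hunks above change which sizes are passed to `_prepare_latent_image_ids` and `_unpack_latents`. The sketch below only reproduces the arithmetic visible in the diff and does not call `FluxPipeline`. It assumes that Flux latents are packed into 2x2 patches (which would explain the `// 2`) and that `vae_scale_factor` is 8; both are assumptions, and the function names are hypothetical.

```python
from typing import Tuple


def patch_grid(latent_height: int, latent_width: int) -> Tuple[int, int]:
    """Size passed for latent image ids after the change: latent size // 2."""
    return latent_height // 2, latent_width // 2


def unpack_size(latent_height: int, latent_width: int, vae_scale_factor: int = 8) -> Tuple[int, int]:
    """Size passed for unpacking after the change: latent size * vae_scale_factor."""
    return latent_height * vae_scale_factor, latent_width * vae_scale_factor


# Example latent shape (batch, channels, height, width); the values are illustrative.
model_input_shape = (1, 16, 64, 64)
latent_h, latent_w = model_input_shape[2], model_input_shape[3]

grid_h, grid_w = patch_grid(latent_h, latent_w)
num_patches = grid_h * grid_w
height, width = unpack_size(latent_h, latent_w)

print(grid_h, grid_w, num_patches)
print(height, width)
```

Under these assumptions a 64x64 latent yields a 32x32 grid of patches, and the height and width handed to the unpacking step correspond to a 512x512 image.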

@@ -2377,6 +2372,9 @@ def get_sigmas(timesteps, n_dim=4, dtype=torch.float32):

epoch=epoch,

torch_dtype=weight_dtype,

)

+ images = None

+ del pipeline

+

if freeze_text_encoder:

del text_encoder_one, text_encoder_two

free_memory()

@@ -2454,6 +2452,8 @@ def get_sigmas(timesteps, n_dim=4, dtype=torch.float32):

commit_message="End of training",

ignore_patterns=["step_*", "epoch_*"],

)

+ images = None

+ del pipeline

accelerator.end_training()

diff --git a/examples/advanced_diffusion_training/train_dreambooth_lora_sd15_advanced.py b/examples/advanced_diffusion_training/train_dreambooth_lora_sd15_advanced.py

index 7e1a0298ba1d..8cd1d777c00c 100644

--- a/examples/advanced_diffusion_training/train_dreambooth_lora_sd15_advanced.py

+++ b/examples/advanced_diffusion_training/train_dreambooth_lora_sd15_advanced.py

@@ -1,6 +1,6 @@

#!/usr/bin/env python

# coding=utf-8

-# Copyright 2024 The HuggingFace Inc. team. All rights reserved.

+# Copyright 2025 The HuggingFace Inc. team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

@@ -39,7 +39,7 @@

from accelerate.utils import DistributedDataParallelKwargs, ProjectConfiguration, set_seed

from huggingface_hub import create_repo, upload_folder

from packaging import version

-from peft import LoraConfig

+from peft import LoraConfig, set_peft_model_state_dict

from peft.utils import get_peft_model_state_dict

from PIL import Image

from PIL.ImageOps import exif_transpose

@@ -59,19 +59,21 @@

)

from diffusers.loaders import StableDiffusionLoraLoaderMixin

from diffusers.optimization import get_scheduler

-from diffusers.training_utils import compute_snr

+from diffusers.training_utils import _set_state_dict_into_text_encoder, cast_training_params, compute_snr

from diffusers.utils import (

check_min_version,

convert_all_state_dict_to_peft,

convert_state_dict_to_diffusers,

convert_state_dict_to_kohya,

+ convert_unet_state_dict_to_peft,

is_wandb_available,

)

+from diffusers.utils.hub_utils import load_or_create_model_card, populate_model_card

from diffusers.utils.import_utils import is_xformers_available

# Will error if the minimal version of diffusers is not installed. Remove at your own risks.

-check_min_version("0.31.0.dev0")

+check_min_version("0.33.0.dev0")

logger = get_logger(__name__)

@@ -79,30 +81,27 @@

def save_model_card(

repo_id: str,

use_dora: bool,

- images=None,

- base_model=str,

+ images: list = None,

+ base_model: str = None,

train_text_encoder=False,

train_text_encoder_ti=False,

token_abstraction_dict=None,

- instance_prompt=str,

- validation_prompt=str,

+ instance_prompt=None,

+ validation_prompt=None,

repo_folder=None,

vae_path=None,

):

- img_str = "widget:\n"

lora = "lora" if not use_dora else "dora"

- for i, image in enumerate(images):

- image.save(os.path.join(repo_folder, f"image_{i}.png"))

- img_str += f"""

- - text: '{validation_prompt if validation_prompt else ' ' }'

- output:

- url:

- "image_{i}.png"

- """

- if not images:

- img_str += f"""

- - text: '{instance_prompt}'

- """

+

+ widget_dict = []

+ if images is not None:

+ for i, image in enumerate(images):

+ image.save(os.path.join(repo_folder, f"image_{i}.png"))

+ widget_dict.append(

+ {"text": validation_prompt if validation_prompt else " ", "output": {"url": f"image_{i}.png"}}

+ )

+ else:

+ widget_dict.append({"text": instance_prompt})

embeddings_filename = f"{repo_folder}_emb"

instance_prompt_webui = re.sub(r"", "", re.sub(r"", embeddings_filename, instance_prompt, count=1))

ti_keys = ", ".join(f'"{match}"' for match in re.findall(r"", instance_prompt))
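The hunk above replaces the hand-built `widget:` YAML string with a list of dictionaries. A minimal sketch of that branching, using an image count in place of PIL images so it runs without extra dependencies; the function name and the `num_images` parameter are hypothetical:

```python
import json
from typing import List, Optional


def build_widget(
    num_images: Optional[int],
    instance_prompt: str,
    validation_prompt: Optional[str] = None,
) -> List[dict]:
    """Mirror the new branching: one entry per image, else a single prompt-only entry."""
    widget = []
    if num_images is not None:
        for i in range(num_images):
            widget.append(
                {
                    "text": validation_prompt if validation_prompt else " ",
                    "output": {"url": f"image_{i}.png"},
                }
            )
    else:
        widget.append({"text": instance_prompt})
    return widget


with_images = build_widget(2, "a photo of TOK dog", validation_prompt="a TOK dog on the beach")
without_images = build_widget(None, "a photo of TOK dog")

print(json.dumps(with_images, indent=2))
print(json.dumps(without_images, indent=2))
```

Building structured data and letting a serializer emit it avoids the quoting and indentation problems that come with formatting YAML by hand.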

@@ -137,24 +136,7 @@ def save_model_card(

trigger_str += f"""

to trigger concept `{key}` → use `{tokens}` in your prompt \n

"""

-

- yaml = f"""---

-tags:

-- stable-diffusion

-- stable-diffusion-diffusers

-- diffusers-training

-- text-to-image

-- diffusers

-- {lora}

-- template:sd-lora

-{img_str}

-base_model: {base_model}

-instance_prompt: {instance_prompt}

-license: openrail++

----

-"""

-

- model_card = f"""

+ model_description = f"""

# SD1.5 LoRA DreamBooth - {repo_id}

", "", re.sub(r"<s\d+>", embeddings_filename, instance_prompt, count=1))

ti_keys = ", ".join(f'"{match}"' for match in re.findall(r"<s\d+>", instance_prompt))

@@ -169,23 +167,7 @@ def save_model_card(

to trigger concept `{key}` → use `{tokens}` in your prompt \n

"""

- yaml = f"""---

-tags:

-- stable-diffusion-xl

-- stable-diffusion-xl-diffusers

-- diffusers-training

-- text-to-image

-- diffusers

-- {lora}

-- template:sd-lora

-{img_str}

-base_model: {base_model}

-instance_prompt: {instance_prompt}

-license: openrail++

----

-"""

-

- model_card = f"""

+ model_description = f"""

# SDXL LoRA DreamBooth - {repo_id}

) | [One Step U-Net](#one-step-unet) | - | [Patrick von Platen](https://github.com/patrickvonplaten/) |

-| Stable Diffusion Interpolation | Interpolate the latent space of Stable Diffusion between different prompts/seeds | [Stable Diffusion Interpolation](#stable-diffusion-interpolation) | - | [Nate Raw](https://github.com/nateraw/) |

-| Stable Diffusion Mega | **One** Stable Diffusion Pipeline with all functionalities of [Text2Image](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion.py), [Image2Image](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion_img2img.py) and [Inpainting](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion_inpaint.py) | [Stable Diffusion Mega](#stable-diffusion-mega) | - | [Patrick von Platen](https://github.com/patrickvonplaten/) |

-| Long Prompt Weighting Stable Diffusion | **One** Stable Diffusion Pipeline without tokens length limit, and support parsing weighting in prompt. | [Long Prompt Weighting Stable Diffusion](#long-prompt-weighting-stable-diffusion) | - | [SkyTNT](https://github.com/SkyTNT) |

-| Speech to Image | Using automatic-speech-recognition to transcribe text and Stable Diffusion to generate images | [Speech to Image](#speech-to-image) | - | [Mikail Duzenli](https://github.com/MikailINTech)

-| Wild Card Stable Diffusion | Stable Diffusion Pipeline that supports prompts that contain wildcard terms (indicated by surrounding double underscores), with values instantiated randomly from a corresponding txt file or a dictionary of possible values | [Wildcard Stable Diffusion](#wildcard-stable-diffusion) | - | [Shyam Sudhakaran](https://github.com/shyamsn97) |

-| [Composable Stable Diffusion](https://energy-based-model.github.io/Compositional-Visual-Generation-with-Composable-Diffusion-Models/) | Stable Diffusion Pipeline that supports prompts that contain "|" in prompts (as an AND condition) and weights (separated by "|" as well) to positively / negatively weight prompts. | [Composable Stable Diffusion](#composable-stable-diffusion) | - | [Mark Rich](https://github.com/MarkRich) |

-| Seed Resizing Stable Diffusion | Stable Diffusion Pipeline that supports resizing an image and retaining the concepts of the 512 by 512 generation. | [Seed Resizing](#seed-resizing) | - | [Mark Rich](https://github.com/MarkRich) |

-| Imagic Stable Diffusion | Stable Diffusion Pipeline that enables writing a text prompt to edit an existing image | [Imagic Stable Diffusion](#imagic-stable-diffusion) | - | [Mark Rich](https://github.com/MarkRich) |

-| Multilingual Stable Diffusion | Stable Diffusion Pipeline that supports prompts in 50 different languages. | [Multilingual Stable Diffusion](#multilingual-stable-diffusion-pipeline) | - | [Juan Carlos Piñeros](https://github.com/juancopi81) |

-| GlueGen Stable Diffusion | Stable Diffusion Pipeline that supports prompts in different languages using GlueGen adapter. | [GlueGen Stable Diffusion](#gluegen-stable-diffusion-pipeline) | - | [Phạm Hồng Vinh](https://github.com/rootonchair) |

-| Image to Image Inpainting Stable Diffusion | Stable Diffusion Pipeline that enables the overlaying of two images and subsequent inpainting | [Image to Image Inpainting Stable Diffusion](#image-to-image-inpainting-stable-diffusion) | - | [Alex McKinney](https://github.com/vvvm23) |

-| Text Based Inpainting Stable Diffusion | Stable Diffusion Inpainting Pipeline that enables passing a text prompt to generate the mask for inpainting | [Text Based Inpainting Stable Diffusion](#text-based-inpainting-stable-diffusion) | - | [Dhruv Karan](https://github.com/unography) |

+| Stable Diffusion Interpolation | Interpolate the latent space of Stable Diffusion between different prompts/seeds | [Stable Diffusion Interpolation](#stable-diffusion-interpolation) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_diffusion_interpolation.ipynb) | [Nate Raw](https://github.com/nateraw/) |

+| Stable Diffusion Mega | **One** Stable Diffusion Pipeline with all functionalities of [Text2Image](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion.py), [Image2Image](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion_img2img.py) and [Inpainting](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/pipeline_stable_diffusion_inpaint.py) | [Stable Diffusion Mega](#stable-diffusion-mega) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_diffusion_mega.ipynb) | [Patrick von Platen](https://github.com/patrickvonplaten/) |

+| Long Prompt Weighting Stable Diffusion | **One** Stable Diffusion Pipeline without tokens length limit, and support parsing weighting in prompt. | [Long Prompt Weighting Stable Diffusion](#long-prompt-weighting-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/long_prompt_weighting_stable_diffusion.ipynb) | [SkyTNT](https://github.com/SkyTNT) |

+| Speech to Image | Using automatic-speech-recognition to transcribe text and Stable Diffusion to generate images | [Speech to Image](#speech-to-image) |[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/speech_to_image.ipynb) | [Mikail Duzenli](https://github.com/MikailINTech)

+| Wild Card Stable Diffusion | Stable Diffusion Pipeline that supports prompts that contain wildcard terms (indicated by surrounding double underscores), with values instantiated randomly from a corresponding txt file or a dictionary of possible values | [Wildcard Stable Diffusion](#wildcard-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/wildcard_stable_diffusion.ipynb) | [Shyam Sudhakaran](https://github.com/shyamsn97) |

+| [Composable Stable Diffusion](https://energy-based-model.github.io/Compositional-Visual-Generation-with-Composable-Diffusion-Models/) | Stable Diffusion Pipeline that supports prompts that contain "|" in prompts (as an AND condition) and weights (separated by "|" as well) to positively / negatively weight prompts. | [Composable Stable Diffusion](#composable-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/composable_stable_diffusion.ipynb) | [Mark Rich](https://github.com/MarkRich) |

+| Seed Resizing Stable Diffusion | Stable Diffusion Pipeline that supports resizing an image and retaining the concepts of the 512 by 512 generation. | [Seed Resizing](#seed-resizing) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/seed_resizing.ipynb) | [Mark Rich](https://github.com/MarkRich) |

+| Imagic Stable Diffusion | Stable Diffusion Pipeline that enables writing a text prompt to edit an existing image | [Imagic Stable Diffusion](#imagic-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/imagic_stable_diffusion.ipynb) | [Mark Rich](https://github.com/MarkRich) |

+| Multilingual Stable Diffusion | Stable Diffusion Pipeline that supports prompts in 50 different languages. | [Multilingual Stable Diffusion](#multilingual-stable-diffusion-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/multilingual_stable_diffusion.ipynb) | [Juan Carlos Piñeros](https://github.com/juancopi81) |

+| GlueGen Stable Diffusion | Stable Diffusion Pipeline that supports prompts in different languages using GlueGen adapter. | [GlueGen Stable Diffusion](#gluegen-stable-diffusion-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/gluegen_stable_diffusion.ipynb) | [Phạm Hồng Vinh](https://github.com/rootonchair) |

+| Image to Image Inpainting Stable Diffusion | Stable Diffusion Pipeline that enables the overlaying of two images and subsequent inpainting | [Image to Image Inpainting Stable Diffusion](#image-to-image-inpainting-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/image_to_image_inpainting_stable_diffusion.ipynb) | [Alex McKinney](https://github.com/vvvm23) |

+| Text Based Inpainting Stable Diffusion | Stable Diffusion Inpainting Pipeline that enables passing a text prompt to generate the mask for inpainting | [Text Based Inpainting Stable Diffusion](#text-based-inpainting-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/text_based_inpainting_stable_dffusion.ipynb) | [Dhruv Karan](https://github.com/unography) |

| Bit Diffusion | Diffusion on discrete data | [Bit Diffusion](#bit-diffusion) | - | [Stuti R.](https://github.com/kingstut) |

| K-Diffusion Stable Diffusion | Run Stable Diffusion with any of [K-Diffusion's samplers](https://github.com/crowsonkb/k-diffusion/blob/master/k_diffusion/sampling.py) | [Stable Diffusion with K Diffusion](#stable-diffusion-with-k-diffusion) | - | [Patrick von Platen](https://github.com/patrickvonplaten/) |

| Checkpoint Merger Pipeline | Diffusion Pipeline that enables merging of saved model checkpoints | [Checkpoint Merger Pipeline](#checkpoint-merger-pipeline) | - | [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

-| Stable Diffusion v1.1-1.4 Comparison | Run all 4 model checkpoints for Stable Diffusion and compare their results together | [Stable Diffusion Comparison](#stable-diffusion-comparisons) | - | [Suvaditya Mukherjee](https://github.com/suvadityamuk) |

-| MagicMix | Diffusion Pipeline for semantic mixing of an image and a text prompt | [MagicMix](#magic-mix) | - | [Partho Das](https://github.com/daspartho) |

-| Stable UnCLIP | Diffusion Pipeline for combining prior model (generate clip image embedding from text, UnCLIPPipeline `"kakaobrain/karlo-v1-alpha"`) and decoder pipeline (decode clip image embedding to image, StableDiffusionImageVariationPipeline `"lambdalabs/sd-image-variations-diffusers"` ). | [Stable UnCLIP](#stable-unclip) | - | [Ray Wang](https://wrong.wang) |

-| UnCLIP Text Interpolation Pipeline | Diffusion Pipeline that allows passing two prompts and produces images while interpolating between the text-embeddings of the two prompts | [UnCLIP Text Interpolation Pipeline](#unclip-text-interpolation-pipeline) | - | [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

-| UnCLIP Image Interpolation Pipeline | Diffusion Pipeline that allows passing two images/image_embeddings and produces images while interpolating between their image-embeddings | [UnCLIP Image Interpolation Pipeline](#unclip-image-interpolation-pipeline) | - | [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

-| DDIM Noise Comparative Analysis Pipeline | Investigating how the diffusion models learn visual concepts from each noise level (which is a contribution of [P2 weighting (CVPR 2022)](https://arxiv.org/abs/2204.00227)) | [DDIM Noise Comparative Analysis Pipeline](#ddim-noise-comparative-analysis-pipeline) | - | [Aengus (Duc-Anh)](https://github.com/aengusng8) |

-| CLIP Guided Img2Img Stable Diffusion Pipeline | Doing CLIP guidance for image to image generation with Stable Diffusion | [CLIP Guided Img2Img Stable Diffusion](#clip-guided-img2img-stable-diffusion) | - | [Nipun Jindal](https://github.com/nipunjindal/) |

-| TensorRT Stable Diffusion Text to Image Pipeline | Accelerates the Stable Diffusion Text2Image Pipeline using TensorRT | [TensorRT Stable Diffusion Text to Image Pipeline](#tensorrt-text2image-stable-diffusion-pipeline) | - | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

-| EDICT Image Editing Pipeline | Diffusion pipeline for text-guided image editing | [EDICT Image Editing Pipeline](#edict-image-editing-pipeline) | - | [Joqsan Azocar](https://github.com/Joqsan) |

-| Stable Diffusion RePaint | Stable Diffusion pipeline using [RePaint](https://arxiv.org/abs/2201.09865) for inpainting. | [Stable Diffusion RePaint](#stable-diffusion-repaint ) | - | [Markus Pobitzer](https://github.com/Markus-Pobitzer) |

+| Stable Diffusion v1.1-1.4 Comparison | Run all 4 model checkpoints for Stable Diffusion and compare their results together | [Stable Diffusion Comparison](#stable-diffusion-comparisons) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_diffusion_comparison.ipynb) | [Suvaditya Mukherjee](https://github.com/suvadityamuk) |

+| MagicMix | Diffusion Pipeline for semantic mixing of an image and a text prompt | [MagicMix](#magic-mix) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/magic_mix.ipynb) | [Partho Das](https://github.com/daspartho) |

+| Stable UnCLIP | Diffusion Pipeline for combining prior model (generate clip image embedding from text, UnCLIPPipeline `"kakaobrain/karlo-v1-alpha"`) and decoder pipeline (decode clip image embedding to image, StableDiffusionImageVariationPipeline `"lambdalabs/sd-image-variations-diffusers"` ). | [Stable UnCLIP](#stable-unclip) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_unclip.ipynb) | [Ray Wang](https://wrong.wang) |

+| UnCLIP Text Interpolation Pipeline | Diffusion Pipeline that allows passing two prompts and produces images while interpolating between the text-embeddings of the two prompts | [UnCLIP Text Interpolation Pipeline](#unclip-text-interpolation-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/unclip_text_interpolation.ipynb)| [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

+| UnCLIP Image Interpolation Pipeline | Diffusion Pipeline that allows passing two images/image_embeddings and produces images while interpolating between their image-embeddings | [UnCLIP Image Interpolation Pipeline](#unclip-image-interpolation-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/unclip_image_interpolation.ipynb)| [Naga Sai Abhinay Devarinti](https://github.com/Abhinay1997/) |

+| DDIM Noise Comparative Analysis Pipeline | Investigating how the diffusion models learn visual concepts from each noise level (which is a contribution of [P2 weighting (CVPR 2022)](https://arxiv.org/abs/2204.00227)) | [DDIM Noise Comparative Analysis Pipeline](#ddim-noise-comparative-analysis-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/ddim_noise_comparative_analysis.ipynb)| [Aengus (Duc-Anh)](https://github.com/aengusng8) |

+| CLIP Guided Img2Img Stable Diffusion Pipeline | Doing CLIP guidance for image to image generation with Stable Diffusion | [CLIP Guided Img2Img Stable Diffusion](#clip-guided-img2img-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/clip_guided_img2img_stable_diffusion.ipynb) | [Nipun Jindal](https://github.com/nipunjindal/) |

+| TensorRT Stable Diffusion Text to Image Pipeline | Accelerates the Stable Diffusion Text2Image Pipeline using TensorRT | [TensorRT Stable Diffusion Text to Image Pipeline](#tensorrt-text2image-stable-diffusion-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/tensorrt_text2image_stable_diffusion_pipeline.ipynb) | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

+| EDICT Image Editing Pipeline | Diffusion pipeline for text-guided image editing | [EDICT Image Editing Pipeline](#edict-image-editing-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/edict_image_pipeline.ipynb) | [Joqsan Azocar](https://github.com/Joqsan) |

+| Stable Diffusion RePaint | Stable Diffusion pipeline using [RePaint](https://arxiv.org/abs/2201.09865) for inpainting. | [Stable Diffusion RePaint](#stable-diffusion-repaint )|[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_diffusion_repaint.ipynb)| [Markus Pobitzer](https://github.com/Markus-Pobitzer) |

| TensorRT Stable Diffusion Image to Image Pipeline | Accelerates the Stable Diffusion Image2Image Pipeline using TensorRT | [TensorRT Stable Diffusion Image to Image Pipeline](#tensorrt-image2image-stable-diffusion-pipeline) | - | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

| Stable Diffusion IPEX Pipeline | Accelerate Stable Diffusion inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [Stable Diffusion on IPEX](#stable-diffusion-on-ipex) | - | [Yingjie Han](https://github.com/yingjie-han/) |

-| CLIP Guided Images Mixing Stable Diffusion Pipeline | Сombine images using usual diffusion models. | [CLIP Guided Images Mixing Using Stable Diffusion](#clip-guided-images-mixing-with-stable-diffusion) | - | [Karachev Denis](https://github.com/TheDenk) |

+| CLIP Guided Images Mixing Stable Diffusion Pipeline | Сombine images using usual diffusion models. | [CLIP Guided Images Mixing Using Stable Diffusion](#clip-guided-images-mixing-with-stable-diffusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/clip_guided_images_mixing_with_stable_diffusion.ipynb) | [Karachev Denis](https://github.com/TheDenk) |

| TensorRT Stable Diffusion Inpainting Pipeline | Accelerates the Stable Diffusion Inpainting Pipeline using TensorRT | [TensorRT Stable Diffusion Inpainting Pipeline](#tensorrt-inpainting-stable-diffusion-pipeline) | - | [Asfiya Baig](https://github.com/asfiyab-nvidia) |

| IADB Pipeline | Implementation of [Iterative α-(de)Blending: a Minimalist Deterministic Diffusion Model](https://arxiv.org/abs/2305.03486) | [IADB Pipeline](#iadb-pipeline) | - | [Thomas Chambon](https://github.com/tchambon)

| Zero1to3 Pipeline | Implementation of [Zero-1-to-3: Zero-shot One Image to 3D Object](https://arxiv.org/abs/2303.11328) | [Zero1to3 Pipeline](#zero1to3-pipeline) | - | [Xin Kong](https://github.com/kxhit) |

| Stable Diffusion XL Long Weighted Prompt Pipeline | A pipeline that supports prompts and negative prompts of unlimited length, using A1111-style prompt weighting | [Stable Diffusion XL Long Weighted Prompt Pipeline](#stable-diffusion-xl-long-weighted-prompt-pipeline) | [](https://colab.research.google.com/drive/1LsqilswLR40XLLcp6XFOl5nKb_wOe26W?usp=sharing) | [Andrew Zhu](https://xhinker.medium.com/) |

-| FABRIC - Stable Diffusion with feedback Pipeline | pipeline supports feedback from liked and disliked images | [Stable Diffusion Fabric Pipeline](#stable-diffusion-fabric-pipeline) | - | [Shauray Singh](https://shauray8.github.io/about_shauray/) |

+| Stable Diffusion Mixture Tiling Pipeline SD 1.5 | A pipeline that generates cohesive images by integrating multiple diffusion processes, each focused on a specific image region and considering boundary effects for smooth blending | [Stable Diffusion Mixture Tiling Pipeline SD 1.5](#stable-diffusion-mixture-tiling-pipeline-sd-15) | [](https://huggingface.co/spaces/albarji/mixture-of-diffusers) | [Álvaro B Jiménez](https://github.com/albarji/) |

+| Stable Diffusion Mixture Canvas Pipeline SD 1.5 | A pipeline that generates cohesive images by integrating multiple diffusion processes, each focused on a specific image region and considering boundary effects for smooth blending. Works by defining a list of Text2Image region objects that detail the region of influence of each diffuser. | [Stable Diffusion Mixture Canvas Pipeline SD 1.5](#stable-diffusion-mixture-canvas-pipeline-sd-15) | [](https://huggingface.co/spaces/albarji/mixture-of-diffusers) | [Álvaro B Jiménez](https://github.com/albarji/) |

+| Stable Diffusion Mixture Tiling Pipeline SDXL | A pipeline that generates cohesive images by integrating multiple diffusion processes, each focused on a specific image region and considering boundary effects for smooth blending | [Stable Diffusion Mixture Tiling Pipeline SDXL](#stable-diffusion-mixture-tiling-pipeline-sdxl) | [](https://huggingface.co/spaces/elismasilva/mixture-of-diffusers-sdxl-tiling) | [Eliseu Silva](https://github.com/DEVAIEXP/) |

+| Stable Diffusion MoD ControlNet Tile SR Pipeline SDXL | This is an advanced pipeline that leverages ControlNet Tile and Mixture-of-Diffusers techniques, integrating tile diffusion directly into the latent space denoising process. Designed to overcome the limitations of conventional pixel-space tile processing, this pipeline delivers Super Resolution (SR) upscaling for higher-quality images, reduced processing time, and greater adaptability. | [Stable Diffusion MoD ControlNet Tile SR Pipeline SDXL](#stable-diffusion-mod-controlnet-tile-sr-pipeline-sdxl) | [](https://huggingface.co/spaces/elismasilva/mod-control-tile-upscaler-sdxl) | [Eliseu Silva](https://github.com/DEVAIEXP/) |

+| FABRIC - Stable Diffusion with feedback Pipeline | pipeline supports feedback from liked and disliked images | [Stable Diffusion Fabric Pipeline](#stable-diffusion-fabric-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/stable_diffusion_fabric.ipynb)| [Shauray Singh](https://shauray8.github.io/about_shauray/) |

| sketch inpaint - Inpainting with non-inpaint Stable Diffusion | sketch inpaint much like in automatic1111 | [Masked Im2Im Stable Diffusion Pipeline](#stable-diffusion-masked-im2im) | - | [Anatoly Belikov](https://github.com/noskill) |

| sketch inpaint xl - Inpainting with non-inpaint Stable Diffusion | sketch inpaint much like in automatic1111 | [Masked Im2Im Stable Diffusion XL Pipeline](#stable-diffusion-xl-masked-im2im) | - | [Anatoly Belikov](https://github.com/noskill) |

-| prompt-to-prompt | change parts of a prompt and retain image structure (see [paper page](https://prompt-to-prompt.github.io/)) | [Prompt2Prompt Pipeline](#prompt2prompt-pipeline) | - | [Umer H. Adil](https://twitter.com/UmerHAdil) |

+| prompt-to-prompt | change parts of a prompt and retain image structure (see [paper page](https://prompt-to-prompt.github.io/)) | [Prompt2Prompt Pipeline](#prompt2prompt-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/prompt_2_prompt_pipeline.ipynb) | [Umer H. Adil](https://twitter.com/UmerHAdil) |

| Latent Consistency Pipeline | Implementation of [Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference](https://arxiv.org/abs/2310.04378) | [Latent Consistency Pipeline](#latent-consistency-pipeline) | - | [Simian Luo](https://github.com/luosiallen) |

| Latent Consistency Img2img Pipeline | Img2img pipeline for Latent Consistency Models | [Latent Consistency Img2Img Pipeline](#latent-consistency-img2img-pipeline) | - | [Logan Zoellner](https://github.com/nagolinc) |

| Latent Consistency Interpolation Pipeline | Interpolate the latent space of Latent Consistency Models with multiple prompts | [Latent Consistency Interpolation Pipeline](#latent-consistency-interpolation-pipeline) | [](https://colab.research.google.com/drive/1pK3NrLWJSiJsBynLns1K1-IDTW9zbPvl?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

-| SDE Drag Pipeline | The pipeline supports drag editing of images using stochastic differential equations | [SDE Drag Pipeline](#sde-drag-pipeline) | - | [NieShen](https://github.com/NieShenRuc) [Fengqi Zhu](https://github.com/Monohydroxides) |

+| SDE Drag Pipeline | The pipeline supports drag editing of images using stochastic differential equations | [SDE Drag Pipeline](#sde-drag-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/sde_drag.ipynb) | [NieShen](https://github.com/NieShenRuc) [Fengqi Zhu](https://github.com/Monohydroxides) |

| Regional Prompting Pipeline | Assign multiple prompts for different regions | [Regional Prompting Pipeline](#regional-prompting-pipeline) | - | [hako-mikan](https://github.com/hako-mikan) |

| LDM3D-sr (LDM3D upscaler) | Upscale low resolution RGB and depth inputs to high resolution | [StableDiffusionUpscaleLDM3D Pipeline](https://github.com/estelleafl/diffusers/tree/ldm3d_upscaler_community/examples/community#stablediffusionupscaleldm3d-pipeline) | - | [Estelle Aflalo](https://github.com/estelleafl) |

| AnimateDiff ControlNet Pipeline | Combines AnimateDiff with precise motion control using ControlNets | [AnimateDiff ControlNet Pipeline](#animatediff-controlnet-pipeline) | [](https://colab.research.google.com/drive/1SKboYeGjEQmQPWoFC0aLYpBlYdHXkvAu?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) and [Edoardo Botta](https://github.com/EdoardoBotta) |

-| DemoFusion Pipeline | Implementation of [DemoFusion: Democratising High-Resolution Image Generation With No $$$](https://arxiv.org/abs/2311.16973) | [DemoFusion Pipeline](#demofusion) | - | [Ruoyi Du](https://github.com/RuoyiDu) |

-| Instaflow Pipeline | Implementation of [InstaFlow! One-Step Stable Diffusion with Rectified Flow](https://arxiv.org/abs/2309.06380) | [Instaflow Pipeline](#instaflow-pipeline) | - | [Ayush Mangal](https://github.com/ayushtues) |

+| DemoFusion Pipeline | Implementation of [DemoFusion: Democratising High-Resolution Image Generation With No $$$](https://arxiv.org/abs/2311.16973) | [DemoFusion Pipeline](#demofusion) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/demo_fusion.ipynb) | [Ruoyi Du](https://github.com/RuoyiDu) |

+| Instaflow Pipeline | Implementation of [InstaFlow! One-Step Stable Diffusion with Rectified Flow](https://arxiv.org/abs/2309.06380) | [Instaflow Pipeline](#instaflow-pipeline) | [Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/insta_flow.ipynb) | [Ayush Mangal](https://github.com/ayushtues) |

| Null-Text Inversion Pipeline | Implement [Null-text Inversion for Editing Real Images using Guided Diffusion Models](https://arxiv.org/abs/2211.09794) as a pipeline. | [Null-Text Inversion](https://github.com/google/prompt-to-prompt/) | - | [Junsheng Luan](https://github.com/Junsheng121) |

| Rerender A Video Pipeline | Implementation of [[SIGGRAPH Asia 2023] Rerender A Video: Zero-Shot Text-Guided Video-to-Video Translation](https://arxiv.org/abs/2306.07954) | [Rerender A Video Pipeline](#rerender-a-video) | - | [Yifan Zhou](https://github.com/SingleZombie) |

| StyleAligned Pipeline | Implementation of [Style Aligned Image Generation via Shared Attention](https://arxiv.org/abs/2312.02133) | [StyleAligned Pipeline](#stylealigned-pipeline) | [](https://drive.google.com/file/d/15X2E0jFPTajUIjS0FzX50OaHsCbP2lQ0/view?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

| AnimateDiff Image-To-Video Pipeline | Experimental Image-To-Video support for AnimateDiff (open to improvements) | [AnimateDiff Image To Video Pipeline](#animatediff-image-to-video-pipeline) | [](https://drive.google.com/file/d/1TvzCDPHhfFtdcJZe4RLloAwyoLKuttWK/view?usp=sharing) | [Aryan V S](https://github.com/a-r-r-o-w) |

-| IP Adapter FaceID Stable Diffusion | Stable Diffusion Pipeline that supports IP Adapter Face ID | [IP Adapter Face ID](#ip-adapter-face-id) | - | [Fabio Rigano](https://github.com/fabiorigano) |

+| IP Adapter FaceID Stable Diffusion | Stable Diffusion Pipeline that supports IP Adapter Face ID | [IP Adapter Face ID](#ip-adapter-face-id) |[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/ip_adapter_face_id.ipynb)| [Fabio Rigano](https://github.com/fabiorigano) |

| InstantID Pipeline | Stable Diffusion XL Pipeline that supports InstantID | [InstantID Pipeline](#instantid-pipeline) | [](https://huggingface.co/spaces/InstantX/InstantID) | [Haofan Wang](https://github.com/haofanwang) |

| UFOGen Scheduler | Scheduler for UFOGen Model (compatible with Stable Diffusion pipelines) | [UFOGen Scheduler](#ufogen-scheduler) | - | [dg845](https://github.com/dg845) |

| Stable Diffusion XL IPEX Pipeline | Accelerate Stable Diffusion XL inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [Stable Diffusion XL on IPEX](#stable-diffusion-xl-on-ipex) | - | [Dan Li](https://github.com/ustcuna/) |

| Stable Diffusion BoxDiff Pipeline | Training-free controlled generation with bounding boxes using [BoxDiff](https://github.com/showlab/BoxDiff) | [Stable Diffusion BoxDiff Pipeline](#stable-diffusion-boxdiff) | - | [Jingyang Zhang](https://github.com/zjysteven/) |

| FRESCO V2V Pipeline | Implementation of [[CVPR 2024] FRESCO: Spatial-Temporal Correspondence for Zero-Shot Video Translation](https://arxiv.org/abs/2403.12962) | [FRESCO V2V Pipeline](#fresco) | - | [Yifan Zhou](https://github.com/SingleZombie) |

| AnimateDiff IPEX Pipeline | Accelerate AnimateDiff inference pipeline with BF16/FP32 precision on Intel Xeon CPUs with [IPEX](https://github.com/intel/intel-extension-for-pytorch) | [AnimateDiff on IPEX](#animatediff-on-ipex) | - | [Dan Li](https://github.com/ustcuna/) |

+| PIXART-α Controlnet pipeline | Implementation of the controlnet model for pixart alpha and its diffusers pipeline | [PIXART-α Controlnet pipeline](#pixart-α-controlnet-pipeline) | - | [Raul Ciotescu](https://github.com/raulc0399/) |

| HunyuanDiT Differential Diffusion Pipeline | Applies [Differential Diffusion](https://github.com/exx8/differential-diffusion) to [HunyuanDiT](https://github.com/huggingface/diffusers/pull/8240). | [HunyuanDiT with Differential Diffusion](#hunyuandit-with-differential-diffusion) | [](https://colab.research.google.com/drive/1v44a5fpzyr4Ffr4v2XBQ7BajzG874N4P?usp=sharing) | [Monjoy Choudhury](https://github.com/MnCSSJ4x) |

| [🪆Matryoshka Diffusion Models](https://huggingface.co/papers/2310.15111) | A diffusion process that denoises inputs at multiple resolutions jointly and uses a NestedUNet architecture where features and parameters for small scale inputs are nested within those of the large scales. See [original codebase](https://github.com/apple/ml-mdm). | [🪆Matryoshka Diffusion Models](#matryoshka-diffusion-models) | [](https://huggingface.co/spaces/pcuenq/mdm) [](https://colab.research.google.com/gist/tolgacangoz/1f54875fc7aeaabcf284ebde64820966/matryoshka_hf.ipynb) | [M. Tolga Cangöz](https://github.com/tolgacangoz) |

-

+| Stable Diffusion XL Attentive Eraser Pipeline |[[AAAI2025 Oral] Attentive Eraser](https://github.com/Anonym0u3/AttentiveEraser) is a novel tuning-free method that enhances object removal capabilities in pre-trained diffusion models.|[Stable Diffusion XL Attentive Eraser Pipeline](#stable-diffusion-xl-attentive-eraser-pipeline)|-|[Wenhao Sun](https://github.com/Anonym0u3) and [Benlei Cui](https://github.com/Benny079)|

+| Perturbed-Attention Guidance |StableDiffusionPAGPipeline is a modification of StableDiffusionPipeline to support Perturbed-Attention Guidance (PAG).|[Perturbed-Attention Guidance](#perturbed-attention-guidance)|[Notebook](https://github.com/huggingface/notebooks/blob/main/diffusers/perturbed_attention_guidance.ipynb)|[Hyoungwon Cho](https://github.com/HyoungwonCho)|

+| CogVideoX DDIM Inversion Pipeline | Implementation of DDIM inversion and guided attention-based editing denoising process on CogVideoX. | [CogVideoX DDIM Inversion Pipeline](#cogvideox-ddim-inversion-pipeline) | - | [LittleNyima](https://github.com/LittleNyima) |

+| FaithDiff Stable Diffusion XL Pipeline | Implementation of [(CVPR 2025) FaithDiff: Unleashing Diffusion Priors for Faithful Image Super-resolution](https://arxiv.org/abs/2411.18824) - FaithDiff is a faithful image super-resolution method that leverages latent diffusion models by actively adapting the diffusion prior and jointly fine-tuning its components (encoder and diffusion model) with an alignment module to ensure high fidelity and structural consistency. | [FaithDiff Stable Diffusion XL Pipeline](#faithdiff-stable-diffusion-xl-pipeline) | [](https://huggingface.co/jychen9811/FaithDiff) | [Junyang Chen, Jinshan Pan, Jiangxin Dong, IMAG Lab, (Adapted by Eliseu Silva)](https://github.com/JyChen9811/FaithDiff) |

To load a custom pipeline, pass the `custom_pipeline` argument to `DiffusionPipeline`, set to the name of one of the files in `diffusers/examples/community`. Feel free to send a PR with your own pipelines; we will merge them quickly.

```py

@@ -84,6 +94,210 @@ pipe = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion

## Example usages

+### Spatiotemporal Skip Guidance

+

+**Junha Hyung\*, Kinam Kim\*, Susung Hong, Min-Jung Kim, Jaegul Choo**

+

+**KAIST AI, University of Washington**

+

+[*Spatiotemporal Skip Guidance (STG) for Enhanced Video Diffusion Sampling*](https://arxiv.org/abs/2411.18664) (CVPR 2025) is a simple training-free sampling guidance method for enhancing transformer-based video diffusion models. STG employs an implicit weak model via self-perturbation, avoiding the need for external models or additional training. By selectively skipping spatiotemporal layers, STG produces an aligned, degraded version of the original model to boost sample quality without compromising diversity or dynamic degree.

+

+The following video shows STG applied to Mochi.

+

+

+https://github.com/user-attachments/assets/148adb59-da61-4c50-9dfa-425dcb5c23b3

+

+More examples and information can be found on the [GitHub repository](https://github.com/junhahyung/STGuidance) and the [Project website](https://junhahyung.github.io/STGuidance/).
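The guidance idea described above can be sketched in a few lines. This is a minimal illustration, not the pipeline's code: it assumes the common formulation in which the STG term is added on top of classifier-free guidance, and the function name `combine_guidance` and all input values are hypothetical. It also shows why the usage example notes that `stg_scale = 0.0` falls back to plain CFG.

```python
def combine_guidance(eps_uncond, eps_cond, eps_perturbed, cfg_scale, stg_scale):
    """Combine three noise predictions element-wise (illustrative sketch only).

    eps_uncond:    prediction without the text prompt
    eps_cond:      prediction with the text prompt
    eps_perturbed: prediction with the prompt, but with selected layers skipped
    """
    return [
        u + cfg_scale * (c - u) + stg_scale * (c - p)
        for u, c, p in zip(eps_uncond, eps_cond, eps_perturbed)
    ]


# Toy values standing in for model outputs (assumed, for illustration).
eps_uncond = [0.0, 1.0]
eps_cond = [1.0, 2.0]
eps_perturbed = [0.5, 2.5]

with_stg = combine_guidance(eps_uncond, eps_cond, eps_perturbed, cfg_scale=2.0, stg_scale=1.0)
cfg_only = combine_guidance(eps_uncond, eps_cond, eps_perturbed, cfg_scale=2.0, stg_scale=0.0)

print("CFG + STG:", with_stg)
print("CFG only: ", cfg_only)
```

The STG term pushes the result away from the prediction of the degraded (layer-skipped) model, in the same way the CFG term pushes it away from the unconditional prediction.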

+

+#### Usage example

+```python

+import torch

+from pipeline_stg_mochi import MochiSTGPipeline

+from diffusers.utils import export_to_video

+

+# Load the pipeline

+pipe = MochiSTGPipeline.from_pretrained("genmo/mochi-1-preview", variant="bf16", torch_dtype=torch.bfloat16)

+

+# Move the pipeline to the GPU

+pipe = pipe.to("cuda")

+

+#--------Option--------#

+prompt = "A close-up of a beautiful woman's face with colored powder exploding around her, creating an abstract splash of vibrant hues, realistic style."

+stg_applied_layers_idx = [34]

+stg_mode = "STG"

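+stg_scale = 1.0 # 0.0 for CFG

+do_rescaling = False # assumed value: the original snippet passes this to the pipeline without defining it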

+#----------------------#

+

+# Generate video frames

+frames = pipe(

+ prompt,

+ height=480,

+ width=480,

+ num_frames=81,

+ stg_applied_layers_idx=stg_applied_layers_idx,

+ stg_scale=stg_scale,

+ generator = torch.Generator().manual_seed(42),

+ do_rescaling=do_rescaling,

+).frames[0]

+

+export_to_video(frames, "output.mp4", fps=30)

+```

+

+### Adaptive Mask Inpainting

+

+**Hyeonwoo Kim\*, Sookwan Han\*, Patrick Kwon, Hanbyul Joo**

+

+**Seoul National University, Naver Webtoon**

+

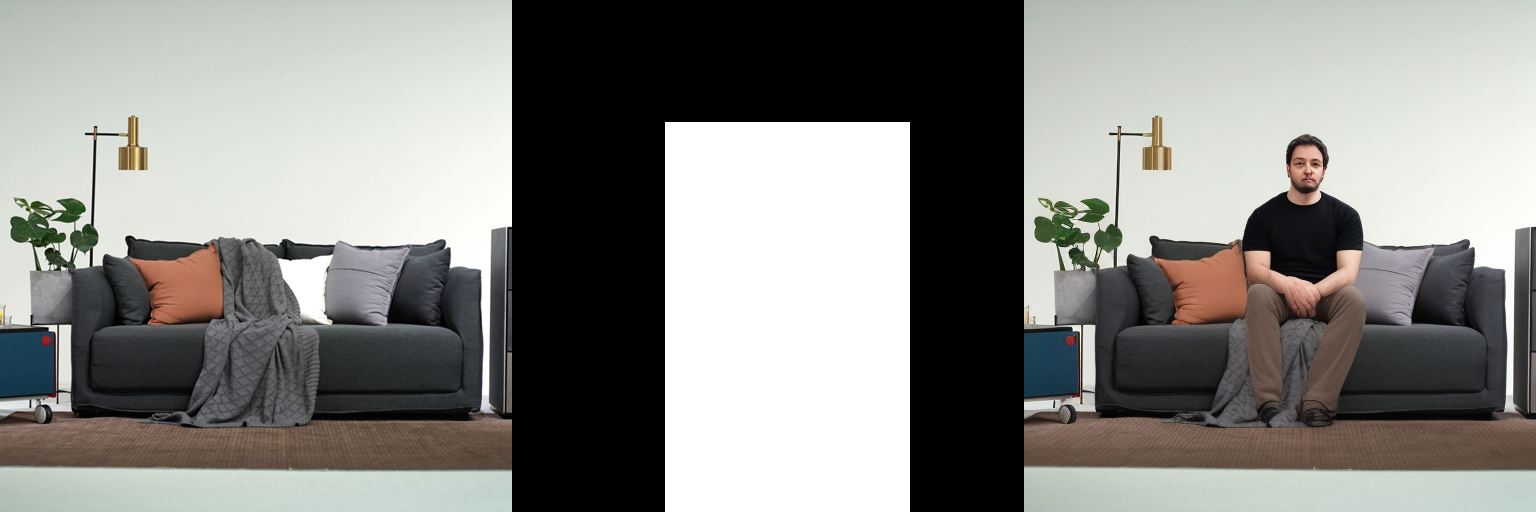

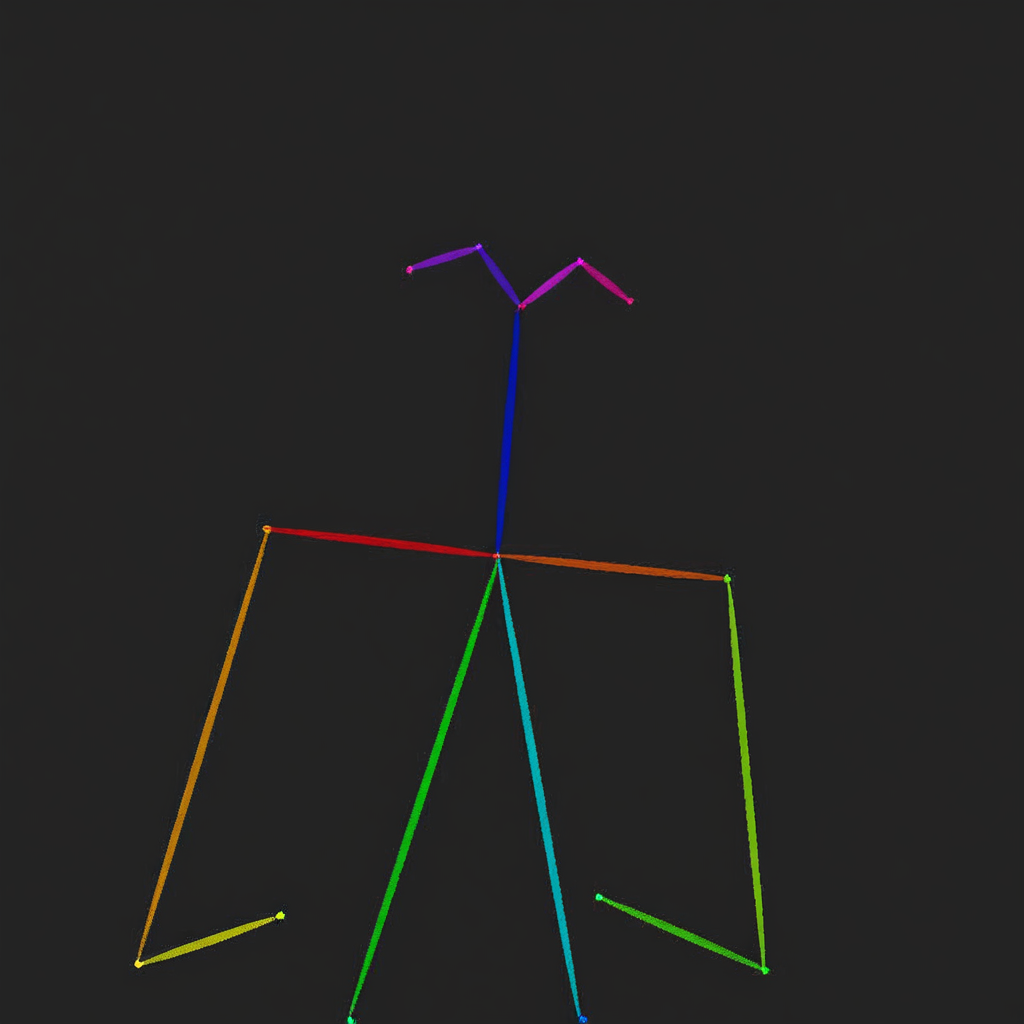

+Adaptive Mask Inpainting, presented in the ECCV'24 oral paper [*Beyond the Contact: Discovering Comprehensive Affordance for 3D Objects from Pre-trained 2D Diffusion Models*](https://snuvclab.github.io/coma), is an algorithm designed to insert humans into scene images without altering the background. Traditional inpainting methods often fail to preserve object geometry and details within the masked region, leading to false affordances. Adaptive Mask Inpainting addresses this issue by progressively specifying the inpainting region over diffusion timesteps, ensuring that the inserted human integrates seamlessly with the existing scene.

+

+Here is the demonstration of Adaptive Mask Inpainting:

+

+

+


+

+

+

+

+

+You can find additional information about Adaptive Mask Inpainting in the [paper](https://arxiv.org/pdf/2401.12978) or on the [project website](https://snuvclab.github.io/coma).

+

+#### Usage example

+First, clone the diffusers GitHub repository and run the following commands to set up the environment.

+```Shell

+git clone https://github.com/huggingface/diffusers.git

+cd diffusers

+

+conda create --name ami python=3.9 -y

+conda activate ami

+

+conda install pytorch==1.10.1 torchvision==0.11.2 torchaudio==0.10.1 cudatoolkit=11.3 -c pytorch -c conda-forge -y

+python -m pip install detectron2==0.6 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu113/torch1.10/index.html

+pip install easydict

+pip install diffusers==0.20.2 accelerate safetensors transformers

+pip install setuptools==59.5.0

+pip install opencv-python

+pip install numpy==1.24.1

+```

+Then, run the code below from the `diffusers` directory.

+```python

+import numpy as np

+import torch

+from PIL import Image

+

+from diffusers import DDIMScheduler

+from diffusers import DiffusionPipeline

+from diffusers.utils import load_image

+

+from examples.community.adaptive_mask_inpainting import download_file, AdaptiveMaskInpaintPipeline, AMI_INSTALL_MESSAGE

+

+print(AMI_INSTALL_MESSAGE)

+

+from easydict import EasyDict

+

+

+

+if __name__ == "__main__":

+ """

+ Download Necessary Files

+ """

+ download_file(

+ url = "https://huggingface.co/datasets/jellyheadnadrew/adaptive-mask-inpainting-test-images/resolve/main/model_final_edd263.pkl?download=true",

+ output_file = "model_final_edd263.pkl",

+ exist_ok=True,

+ )

+ download_file(

+ url = "https://huggingface.co/datasets/jellyheadnadrew/adaptive-mask-inpainting-test-images/resolve/main/pointrend_rcnn_R_50_FPN_3x_coco.yaml?download=true",

+ output_file = "pointrend_rcnn_R_50_FPN_3x_coco.yaml",

+ exist_ok=True,

+ )

+ download_file(

+ url = "https://huggingface.co/datasets/jellyheadnadrew/adaptive-mask-inpainting-test-images/resolve/main/input_img.png?download=true",

+ output_file = "input_img.png",

+ exist_ok=True,

+ )

+ download_file(

+ url = "https://huggingface.co/datasets/jellyheadnadrew/adaptive-mask-inpainting-test-images/resolve/main/input_mask.png?download=true",

+ output_file = "input_mask.png",

+ exist_ok=True,

+ )

+ download_file(

+ url = "https://huggingface.co/datasets/jellyheadnadrew/adaptive-mask-inpainting-test-images/resolve/main/Base-PointRend-RCNN-FPN.yaml?download=true",

+ output_file = "Base-PointRend-RCNN-FPN.yaml",

+ exist_ok=True,

+ )

+ download_file(

+ url = "https://huggingface.co/datasets/jellyheadnadrew/adaptive-mask-inpainting-test-images/resolve/main/Base-RCNN-FPN.yaml?download=true",

+ output_file = "Base-RCNN-FPN.yaml",

+ exist_ok=True,

+ )

+

+ """

+ Prepare Adaptive Mask Inpainting Pipeline

+ """

+ # device

+ device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")

+ num_steps = 50

+

+ # Scheduler

+ scheduler = DDIMScheduler(

+ beta_start=0.00085,

+ beta_end=0.012,

+ beta_schedule="scaled_linear",

+ clip_sample=False,

+ set_alpha_to_one=False

+ )

+ scheduler.set_timesteps(num_inference_steps=num_steps)

+

+ ## load models as pipelines

+ pipeline = AdaptiveMaskInpaintPipeline.from_pretrained(

+ "Uminosachi/realisticVisionV51_v51VAE-inpainting",

+ scheduler=scheduler,

+ torch_dtype=torch.float16,

+ requires_safety_checker=False

+ ).to(device)

+

+ ## disable safety checker

+ enable_safety_checker = False

+ if not enable_safety_checker:

+     pipeline.safety_checker = None

+

+ """

+ Run Adaptive Mask Inpainting

+ """

+ default_mask_image = Image.open("./input_mask.png").convert("L")

+ init_image = Image.open("./input_img.png").convert("RGB")

+

+

+ seed = 59

+ generator = torch.Generator(device=device)

+ generator.manual_seed(seed)

+

+ image = pipeline(

+ prompt="a man sitting on a couch",

+ negative_prompt="worst quality, normal quality, low quality, bad anatomy, artifacts, blurry, cropped, watermark, greyscale, nsfw",

+ image=init_image,

+ default_mask_image=default_mask_image,

+ guidance_scale=11.0,

+ strength=0.98,

+ use_adaptive_mask=True,

+ generator=generator,

+ enforce_full_mask_ratio=0.0,

+ visualization_save_dir="./ECCV2024_adaptive_mask_inpainting_demo", # DON'T CHANGE THIS!!!

+ human_detection_thres=0.015,

+ ).images[0]

+

+

+ image.save("final_img.png")

+```

+#### [Troubleshooting]

+

+If you run into an error `cannot import name 'cached_download' from 'huggingface_hub'` (issue [1851](https://github.com/easydiffusion/easydiffusion/issues/1851)), remove `cached_download` from the import line in the file `diffusers/utils/dynamic_modules_utils.py`.

+

+For example, edit the import line in `.../env/lib/python3.8/site-packages/diffusers/utils/dynamic_modules_utils.py`.
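Before editing the installed file, it can help to confirm that the missing name is really the cause. The sketch below is an optional check, not part of the pipeline: the helper `has_cached_download` is a hypothetical name, and it only tests whether the installed `huggingface_hub` package still exposes `cached_download`.

```python
import importlib


def has_cached_download():
    """Return True if the installed huggingface_hub still exports cached_download."""
    try:
        hub = importlib.import_module("huggingface_hub")
    except ImportError:
        # huggingface_hub is not installed in this environment
        return False
    return hasattr(hub, "cached_download")


if has_cached_download():
    print("cached_download is available; this import error should not occur.")
else:
    print("cached_download is missing; apply the import-line edit described above.")
```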

+

+

### Flux with CFG

Learn more about Flux [here](https://blackforestlabs.ai/announcing-black-forest-labs/). Since Flux doesn't use CFG, this implementation provides one, inspired by the [PuLID Flux adaptation](https://github.com/ToTheBeginning/PuLID/blob/main/docs/pulid_for_flux.md).

@@ -94,24 +308,30 @@ Example usage:

from diffusers import DiffusionPipeline

import torch

+model_name = "black-forest-labs/FLUX.1-dev"

+prompt = "a watercolor painting of a unicorn"

+negative_prompt = "pink"

+

+# Load the diffusion pipeline

pipeline = DiffusionPipeline.from_pretrained(

- "black-forest-labs/FLUX.1-dev",

+ model_name,

torch_dtype=torch.bfloat16,

custom_pipeline="pipeline_flux_with_cfg"

)

pipeline.enable_model_cpu_offload()

-prompt = "a watercolor painting of a unicorn"

-negative_prompt = "pink"

+# Generate the image

img = pipeline(

prompt=prompt,

negative_prompt=negative_prompt,

true_cfg=1.5,

guidance_scale=3.5,

- num_images_per_prompt=1,

generator=torch.manual_seed(0)

).images[0]

+

+# Save the generated image

img.save("cfg_flux.png")

+print("Image generated and saved successfully.")

```

### Differential Diffusion

@@ -684,6 +904,8 @@ out = pipe(

wildcard_files=["object.txt", "animal.txt"],

num_prompt_samples=1

)

+out.images[0].save("image.png")

+torch.cuda.empty_cache()

```

### Composable Stable diffusion

@@ -732,6 +954,7 @@ for i in range(args.num_images):

images.append(th.from_numpy(np.array(image)).permute(2, 0, 1) / 255.)

grid = tvu.make_grid(th.stack(images, dim=0), nrow=4, padding=0)

tvu.save_image(grid, f'{prompt}_{args.weights}' + '.png')

+print("Image saved successfully!")

```

### Imagic Stable Diffusion

@@ -782,10 +1005,15 @@ image.save('./imagic/imagic_image_alpha_2.png')

Test seed resizing. First, generate a 512 by 512 image, then generate an image with the same seed at 512 by 592 using seed resizing. Finally, generate a 512 by 592 image using the original Stable Diffusion pipeline.

```python

+import os

import torch as th

import numpy as np

from diffusers import DiffusionPipeline

+# Ensure the save directory exists or create it

+save_dir = './seed_resize/'

+os.makedirs(save_dir, exist_ok=True)

+

has_cuda = th.cuda.is_available()

device = th.device('cpu' if not has_cuda else 'cuda')

@@ -799,7 +1027,6 @@ def dummy(images, **kwargs):

pipe.safety_checker = dummy

-

images = []

th.manual_seed(0)