From 2939ddb8e0834b492155e195eb3272ae5c73ea02 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Wed, 24 Jul 2024 18:33:25 +0200

Subject: [PATCH 01/22] Create zero-shot-vqa

This is the blogpost about trying VLM for zero-shot VQA on Docmatix

---

zero-shot-vqa | 107 ++++++++++++++++++++++++++++++++++++++++++++++++++

1 file changed, 107 insertions(+)

create mode 100644 zero-shot-vqa

diff --git a/zero-shot-vqa b/zero-shot-vqa

new file mode 100644

index 0000000000..5a8db1101f

--- /dev/null

+++ b/zero-shot-vqa

@@ -0,0 +1,107 @@

+# LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?

+

+

+

+

+

+

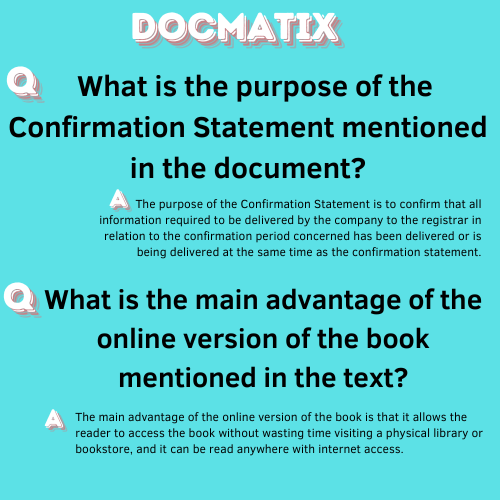

+ Figure 1: t-SNE visualization of Zero-Shot Generated and Reference Answers from Docmatix dataset

+

+

+## Method

+

+[Docmatix](https://huggingface.co/blog/docmatix) is the largest synthetic DocVQA dataset, generated from the curated document dataset [PDFA](https://huggingface.co/datasets/pixparse/pdfa-eng-wds). It is 100x larger than previously available datasets. Its human-curated counterpart is DocVQA, which serves as an evaluation benchmark for VQA models for document understanding. In this post, we use **a subset of Docmatix** consisting of around 1,700 train and 200 test samples, which can be downloaded here [FIXME: add the link to the dataset].

+

+Although the content of the question and answer pairs in Docmatix and DocVQA is similar, their styles differ significantly. Traditional metrics like CIDER, ANLS, and BLEU can be overly restrictive for zero-shot evaluation in this context. Motivated by the similarity of the embeddings observed in t-SNE (Figure 1), we decided to use a different evaluation metric. In this post, we consider the LAVE metric to better assess generalization on this unseen but semantically similar dataset.

+

+For our evaluation, we chose [MPLUGDocOwl1.5](https://arxiv.org/pdf/2403.12895) as a baseline model. This model achieves an 84% ANLS score on the test subset of the original DocVQA dataset. We then ran a zero-shot generation on a subset of Docmatix, consisting of 200 images. We used [Llama-2-Chat-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) for rating the answers.

+

+## About LAVE

+

+We followed the procedure outlined in the [paper](https://arxiv.org/html/2310.02567v2). The VQA evaluation is framed as an answer-rating task suitable for in-context learning with LLMs. We used a rating scale from 1 to 3 to account for ambiguous questions or incomplete answers. The prompt included a task description, several demonstrations of input/output, and the input for a test example.

+

+We structured our task description and included the instruction **"Give the rationale before rating"** so that the model justifies the rating it assigns. Each demonstration comprised a question, a set of reference answers, the candidate answer, the answer rating, and an explanation for the rating. We also included the instruction **"Provide only one rating"** to avoid sentence-by-sentence analysis, which sometimes resulted in several ratings.

+

+```py

+task_description = """You are given a question, a set of gold-standard reference answers written by

+experts, and a candidate answer. Please rate the accuracy of the candidate answer for the question

+considering the reference answers. Use a scale of 1-3, with 1 indicating an incorrect or irrelevant

+answer, 2 indicating an ambiguous or incomplete answer, and 3 indicating a correct answer.

+Give the rationale before rating. Provide only one rating.

+THIS IS VERY IMPORTANT:

+A binary question should only be answered with 'yes' or 'no',

+otherwise the candidate answer is incorrect."""

+

+demonstrations = [

+ {

+ "question": "What's the weather like?",

+ "reference_answer": ["sunny", "clear", "bright", "sunny", "sunny"],

+ "generated_answer": "cloudy"

+ }

+]

+```

+

+#### Scoring Function

+

+Given the LLM’s generated text for the test example, we extracted the rating from the last character (either 1, 2, or 3) and mapped it to a score in the range [0, 1]: $$ s = \frac{r - 1}{2} $$

+

+#### Table of Results

+

+The results of our evaluation are summarized in the table below:

+

+

+

+| Metric | CIDER | BLEU | ANLS | LAVE |
+|--------|-------|------|------|------|
+| Score | 0.1411 | 0.0032 | 0.002 | 0.58 |

+

+

+

+

+## Qualitative Examples

+

+

+

+

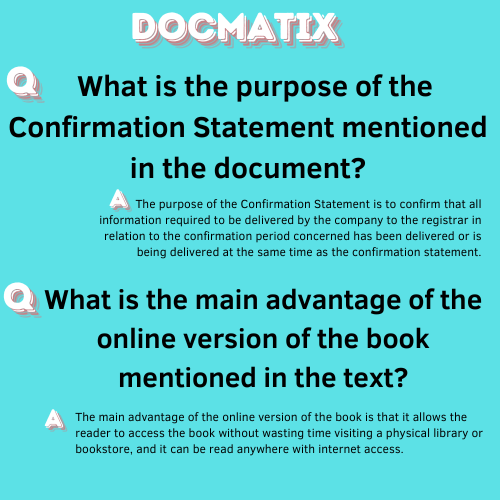

+ Figure 2: Llama rating and rationale.

+

+

+

+

+

+ Figure 3: Llama rating and rationale.

+

+

+

+## Are we too strict in evaluating VQA systems and do we need finetuning?

+

+We have approximately 50% accuracy when using LLMs to evaluate responses, indicating that answers can be correct despite not adhering to a strict format. This suggests that our current evaluation metrics may be too rigid. It’s important to note that this is not a comprehensive research paper, and more ablation studies are needed to fully understand the effectiveness of different metrics on the evaluation of zero-shot performance on synthetic dataset. We hope this work serves as a starting point to broaden the current research focus on improving the evaluation of zero-shot vision-language models within the context of synthetic datasets and to explore more efficient approaches beyond prompt learning.

+

+## References

+

+[FIXME: add bibtex refs]

From 16c384572a6730f6523859ee500da430f8e57f36 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Wed, 24 Jul 2024 18:34:59 +0200

Subject: [PATCH 02/22] Delete zero-shot-vqa

---

zero-shot-vqa | 107 --------------------------------------------------

1 file changed, 107 deletions(-)

delete mode 100644 zero-shot-vqa

diff --git a/zero-shot-vqa b/zero-shot-vqa

deleted file mode 100644

index 5a8db1101f..0000000000

--- a/zero-shot-vqa

+++ /dev/null

@@ -1,107 +0,0 @@

-# LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?

-

-

-

-

-

-

- Figure 1: t-SNE visualization of Zero-Shot Generated and Reference Answers from Docmatix dataset

-

-

-## Method

-

-[Docmatix](https://huggingface.co/blog/docmatix) is the largest synthetic DocVQA dataset, generated from the curated document dataset [PDFA](https://huggingface.co/datasets/pixparse/pdfa-eng-wds). It is 100x larger than previously available datasets. Its human-curated counterpart is DocVQA, which serves as an evaluation benchmark for VQA models for document understanding. In this post, we use **a subset of Docmatix** consisting of around 1,700 train and 200 test samples, which can be downloaded here [FIXME: add the link to the dataset].

-

-Although the content of the question and answer pairs in Docmatix and DocVQA is similar, their styles differ significantly. Traditional metrics like CIDER, ANLS, and BLEU can be overly restrictive for zero-shot evaluation in this context. Motivated by the similarity of the embeddings observed in t-SNE (Figure 1), we decided to use a different evaluation metric. In this post, we consider the LAVE metric to better assess generalization on this unseen but semantically similar dataset.

-

-For our evaluation, we chose [MPLUGDocOwl1.5](https://arxiv.org/pdf/2403.12895) as a baseline model. This model achieves an 84% ANLS score on the test subset of the original DocVQA dataset. We then ran a zero-shot generation on a subset of Docmatix, consisting of 200 images. We used [Llama-2-Chat-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) for rating the answers.

-

-## About LAVE

-

-We followed the procedure outlined in the [paper](https://arxiv.org/html/2310.02567v2). The VQA evaluation is framed as an answer-rating task suitable for in-context learning with LLMs. We used a rating scale from 1 to 3 to account for ambiguous questions or incomplete answers. The prompt included a task description, several demonstrations of input/output, and the input for a test example.

-

-We structured our task description and included the instruction **"Give the rationale before rating"** so that the model justifies the rating it assigns. Each demonstration comprised a question, a set of reference answers, the candidate answer, the answer rating, and an explanation for the rating. We also included the instruction **"Provide only one rating"** to avoid sentence-by-sentence analysis, which sometimes resulted in several ratings.

-

-```py

-task_description = """You are given a question, a set of gold-standard reference answers written by

-experts, and a candidate answer. Please rate the accuracy of the candidate answer for the question

-considering the reference answers. Use a scale of 1-3, with 1 indicating an incorrect or irrelevant

-answer, 2 indicating an ambiguous or incomplete answer, and 3 indicating a correct answer.

-Give the rationale before rating. Provide only one rating.

-THIS IS VERY IMPORTANT:

-A binary question should only be answered with 'yes' or 'no',

-otherwise the candidate answer is incorrect."""

-

-demonstrations = [

- {

- "question": "What's the weather like?",

- "reference_answer": ["sunny", "clear", "bright", "sunny", "sunny"],

- "generated_answer": "cloudy"

- }

-]

-```

-

-#### Scoring Function

-

-Given the LLM’s generated text for the test example, we extracted the rating from the last character (either 1, 2, or 3) and mapped it to a score in the range [0, 1]: $$ s = \frac{r - 1}{2} $$

-

-#### Table of Results

-

-The results of our evaluation are summarized in the table below:

-

-

-

-| Metric | CIDER | BLEU | ANLS | LAVE |
-|--------|-------|------|------|------|
-| Score | 0.1411 | 0.0032 | 0.002 | 0.58 |

-

-

-

-

-## Qualitative Examples

-

-

-

-

- Figure 2: Llama rating and rationale.

-

-

-

-

-

- Figure 3: Llama rating and rationale.

-

-

-

-## Are we too strict in evaluating VQA systems and do we need finetuning?

-

-We have approximately 50% accuracy when using LLMs to evaluate responses, indicating that answers can be correct despite not adhering to a strict format. This suggests that our current evaluation metrics may be too rigid. It’s important to note that this is not a comprehensive research paper, and more ablation studies are needed to fully understand the effectiveness of different metrics on the evaluation of zero-shot performance on synthetic dataset. We hope this work serves as a starting point to broaden the current research focus on improving the evaluation of zero-shot vision-language models within the context of synthetic datasets and to explore more efficient approaches beyond prompt learning.

-

-## References

-

-[FIXME: add bibtex refs]

From 964c55447c2271ae65043b8fd81a8f3ac504fca5 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Wed, 24 Jul 2024 18:35:40 +0200

Subject: [PATCH 03/22] Create zero-shot-vqa.md

---

zero-shot-vqa.md | 107 +++++++++++++++++++++++++++++++++++++++++++++++

1 file changed, 107 insertions(+)

create mode 100644 zero-shot-vqa.md

diff --git a/zero-shot-vqa.md b/zero-shot-vqa.md

new file mode 100644

index 0000000000..5a8db1101f

--- /dev/null

+++ b/zero-shot-vqa.md

@@ -0,0 +1,107 @@

+# LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?

+

+

+

+

+

+

+ Figure 1: t-SNE visualization of Zero-Shot Generated and Reference Answers from Docmatix dataset

+

+

+## Method

+

+[Docmatix](https://huggingface.co/blog/docmatix) is the largest synthetic DocVQA dataset, generated from the curated document dataset [PDFA](https://huggingface.co/datasets/pixparse/pdfa-eng-wds). It is 100x larger than previously available datasets. Its human-curated counterpart is DocVQA, which serves as an evaluation benchmark for VQA models for document understanding. In this post, we use **a subset of Docmatix** consisting of around 1,700 train and 200 test samples, which can be downloaded here [FIXME: add the link to the dataset].

+

+Although the content of the question and answer pairs in Docmatix and DocVQA is similar, their styles differ significantly. Traditional metrics like CIDER, ANLS, and BLEU can be overly restrictive for zero-shot evaluation in this context. Motivated by the similarity of the embeddings observed in t-SNE (Figure 1), we decided to use a different evaluation metric. In this post, we consider the LAVE metric to better assess generalization on this unseen but semantically similar dataset.

+

+For our evaluation, we chose [MPLUGDocOwl1.5](https://arxiv.org/pdf/2403.12895) as a baseline model. This model achieves an 84% ANLS score on the test subset of the original DocVQA dataset. We then ran a zero-shot generation on a subset of Docmatix, consisting of 200 images. We used [Llama-2-Chat-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) for rating the answers.

+

+## About LAVE

+

+We followed the procedure outlined in the [paper](https://arxiv.org/html/2310.02567v2). The VQA evaluation is framed as an answer-rating task suitable for in-context learning with LLMs. We used a rating scale from 1 to 3 to account for ambiguous questions or incomplete answers. The prompt included a task description, several demonstrations of input/output, and the input for a test example.

+

+We structured our task description and included the instruction **"Give the rationale before rating"** so that the model justifies the rating it assigns. Each demonstration comprised a question, a set of reference answers, the candidate answer, the answer rating, and an explanation for the rating. We also included the instruction **"Provide only one rating"** to avoid sentence-by-sentence analysis, which sometimes resulted in several ratings.

+

+```py

+task_description = """You are given a question, a set of gold-standard reference answers written by

+experts, and a candidate answer. Please rate the accuracy of the candidate answer for the question

+considering the reference answers. Use a scale of 1-3, with 1 indicating an incorrect or irrelevant

+answer, 2 indicating an ambiguous or incomplete answer, and 3 indicating a correct answer.

+Give the rationale before rating. Provide only one rating.

+THIS IS VERY IMPORTANT:

+A binary question should only be answered with 'yes' or 'no',

+otherwise the candidate answer is incorrect."""

+

+demonstrations = [

+ {

+ "question": "What's the weather like?",

+ "reference_answer": ["sunny", "clear", "bright", "sunny", "sunny"],

+ "generated_answer": "cloudy"

+ }

+]

+```

+

+#### Scoring Function

+

+Given the LLM’s generated text for the test example, we extracted the rating from the last character (either 1, 2, or 3) and mapped it to a score in the range [0, 1]: $$ s = \frac{r - 1}{2} $$

+

+#### Table of Results

+

+The results of our evaluation are summarized in the table below:

+

+

+

+| Metric | CIDER | BLEU | ANLS | LAVE |
+|--------|-------|------|------|------|
+| Score | 0.1411 | 0.0032 | 0.002 | 0.58 |

+

+

+

+

+## Qualitative Examples

+

+

+

+

+ Figure 2: Llama rating and rationale.

+

+

+

+

+

+ Figure 3: Llama rating and rationale.

+

+

+

+## Are we too strict in evaluating VQA systems and do we need finetuning?

+

+We have approximately 50% accuracy when using LLMs to evaluate responses, indicating that answers can be correct despite not adhering to a strict format. This suggests that our current evaluation metrics may be too rigid. It’s important to note that this is not a comprehensive research paper, and more ablation studies are needed to fully understand the effectiveness of different metrics on the evaluation of zero-shot performance on synthetic dataset. We hope this work serves as a starting point to broaden the current research focus on improving the evaluation of zero-shot vision-language models within the context of synthetic datasets and to explore more efficient approaches beyond prompt learning.

+

+## References

+

+[FIXME: add bibtex refs]

From 0a8c409f70190978c4ddc85893fb7efed253aa20 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Wed, 24 Jul 2024 18:36:25 +0200

Subject: [PATCH 04/22] Delete zero-shot-vqa.md

---

zero-shot-vqa.md | 107 -----------------------------------------------

1 file changed, 107 deletions(-)

delete mode 100644 zero-shot-vqa.md

diff --git a/zero-shot-vqa.md b/zero-shot-vqa.md

deleted file mode 100644

index 5a8db1101f..0000000000

--- a/zero-shot-vqa.md

+++ /dev/null

@@ -1,107 +0,0 @@

-# LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?

-

-

-

-

-

-

- Figure 1: t-SNE visualization of Zero-Shot Generated and Reference Answers from Docmatix dataset

-

-

-## Method

-

-[Docmatix](https://huggingface.co/blog/docmatix) is the largest synthetic DocVQA dataset, generated from the curated document dataset [PDFA](https://huggingface.co/datasets/pixparse/pdfa-eng-wds). It is 100x larger than previously available datasets. Its human-curated counterpart is DocVQA, which serves as an evaluation benchmark for VQA models for document understanding. In this post, we use **a subset of Docmatix** consisting of around 1,700 train and 200 test samples, which can be downloaded here [FIXME: add the link to the dataset].

-

-Although the content of the question and answer pairs in Docmatix and DocVQA is similar, their styles differ significantly. Traditional metrics like CIDER, ANLS, and BLEU can be overly restrictive for zero-shot evaluation in this context. Motivated by the similarity of the embeddings observed in t-SNE (Figure 1), we decided to use a different evaluation metric. In this post, we consider the LAVE metric to better assess generalization on this unseen but semantically similar dataset.

-

-For our evaluation, we chose [MPLUGDocOwl1.5](https://arxiv.org/pdf/2403.12895) as a baseline model. This model achieves an 84% ANLS score on the test subset of the original DocVQA dataset. We then ran a zero-shot generation on a subset of Docmatix, consisting of 200 images. We used [Llama-2-Chat-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) for rating the answers.

-

-## About LAVE

-

-We followed the procedure outlined in the [paper](https://arxiv.org/html/2310.02567v2). The VQA evaluation is framed as an answer-rating task suitable for in-context learning with LLMs. We used a rating scale from 1 to 3 to account for ambiguous questions or incomplete answers. The prompt included a task description, several demonstrations of input/output, and the input for a test example.

-

-We structured our task description and included the instruction **"Give the rationale before rating"** so that the model justifies the rating it assigns. Each demonstration comprised a question, a set of reference answers, the candidate answer, the answer rating, and an explanation for the rating. We also included the instruction **"Provide only one rating"** to avoid sentence-by-sentence analysis, which sometimes resulted in several ratings.

-

-```py

-task_description = """You are given a question, a set of gold-standard reference answers written by

-experts, and a candidate answer. Please rate the accuracy of the candidate answer for the question

-considering the reference answers. Use a scale of 1-3, with 1 indicating an incorrect or irrelevant

-answer, 2 indicating an ambiguous or incomplete answer, and 3 indicating a correct answer.

-Give the rationale before rating. Provide only one rating.

-THIS IS VERY IMPORTANT:

-A binary question should only be answered with 'yes' or 'no',

-otherwise the candidate answer is incorrect."""

-

-demonstrations = [

- {

- "question": "What's the weather like?",

- "reference_answer": ["sunny", "clear", "bright", "sunny", "sunny"],

- "generated_answer": "cloudy"

- }

-]

-```

-

-#### Scoring Function

-

-Given the LLM’s generated text for the test example, we extracted the rating from the last character (either 1, 2, or 3) and mapped it to a score in the range [0, 1]: $$ s = \frac{r - 1}{2} $$

-

-#### Table of Results

-

-The results of our evaluation are summarized in the table below:

-

-

-

-| Metric | CIDER | BLEU | ANLS | LAVE |
-|--------|-------|------|------|------|
-| Score | 0.1411 | 0.0032 | 0.002 | 0.58 |

-

-

-

-

-## Qualitative Examples

-

-

-

-

- Figure 2: Llama rating and rationale.

-

-

-

-

-

- Figure 3: Llama rating and rationale.

-

-

-

-## Are we too strict in evaluating VQA systems and do we need finetuning?

-

-We have approximately 50% accuracy when using LLMs to evaluate responses, indicating that answers can be correct despite not adhering to a strict format. This suggests that our current evaluation metrics may be too rigid. It’s important to note that this is not a comprehensive research paper, and more ablation studies are needed to fully understand the effectiveness of different metrics on the evaluation of zero-shot performance on synthetic dataset. We hope this work serves as a starting point to broaden the current research focus on improving the evaluation of zero-shot vision-language models within the context of synthetic datasets and to explore more efficient approaches beyond prompt learning.

-

-## References

-

-[FIXME: add bibtex refs]

From 7de22236489a5dca78286a327356dd10e79bebfd Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Wed, 24 Jul 2024 18:39:40 +0200

Subject: [PATCH 05/22] Create zero-shot-vqa-docmatix.md

---

zero-shot-vqa-docmatix.md | 107 ++++++++++++++++++++++++++++++++++++++

1 file changed, 107 insertions(+)

create mode 100644 zero-shot-vqa-docmatix.md

diff --git a/zero-shot-vqa-docmatix.md b/zero-shot-vqa-docmatix.md

new file mode 100644

index 0000000000..5a8db1101f

--- /dev/null

+++ b/zero-shot-vqa-docmatix.md

@@ -0,0 +1,107 @@

+# LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?

+

+

+

+

+

+

+ Figure 1: t-SNE visualization of Zero-Shot Generated and Reference Answers from Docmatix dataset

+

+

+## Method

+

+[Docmatix](https://huggingface.co/blog/docmatix) is the largest synthetic DocVQA dataset, generated from the curated document dataset [PDFA](https://huggingface.co/datasets/pixparse/pdfa-eng-wds). It is 100x larger than previously available datasets. Its human-curated counterpart is DocVQA, which serves as an evaluation benchmark for VQA models for document understanding. In this post, we use **a subset of Docmatix** consisting of around 1,700 train and 200 test samples, which can be downloaded here [FIXME: add the link to the dataset].

+

+Although the content of the question and answer pairs in Docmatix and DocVQA is similar, their styles differ significantly. Traditional metrics like CIDER, ANLS, and BLEU can be overly restrictive for zero-shot evaluation in this context. Motivated by the similarity of the embeddings observed in t-SNE (Figure 1), we decided to use a different evaluation metric. In this post, we consider the LAVE metric to better assess generalization on this unseen but semantically similar dataset.
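To make "overly restrictive" concrete, here is a minimal sketch of ANLS for a single question, assuming the usual definition (one minus the normalized Levenshtein distance, zeroed once that distance reaches a threshold of 0.5, maximized over the reference answers). The function names and the example strings are invented for illustration; this is not the evaluation script behind the scores reported in this post.

```python
def levenshtein(a, b):
    """Edit distance between two strings (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def anls_single(candidate, references, threshold=0.5):
    """ANLS for one question: best thresholded similarity over all references."""
    cand = candidate.strip().lower()
    best = 0.0
    for ref in references:
        ref = ref.strip().lower()
        longest = max(len(cand), len(ref))
        # Normalized distance in [0, 1]; two empty strings count as identical.
        nl = levenshtein(cand, ref) / longest if longest else 0.0
        score = 1.0 - nl if nl < threshold else 0.0
        best = max(best, score)
    return best


# A terse answer matches the reference; a verbose but correct one scores zero.
short_score = anls_single("24 July 2024", ["24 July 2024"])
verbose_score = anls_single("The invoice was issued on 24 July 2024.", ["24 July 2024"])
```

Under these assumptions the verbose answer is penalized purely for its length, which is the kind of style mismatch that string-overlap metrics cannot see past.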

+

+For our evaluation, we chose [MPLUGDocOwl1.5](https://arxiv.org/pdf/2403.12895) as a baseline model. This model achieves an 84% ANLS score on the test subset of the original DocVQA dataset. We then ran a zero-shot generation on a subset of Docmatix, consisting of 200 images. We used [Llama-2-Chat-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) for rating the answers.

+

+## About LAVE

+

+We followed the procedure outlined in the [paper](https://arxiv.org/html/2310.02567v2). The VQA evaluation is framed as an answer-rating task suitable for in-context learning with LLMs. We used a rating scale from 1 to 3 to account for ambiguous questions or incomplete answers. The prompt included a task description, several demonstrations of input/output, and the input for a test example.

+

+We structured our task description and included the instruction **"Give the rationale before rating"** so that the model justifies the rating it assigns. Each demonstration comprised a question, a set of reference answers, the candidate answer, the answer rating, and an explanation for the rating. We also included the instruction **"Provide only one rating"** to avoid sentence-by-sentence analysis, which sometimes resulted in several ratings.

+

+```py

+task_description = """You are given a question, a set of gold-standard reference answers written by

+experts, and a candidate answer. Please rate the accuracy of the candidate answer for the question

+considering the reference answers. Use a scale of 1-3, with 1 indicating an incorrect or irrelevant

+answer, 2 indicating an ambiguous or incomplete answer, and 3 indicating a correct answer.

+Give the rationale before rating. Provide only one rating.

+THIS IS VERY IMPORTANT:

+A binary question should only be answered with 'yes' or 'no',

+otherwise the candidate answer is incorrect."""

+

+demonstrations = [

+ {

+ "question": "What's the weather like?",

+ "reference_answer": ["sunny", "clear", "bright", "sunny", "sunny"],

+ "generated_answer": "cloudy"

+ }

+]

+```
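The prompt layout described above (task description, then demonstrations, then the test example) could be assembled roughly as follows. This is a sketch under assumptions: the helper names, the field labels such as `Question:` and `Output:`, and the `rationale` and `rating` keys are hypothetical and do not appear in the snippet above, and the test question is invented.

```python
def format_example(question, reference_answers, candidate_answer):
    """Render one question/answers block; the field labels are illustrative."""
    refs = ", ".join(reference_answers)
    return (
        f"Question: {question}\n"
        f"Reference answers: {refs}\n"
        f"Candidate answer: {candidate_answer}\n"
    )


def build_prompt(task_description, demonstrations, question, reference_answers, candidate_answer):
    """Concatenate the task description, the demonstrations, and the test example."""
    blocks = [task_description.strip()]
    for demo in demonstrations:
        block = format_example(demo["question"], demo["reference_answer"], demo["generated_answer"])
        # "rationale" and "rating" are hypothetical keys, added only when present.
        if "rationale" in demo and "rating" in demo:
            block += f"Output: {demo['rationale']} So rating={demo['rating']}\n"
        blocks.append(block)
    # The test example ends with an open "Output:" for the LLM to complete.
    blocks.append(format_example(question, reference_answers, candidate_answer) + "Output:")
    return "\n\n".join(blocks)


task_description = "Rate the candidate answer on a scale of 1-3. Give the rationale before rating."
demonstrations = [
    {
        "question": "What's the weather like?",
        "reference_answer": ["sunny", "clear", "bright", "sunny", "sunny"],
        "generated_answer": "cloudy",
        "rationale": "The candidate answer contradicts the reference answers.",
        "rating": 1,
    }
]
prompt = build_prompt(
    task_description,
    demonstrations,
    "What is the invoice date?",
    ["24 July 2024"],
    "July 24, 2024",
)
```

Placing the rationale before the rating in each demonstration mirrors the instruction in the task description, so the rating is the last thing the model writes.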

+

+#### Scoring Function

+

+Given the LLM’s generated text for the test example, we extracted the rating from the last character (either 1, 2, or 3) and mapped it to a score in the range [0, 1]: $$ s = \frac{r - 1}{2} $$
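The scoring step can be sketched in a few lines. The function names are illustrative, and returning `None` for an output that does not end in 1, 2, or 3 is an assumption; the post does not say how such outputs were handled.

```python
def extract_rating(generated_text):
    """Return the rating (1, 2, or 3) read from the final character, else None."""
    text = generated_text.strip()
    if text and text[-1] in "123":
        return int(text[-1])
    return None  # assumption: unparseable outputs are flagged rather than guessed


def rating_to_score(rating):
    """Map a rating r in {1, 2, 3} to s = (r - 1) / 2 in [0, 1]."""
    return (rating - 1) / 2


# Invented LLM output, used only to exercise the two helpers.
example_output = "The candidate answer matches the reference. So rating=3"
example_score = rating_to_score(extract_rating(example_output))
```

With this mapping, ratings of 1, 2, and 3 become scores of 0, 0.5, and 1 respectively.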

+

+#### Table of Results

+

+The results of our evaluation are summarized in the table below:

+

+

+

+| Metric | CIDER | BLEU | ANLS | LAVE |
+|--------|-------|------|------|------|
+| Score | 0.1411 | 0.0032 | 0.002 | 0.58 |

+

+

+

+

+## Qualitative Examples

+

+

+

+

+ Figure 2: Llama rating and rationale.

+

+

+

+

+

+ Figure 3: Llama rating and rationale.

+

+

+

+## Are we too strict in evaluating VQA systems and do we need finetuning?

+

+We have approximately 50% accuracy when using LLMs to evaluate responses, indicating that answers can be correct despite not adhering to a strict format. This suggests that our current evaluation metrics may be too rigid. It’s important to note that this is not a comprehensive research paper, and more ablation studies are needed to fully understand the effectiveness of different metrics on the evaluation of zero-shot performance on synthetic dataset. We hope this work serves as a starting point to broaden the current research focus on improving the evaluation of zero-shot vision-language models within the context of synthetic datasets and to explore more efficient approaches beyond prompt learning.

+

+## References

+

+[FIXME: add bibtex refs]

From 74bde5c17b1e87b540921befaaf4ff43e4361919 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Thu, 25 Jul 2024 10:17:36 +0200

Subject: [PATCH 06/22] Update zero-shot-vqa-docmatix.md

---

zero-shot-vqa-docmatix.md | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/zero-shot-vqa-docmatix.md b/zero-shot-vqa-docmatix.md

index 5a8db1101f..a4cabe75af 100644

--- a/zero-shot-vqa-docmatix.md

+++ b/zero-shot-vqa-docmatix.md

@@ -1,12 +1,12 @@

# LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?

-

+

+

+

+

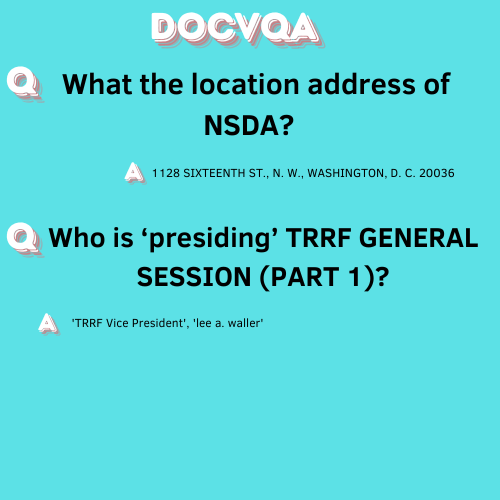

+ Figure 2: Examples of Q&A pairs from the Docmatix and DocVQA test sets. Note: the corresponding images are not shown here.

+

+

Although the content of the question and answer pairs in Docmatix and DocVQA is similar, their styles differ significantly. Traditional metrics like CIDER, ANLS, and BLEU can be overly restrictive for zero-shot evaluation in this context. Motivated by the similarity of the embeddings observed in t-SNE (Figure 1), we decided to use a different evaluation metric. In this post, we consider the LAVE metric to better assess generalization on this unseen but semantically similar dataset.

For our evaluation, we chose [MPLUGDocOwl1.5](https://arxiv.org/pdf/2403.12895) as a baseline model. This model achieves an 84% ANLS score on the test subset of the original DocVQA dataset. We then ran a zero-shot generation on a subset of Docmatix, consisting of 200 images. We used [Llama-2-Chat-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) for rating the answers.

@@ -78,6 +87,15 @@ The results of our evaluation are summarized in the table below:

+

+

+

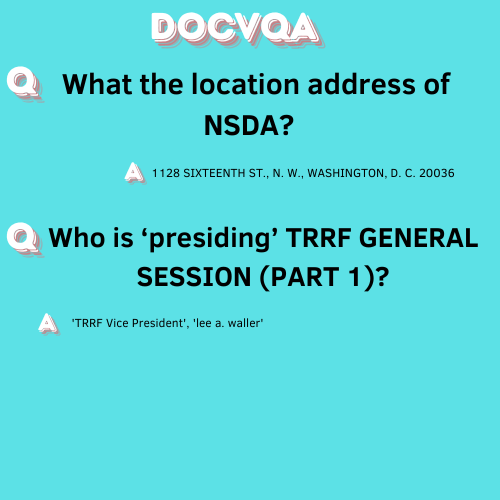

+ Figure 5: t-SNE visualization of Question, Answer and Image features from Docmatix and DocVQA datasets

+

## Qualitative Examples

From ba639f4e4aacd467678eff9d208d5101068705d6 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Thu, 25 Jul 2024 10:11:26 +0000

Subject: [PATCH 09/22] added refs

---

zero-shot-vqa-docmatix.md | 58 +++++++++++++++++++++++++++++++++++----

1 file changed, 53 insertions(+), 5 deletions(-)

diff --git a/zero-shot-vqa-docmatix.md b/zero-shot-vqa-docmatix.md

index c5733c03f8..d334429794 100644

--- a/zero-shot-vqa-docmatix.md

+++ b/zero-shot-vqa-docmatix.md

@@ -88,9 +88,9 @@ The results of our evaluation are summarized in the table below:

@@ -118,8 +118,56 @@ The results of our evaluation are summarized in the table below:

## Are we too strict in evaluating VQA systems and do we need finetuning?

-We have approximately 50% accuracy when using LLMs to evaluate responses, indicating that answers can be correct despite not adhering to a strict format. This suggests that our current evaluation metrics may be too rigid. It’s important to note that this is not a comprehensive research paper, and more ablation studies are needed to fully understand the effectiveness of different metrics on the evaluation of zero-shot performance on synthetic dataset. We hope this work serves as a starting point to broaden the current research focus on improving the evaluation of zero-shot vision-language models within the context of synthetic datasets and to explore more efficient approaches beyond prompt learning.

+We observe an accuracy gain of approximately 50% when using LLMs to evaluate responses, indicating that answers can be correct despite not adhering to a strict format. This suggests that our current evaluation metrics may be too rigid. Note that this is not a comprehensive research paper, and more ablation studies are needed to fully understand how effective different metrics are for evaluating zero-shot performance on synthetic datasets. We hope this work serves as a starting point for broadening the current research focus to include better evaluation of zero-shot vision-language models on synthetic datasets, and for exploring more efficient approaches beyond prompt learning.

## References

-[FIXME: add bibtex refs]

+```

+@inproceedings{cascante2022simvqa,

+ title={SimVQA: Exploring simulated environments for visual question answering},

+ author={Cascante-Bonilla, Paola and Wu, Hui and Wang, Letao and Feris, Rogerio S and Ordonez, Vicente},

+ booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

+ pages={5056--5066},

+ year={2022}

+}

+

+@article{hu2024mplug,

+ title={mPLUG-DocOwl 1.5: Unified structure learning for OCR-free document understanding},

+ author={Hu, Anwen and Xu, Haiyang and Ye, Jiabo and Yan, Ming and Zhang, Liang and Zhang, Bo and Li, Chen and Zhang, Ji and Jin, Qin and Huang, Fei and others},

+ journal={arXiv preprint arXiv:2403.12895},

+ year={2024}

+}

+

+@article{agrawal2022reassessing,

+ title={Reassessing evaluation practices in visual question answering: A case study on out-of-distribution generalization},

+ author={Agrawal, Aishwarya and Kaji{\'c}, Ivana and Bugliarello, Emanuele and Davoodi, Elnaz and Gergely, Anita and Blunsom, Phil and Nematzadeh, Aida},

+ journal={arXiv preprint arXiv:2205.12191},

+ year={2022}

+}

+

+@inproceedings{li2023blip,

+ title={BLIP-2: Bootstrapping language-image pre-training with frozen image encoders and large language models},

+ author={Li, Junnan and Li, Dongxu and Savarese, Silvio and Hoi, Steven},

+ booktitle={International conference on machine learning},

+ pages={19730--19742},

+ year={2023},

+ organization={PMLR}

+}

+@inproceedings{manas2024improving,

+ title={Improving automatic vqa evaluation using large language models},

+ author={Ma{\~n}as, Oscar and Krojer, Benno and Agrawal, Aishwarya},

+ booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

+ volume={38},

+ number={5},

+ pages={4171--4179},

+ year={2024}

+}

+

+@article{li2023scigraphqa,

+ title={Scigraphqa: A large-scale synthetic multi-turn question-answering dataset for scientific graphs},

+ author={Li, Shengzhi and Tajbakhsh, Nima},

+ journal={arXiv preprint arXiv:2308.03349},

+ year={2023}

+}

+

+```

From f70530e90236f7cd55d6256ff3746fd5351f1509 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Thu, 25 Jul 2024 10:23:10 +0000

Subject: [PATCH 10/22] resolved major content related comment

---

zero-shot-vqa-docmatix.md | 23 ++++++++++++++---------

1 file changed, 14 insertions(+), 9 deletions(-)

diff --git a/zero-shot-vqa-docmatix.md b/zero-shot-vqa-docmatix.md

index d334429794..40d24a9262 100644

--- a/zero-shot-vqa-docmatix.md

+++ b/zero-shot-vqa-docmatix.md

@@ -4,13 +4,9 @@

-## Introduction

-Our community has recently focused on out-of-distribution (OOD) evaluation, utilizing methods like zero-shot transfer to unseen VQA tasks or fine-tuning on one VQA dataset and evaluating on another. This shift is increasingly relevant with the rise of synthetic datasets such as Docmatix, SciGraphQA, SimVQA used to fine-tune Vision Language Models (VLMs).

+What happens when we apply zero-shot VQA to a synthetic dataset? As illustrated in Figure 1, the generated answers often align semantically with the reference answers. However, most traditional VQA metrics fall into the 'very low' range. This raises the question: should we fine-tune the models, or should we develop new metrics that account for distribution shifts and focus on capturing the core meaning of answers?

-Traditionally, VQA Accuracy has been the main metric for evaluating model performance. It relies on exact string matching between a model's predicted answer and a set of reference answers annotated by humans. This metric worked well because VQA evaluation followed an independent and identically distributed (IID) paradigm, where training and testing data distributions were similar, allowing models to adapt effectively [See details here](https://arxiv.org/pdf/2205.12191).

-

-In OOD settings, generated answers might not match reference answers despite being correct due to differences in format, specificity, or interpretation. This paradigm is perfectly illustrated in the Figure 1, where we compare the zero-shot generated captions vs the reference captions from the synthetic dataset. This is particularly true for instruction-generated datasets and their human-curated counterparts. Some [methods](https://proceedings.mlr.press/v202/li23q.html) have attempted to align answer formats with references, but this only addresses the symptom, not the root cause of flawed evaluation metrics. While human evaluation is reliable, it is costly and not scalable, highlighting the need for metrics that better align with human judgment.

@@ -20,9 +16,18 @@ In OOD settings, generated answers might not match reference answers despite bei

Figure 1: t-SNE visualization of Zero-Shot Generated and Reference Answers from Docmatix dataset

+## Introduction

+

+Our community has recently focused on out-of-distribution (OOD) evaluation, using methods such as zero-shot transfer to unseen VQA tasks, or fine-tuning on one VQA dataset and evaluating on another. This shift is increasingly relevant with the rise of synthetic datasets such as Docmatix, SciGraphQA, and SimVQA, which are used to fine-tune Vision Language Models (VLMs).

+

+Traditionally, VQA Accuracy has been the main metric for evaluating model performance. It relies on exact string matching between a model's predicted answer and a set of reference answers annotated by humans. This metric worked well because VQA evaluation followed an independent and identically distributed (IID) paradigm, where training and testing data distributions were similar, allowing models to adapt effectively ([see details here](https://arxiv.org/pdf/2205.12191)).

+

+In OOD settings, generated answers might not match reference answers despite being correct, due to differences in format, specificity, or interpretation. Figure 1 illustrates this by comparing the zero-shot generated captions with the reference captions from the synthetic dataset. This is particularly true for instruction-generated datasets and their human-curated counterparts. Some [methods](https://proceedings.mlr.press/v202/li23q.html) have attempted to align answer formats with references, but this addresses only the symptom, not the root cause: flawed evaluation metrics. While human evaluation is reliable, it is costly and does not scale, highlighting the need for metrics that better align with human judgment.

+

+

## Method

-[Docmatix](https://huggingface.co/blog/docmatix) is the largest synthetic DocVQA dataset, generated from the curated document dataset, [PDFA] (https://huggingface.co/datasets/pixparse/pdfa-eng-wds). It is 100x larger than previously available datasets. The human-curated counterpart is DocVQA, which serves as an evaluation benchmark for VQA models for Document Understanding. In this post, we are going to use **the subset of Docmatix** which consists areound of 1700 train and 200 test samples, which can be downloaded here [Docmatix-zero-shot-exp](https://huggingface.co/datasets/HuggingFaceM4/Docmatix/viewer/zero-shot-exp).

+[Docmatix](https://huggingface.co/blog/docmatix) is the largest synthetic DocVQA dataset, generated from the curated document dataset [PDFA](https://huggingface.co/datasets/pixparse/pdfa-eng-wds). It is 100x larger than previously available datasets. Its human-curated counterpart is DocVQA, which serves as an evaluation benchmark for VQA models for Document Understanding. In this post, we use **a subset of Docmatix** consisting of around 1700 train and 200 test samples, which can be downloaded here: [Docmatix-zero-shot-exp](https://huggingface.co/datasets/HuggingFaceM4/Docmatix/viewer/zero-shot-exp).

@@ -88,9 +93,9 @@ The results of our evaluation are summarized in the table below:

From 6c8685312dc908e286fd1754e985a9ed81d29f1d Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Thu, 25 Jul 2024 10:29:09 +0000

Subject: [PATCH 11/22] formatting

---

zero-shot-vqa-docmatix.md | 9 +++++++++

1 file changed, 9 insertions(+)

diff --git a/zero-shot-vqa-docmatix.md b/zero-shot-vqa-docmatix.md

index 40d24a9262..920c88b7f0 100644

--- a/zero-shot-vqa-docmatix.md

+++ b/zero-shot-vqa-docmatix.md

@@ -1,3 +1,12 @@

+---

+title: "LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?"

+authors:

+- user: danaaubakirova

+- user: andito

+ guest: true

+

+---

+

# LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need Fine-Tuning?

From 9edabe69d6c9dd5992dea68dc8617bd8b8615857 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Thu, 25 Jul 2024 12:55:49 +0200

Subject: [PATCH 12/22] Update zero-shot-vqa-docmatix.md

---

zero-shot-vqa-docmatix.md | 21 +++++++++++----------

1 file changed, 11 insertions(+), 10 deletions(-)

diff --git a/zero-shot-vqa-docmatix.md b/zero-shot-vqa-docmatix.md

index 920c88b7f0..a98011ed5b 100644

--- a/zero-shot-vqa-docmatix.md

+++ b/zero-shot-vqa-docmatix.md

@@ -49,6 +49,17 @@ In OOD settings, generated answers might not match reference answers despite bei

Although the content of the question and answer pairs in Docmatix and DocVQA is similar, their styles differ significantly. Traditional metrics like CIDER, ANLS, and BLEU can be overly restrictive for zero-shot evaluation in this context. Motivated by the similarity of the embeddings observed in t-SNE (Figure 1), we decided to use a different evaluation metric. In this post, we consider the LAVE metric to better assess generalization on this unseen but semantically similar dataset.

+

+

+

+

+ Figure 5: t-SNE visualization of Question, Answer and Image features from Docmatix and DocVQA datasets

+

+

For our evaluation, we chose [MPLUGDocOwl1.5](https://arxiv.org/pdf/2403.12895) as a baseline model. This model achieves an 84% ANLS score on the test subset of the original DocVQA dataset. We then ran a zero-shot generation on a subset of Docmatix, consisting of 200 images. We used [Llama-2-Chat-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf) for rating the answers.

## About LAVE

@@ -101,16 +112,6 @@ The results of our evaluation are summarized in the table below:

-

-

-

- Figure 5: t-SNE visualization of Question, Answer and Image features from Docmatix and DocVQA datasets

-

-

## Qualitative Examples

From f93f966711124707534ba42acc58f8c0bdfcd307 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Thu, 25 Jul 2024 14:05:59 +0200

Subject: [PATCH 13/22] Update zero-shot-vqa-docmatix.md

---

zero-shot-vqa-docmatix.md | 1 -

1 file changed, 1 deletion(-)

diff --git a/zero-shot-vqa-docmatix.md b/zero-shot-vqa-docmatix.md

index a98011ed5b..eb9e1abea1 100644

--- a/zero-shot-vqa-docmatix.md

+++ b/zero-shot-vqa-docmatix.md

@@ -3,7 +3,6 @@ title: "LAVE: Zero-shot VQA Evaluation on Docmatix with LLMs - Do We Still Need

authors:

- user: danaaubakirova

- user: andito

- guest: true

---

From f59fbc7a8c751e6714a7d2c5152ba7615a8d9547 Mon Sep 17 00:00:00 2001

From: Dana Aubakirova <118912928+danaaubakirova@users.noreply.github.com>

Date: Thu, 25 Jul 2024 15:40:21 +0200

Subject: [PATCH 14/22] added thumbnail

---

assets/184_zero_shot_docmatix/thumb.001.jpeg | Bin 0 -> 492572 bytes

1 file changed, 0 insertions(+), 0 deletions(-)

create mode 100644 assets/184_zero_shot_docmatix/thumb.001.jpeg

diff --git a/assets/184_zero_shot_docmatix/thumb.001.jpeg b/assets/184_zero_shot_docmatix/thumb.001.jpeg

new file mode 100644

index 0000000000000000000000000000000000000000..e3a10282c96adcd9ddbef98591cfd74da50d9a6e

Binary files /dev/null and b/assets/184_zero_shot_docmatix/thumb.001.jpeg differ

z@d_*J?Av7wvyKTXO)U#aKWzfe-LuS7X8R((@KkPk&eimtr$nFVsl7dl7j#xjAPR)9

zVz0tSDYg8#8oI?Su<@dt!1;mW*x^_az`+GdEL5$HxzTGX#xw>q|FtQ3q9CpFGSq~~

zMrYR}4yMJ!Y!tzFIjOSTrfm^tymR7oFZ`+eF_Gt}OUhy*H;%o|oOjTEwneqd%`X|E

zTOGY(Q{g#n)9yrgvef-x^?_P>mXBJ-e0`RsL32=rA3S^5L=R%70)cY`fTay%(E;V0

z`z^$iZRW~w7`H}%!@49dieF?b9K#Z(f;yKT^;WS|Xp22D=74mBabwp*p{=(~C78NN

zq108+ApUuX5_)8-U8dg>8hY&RYMC-4WG&CS)sno0ZeR7{jkoHL&1*j1>!%=Q3fcBq

zHB*}knP8?Jj-!O(ohf27IdmYZrYhNMV$rE^pbg4$UJ>!>LNrLyRj58Ji!_*GX+mjk

zuiXpeVI7^eM&{%h@yHV#7sv62yOr7ls^SgrKeaVA;R0ah^4ZFn%Yn=P}Z}xH@~emz}kSxQ#ss+@#Z%

z6_JKHbbG)SA;-tTL95qpD6mTbxOz%%-&gQ!V(`^^tE3sw>mA+P@QLCu)epNthkqXa

zVYOa0H`8<@j(MeBRO);4-kq}8F6T1Ym=cEu6-Pf@iYIA#LdIoi(`_TY;@zx&O|#6l

zqA+>t<*U%Gu=OpU&w4yr;GsCsUof(;L&f*BTRYwkuJToAaOuq}c;I?>Zt^<}_Mn=0

z5F0e3I>oynBuPx&uVp{y8gTRqy4hJKF|O6sv7XyT)JQ&;)MGUgk^B9(P*f$E(F$qj

zF1Ivy@GODt=_FY3(6P|A8{DC>~x`Kd_6_56TMQ1(N6Vy|2MPb-NQe#

z1ZQnR3Xoau70crwqiD(+$)F1G9Re#U!D*wjvVD=$Xt;r1WmS#^P_$asAQW0~IPHnI#AfQj3aDF%BK

z!a%dm!8jp=9)%f1;YsKM>c}?tEU}3;8P&6x?aLR_@a5v=F

zqf@K7b6>v$B4_onZ|i+KnBcjjh@g?l%aeD{w`zuJJxU*=Ow)HU2pc^Y)123J(u)Qu

zYQgZKOeJY6$C0ZJVXKeW(R3`%k9~u>_);4x6cKLCB*xEdG;l91JU+tC`#xv1(9#lm

zeZgA$Reocb*0(&T>y_zsb>ux@K4ziYT>_O!4G*!

zf0w%rYSx!DWFJ1ZyK^A@*=0#%(Ia>jA>xSlJ1E5Kq-8^9^lsx8E+o6G0^9SoYl}E

z+uGf4ogNd4(1sf%!bi#r3ah3`WQ&rV0Ux?nEXH7qX4|3NEhZxmqK<#l!z)=zH}XQ`P`>(iu|rb6A)nY|Pk@@`&6A2)p;Zi3z((9*Fhk3?^Mn

zI06U0>^AAKdqr=Q2^Gi3HN8BT7c^8VuR&#v-e);=xYSiiRrFYekW!-TGmE61N^C4Y

zH{#U|2eSQgTsoDDoN6HP`HgWAeut*=60;_iF67zgg+(b6Kr?S}r?C6|kOxhbdD-k4~6Re^^9^xB*t?buLb;

zX^{(JGnOL5yXj|49RF&u$(xwXauq#laHXJm^02TX2Ili8we?wkUAn^-gX5}&Yg`{U

z@D9}pAg&TH?j)|t#+dc4I=>w&;|lRQH>Q(TpBGI*Tkd~1OI|42Uz;y>(guE)9#B9kiIyOM6gfBio4(3pF~jO_z1TG~O)i;VA@PI_2BQi1t(Z

z;r2XsPNAW!d{be7mu&7?|&^S

z5mF>;F?GAm<4g|MQ@cGAcMmj^hpR{N&bUy27(Svftrd<6#wf=H624WCXgCG^o|*Gn

zaL{+(;(*5IC)iUg?{97PfdStL9vsLeP33RdvqR6MOMeKVpBdu%2pTZJT3L`^vomufZ?G1!Ae^?=&17vZ+*cW}R`(bk^NmiGT;(uM^Co4li(KZ@a_$S(}K

zCsXV$lP6GBIEg*LGauIWJUKKKfR;r{-k=W9tvehbm%r?yqoHShEn%WuJ#J!#uh+(l

zeo#mRGjD2f6c4pi#SXxK;2Idb)BgUZfMoGo=&)cv48(Egf&hrUX2x;s0e%MT7`4Fk

zlsBsHPKd9`uN468J9C&8OSFsXvu4MY

ziU89>u|*#yC{wU#PAkj(I@6_dYTr6QfgjHfQP*o{1)$0xa1SN=^iaR-zjJOj+K+yG

zP*8G*4=OOhT5rCt6dD7Yz!&g-#J=2Z63`09it+Z3^PXtG3dmk9=k)JQXvypRajTqs

zHv3%W>C=Ch^sU(eSEzj$Lx}(jjt(<%-8+HZx1yk_IPU_rKFjRCj-4)qimlX5S6*Pf

z`_gelR`j4y5GjogoZSw#@RMnV7&?e6$GgODgo`i#Q{Y3hSaYvhk@4w{0olIk-0-c3

zDX@YO5Z~9wz=qa6bJp|{U*~L3p+wFa?u6+{

zPF?S8CqhCXw{p|XC`sWAN5S@{l|j35KQ&*nqvJqmPkxFwDCL_|juZ-)E`478g

zUX_lY@k?SSOw7hATLxx82j;!8q@{NM)yIBER4UO+IZ*Yb21q;B3ZKLA2X0}apa;1h

z7-b4RV=Tc$%@BE~_je*1;y!WuZS-i1al9i?&6H}+A=)>RwcJo+i*r#0#4ynVMS7}~

zYGS#rJwgB5`f)6*!Kz@V1sFk%8xkNy^Uy`6FV78Kk$O5HV&#~rYE}Gz9<5qMZ=bFR

z{S;1g9Nk);X-%A78TluBd_lVzovnPx;9ky2vrFF3N)04lD(>&k->`J+v>L2Idf}?j

ztGIs9Xh?Z)qpXOU)9IoC`HTU

ze;-uIc1){jZse1|hbjXq%i)A472!M=O7IHe_3c)ZdVh_Dt8pg5n_`(-XHyF|Ng{$jG94sy)kWS)E=c4HRJuW-*=X6TI&|(wN2`

zr&n^c%EH{`kg?s|?HI=}D}oPs{_nS`U06^VTPSQjjHyn}$edLP$=%h+q3rN*IkS*~

z_m-9+*hw3P{6f

z%~40sJ84UzxOn_@=YKJF`#+Q0RQLZkKu?bfv1;M~P622Q5E#YCNoM>na0S>uL{kaD

zxco`xP);e&lZk(=V|}fd7tFa>cKve%;x7y*jVg=OU_7X68&<9bJUq#jbKJ-8gQ@PG

zrZ>GY4W5)yfyj8p8>%aTomzZZx==Z{cn%kalF`qSsv3Q~I^^#DejuCvgO`!=glkOG

zX?|KkEi)opT4~-Pw-ehk&$qOifA@ux%x{{>T}^rm;MbSQrGdQqaol~o_ZQuW<5M+N

zEp(t|7`fp*Lf+cG=<7`Jt8IC%R&T6%8Z`<@IY-8Up2N#5GgH5#`~~q*a4Y

z5$_)b?T-Z|z1)2C?7GLZzy3@Q&gLX;mC#W@e>W=38r-#r`fjnq!-yX@Q{5iPgPxk)B|2D3)Y)l^KZejR

z+4PauKPABWUQdkPGq@Px=Iiv3t{5>*s?uwvheJVnkKR+d@84KYgWy^(Wr(*2+*5|F0UpJO7LzwBCd=1skD_;F-N66HL5fY<3

znhb6y=Yj{MCY@E}-c*344TjnlCU=Xs{H*=_)9Fx^^>6&upNN?i(61tze35({Q-K}H

zuQN693qR<;HK_g-AsjGmosn-Lf+Iu!?0EY8A`SBP6g@-0QN`*xL{J>v*on3pUEGw;UKNO^

zNV<%oJ&zvyQv>erYoym49oxtPWoUl261V#P1n{j@DEQxYAOsX5KL6k2RrZ}Y{O1M;

zUkbw4SPAWmn>jAFDTsy_f7vJDIArwDM1L_AM;G7HH_Cke;m}tjg&)6#(r{0Ge$M^e

z7q=f(9kpdzL?G<4{t~%TX3%?h>nr1@__ra0-fM*9^$%j-z~nx`5mzcQP5u%A$d^$j

zK;5_D{S@YHyZFMeiW#vqdC>*|epv7){^X^a8HF@2IYM`smoXERa9sSCuZtd=Xo!9l

zq%r9*6_YC)Dzt{1$wx46C)JQXf!=`X0eyjSNb4(wT)}?Ug8qqk0(}uqJoqUjL>vM-

z4VML51=4C>%WEIjYVh|OL~-}qYI7%~yH8pDvFBY@d-!OfzVytFe0x+VgE-Rqq{ljY

zmDk8^`7M;)r{qbguw(GFIO~nZ45xABy0DPr76x*`FYzCxmY*9QpYfSri_=lRkktmv

zIngC>r|2=R$&26eCU^oy4pQqeJCHXAY$alOj{L?4oPYd55A5Q>2Td<=J`!EgoEJ>)

ztYm@?^4ND8;^@?`NReXBjoV++hq_mG{B*`Dm&;ZS9km_1TcYg(nv2gs!peLV`y

zTX=GgS<@&j?<;0+9hh>g%8E}EZ`;BEcY~@d66390a`juSd;4IzZGC0Sjg^n5trtkv

zQ|WoC38Pu?7u0CRKF-3g

z8#GXSF0jLU6#x)TetNmN9}d{!wptQ*f=MRrBW4HQP*%pSHan=NR@YgLH)L&1Md|Lj

zai^9jH-8J6Jxf(Nv4OcECvUZ-xPB*kXi#gt>S6el>q_M)yqdXYXJlU6`=jT%lTg8g

zexLmNp=S{S@o%LcFK+p(Bdpk-M&x$1$#H*RrxA>SCk=cjeK(rshPEMYfxK(tBn1~)

zhf<(A#T~}qif^CxZu~BOL3m~{(qK0EZT|D=YR?y0zN~`kbX&%w>?uZAV*tJt)4nTt

zhIQb}&nipZ8tDs}RiO;^&=L0PL@5Bnm5H#pj$sbHC|zQ&oNyOE$bx>MP~wT~Au7cW$PBKu<=W$y$GR8=>JeVsw2bercZs(Wb;+q93R

zuS{k{xp``j0_)}gp8u}xK42e8ZJuSMJiDPnH{;&s^m1qa7klp&*5ul6{bDbuSdcEL

zH0er}8pR0+2uK$Yq9Rgai1bjSq9A<&0!oWgrGzMugq}$k5D`L=L=p%BQbH1h5J;K(

znQQI$;JdDMt&?}{lW(tMkqg4}-1q+;_ZYu1LOT-MidaNFb*qNY$M4jeO$o0(2Iyek53cnj+)o`Mwrvu?Hibu{k

zY@G?vVkxKTuioO9&eay{>$+N;pq6@-I0vZ(uIQ9WQnrH{qeFgUns>Fpdt?8HMq11pW)qv9n#OV4

z*DeljTQD!xzgpR~#m`lhX9GdGsspnHUq&$+6q3?W`6AJ;u$80GCNs?7T3w!MX6iw$j5vf#Woh}m~|

zh9l2sQ|SB9Tty|LbXIQaj6Xm~jmmAO(vvFIWey+rEUIkc_cEM|R<5y%W71kY<9<8m

zZ&nX$pA>cVW9V^z!K)QZTf6sMvMV9kT6@+iK<=;$CHIFj8h&*}(Wz

zFo)=-Bt#iqkvq9e-uDlTUg>e>#TQ41O72YW;4cX@wiae)(F&a{<$V_Lo0O&P!<+9a

z&*!Sf+BxHy6&Xxd|&I9zJdn3nVg4rVsJ5~+bdtN;5

z5Pwo;52of)42bFb2jMhdNIFKfZTw<(A98=3ir;`*C09OfA5$I4db4Kq4+5@>*xg;T

zd`wzaQo9gq^S6M

zguvv{IL4!b=-8k+&?||__h{~8K(J92-?AYpREC8F1T`BIeb-vX

z@Fo~pDGeVc5BopbgxSE#H%WH7(>#stopy<7HHjSGVRvN9PfYLvz&!&Xl9JO+{DK&1

zcJy8hhR3VHkd8{rvfipO2uJKzv&A`z9$)2sk49HXv3S

zVXZq@>3OlPp4tH`EYN;x>e!t@?&ycj7<8^{d+zXRbR~q-zGb^K(~^s-@RP9NH>1Er

ze*=>|-;fV0`6w>a{f<9N_co??VqxLS!UZh$xfs#SCv)=Zx4;JiJM9KJ-j+^J6

z=IFrd)_=3y7#%48pEo!+xX{r@**49*k}VY_f>w+XJ0#>lZ${Vsi<+y&DsEiIGb9o`

zZYB)*6DGm6c+rj*{K-z#pS;^#aq+bOxA&J;<6T-<-(L-IqOo&{d9rP*de+;;`{!N}

zDlN2o9sRp`Ock;vjN1}d{a-uv{%_s&|IObP@jt*~Ju1Ln

zVgY>h&Wr@W0)|d+i(n#ev9+iumAS>YpdP3YK+J9F2tyy)fYJ?k)Zpc+l>K~`j@6HE-TxEfLT=0e4Y+B43+*gIZ>A4D<;AYSnbQZ>-83

zR_jpq)3loxO1|wDpgG{rlyqS~F{;TpeRqZF(QA_F1Nc+Ac3qI?ac=92t!?>kukXu4

zLrG?JyAe39anX+iN@IQgf+cx?{&Q=E=dhNOu{y<4=jGP@E$|TbJPOohY&|cO!Pg^C

z-_lTT^PK0vjxF2lM?SdJAA2|OH*->$yV!6O=+=TXO{g8jGfAHsCw|h;=4z+mr

zWz$|OU~Bj&_A4l}aWCV1YJBF@E&|jaU!3q%TzR?q^q7QNLO{LPMn?;ADF5ffY-SDOGeAWwxqW~4#-Y#+v0S=`tr6${<(SD_Ka

zjkx4G8KL1gXDo{V%r2r4qfs^G(&FRmMEWGzWo#Rlo3Bmt?%T=`whRxHRlVu^pezgi

zHBv6(bHCK=>8tm>lFQ}KiuLMD`Gf&+G{D(dI%)Jf-<4+&2V)XOXff@aYut;YAU}=7

z7UWYMq%fTR*T#=qI>$x(kjIT&tJ$DlzWCI|1h|?GRL`EEe%=~={_eoia@1>A1L&sN

zeE!+&fqd1~IseJ-@wA(qWp@exoiT`}$=O^8Fyq%1ezEG|7OXIzoLQX{9hP`d8*I{fIAfx9rkn

zo~oz$LPY&S6t)&an(;J{-95}JGC6KdZ4V%B@CT+W8@f%i`W`rpVk7j1`mAy~^#vZz

z7vXQ@_;`(ZjWvShH^gMq6|E(f#VzCrNiDcYqtiT_GalZ0_+rfM~FhyuVqAe

zWY72*%4T>yuk6lW=B0X1RqHad*6k&3P~~w5jHgBABl7&u-yd94m7S~f>;ykk%gTL~

z46Bo;+G%oAU6Z+%t^HGjB~{}~Y5LC9Ki6J|TTMc@8xX^jQwxoF7;tfnG*Ms=Q<2k<

zIo?Pg{Rn$s7jf}VjW(^>Rb+y@^!wSr1rD+abH0gk8CL9wd79)Ls}c%}V5|@Inn>et

ztsfalGf-GXd3sGHX;~r;1%2l)vy>q*7hEWFdNEYj

znjgd#nG;ZkwhoJ_KNdtCz~kaNcWoXw9C18rdNr!7(o?7x=0|M(qZ#V;C`2x557OXUed|#$w

zPsS@5oEjF-bnM&BEwA!Woy>EQ)|^0ZQ4-?Raal^&+_;J#Rwr|-Mf3xE2A9E;JGvkM

z2=Lu%=g@@G!dY33`wrd`or4EJJwyy!C)KFyHjW^%==RWBu&9B%{

zWdkD|Dls`k2SDQ2F0J-2k5r5<>rg^m>p0Aid2S-*C(?129X(4IgrDhRsmWpEBmqwO

zCi>Vfm--)umO-i#wrLRW`@)3Jx3ULwI~Nddzh~+0j=ETa-^%4_a@=9Rf6Q}ZI7Kfn

z8!b_hU7!TKWQ>~3GrlU^(2PlsCwKujT1jP${#PjC_Ko(*7?o-gq*$hTypg&J`_Nkv

zzzWY#b#Waseu2~dXgplob-s2F#e>uhm3q=G{G|7&#WDLE5@y0rx(Y5NUmZ2Ikh;0I

zFZtxfr`3CpH|}}xpU=}i*~*Z0P#*aAn%};DzE0dXnFDxVjr{@m()Z

z%OC_*Mtl`(aQ4OhQ$NhkcnwM3{c-bTtuAX>uECOnx%Tt+q2Jx}NVW#P4?*Ie|xZN{C++8Hy_Bd|+PfsY#Zm$2YVJ%Ui{S73DL{V9UL|V1*42y(bYZXn@>NNUg

z|6|{G?m6JE9?sP0fxV2f>jj;PaRjmshxkO3^FXJ0u@b03gQf&@qkubUqP==~+j+Wb

z8u4)#W&$J>8ho_vAnK?xqA@0|5x{|A>s#_E55MdgWXp6S8D8jk1ctsAK?S9d71%x;

zOa9lWvw*s@mm5q&ST?^Tn$G=!bJmzBit@CU~)4ulPZ>>zJyX`<)Zoq5DaPQ=C`RLiU8Zsy7}AI<9(+*zP*1bRdG(L%ln5FG`VS;EE27PDdKa{c

zUf4#4z$>Q7)2w}&%>zG+n)(dRaJ>?XBL}9J@2$wM53(8vqmb$CKo$j6Sl;PwG|=l`