From 338243666ae16934803898f31437ce6f04a798b0 Mon Sep 17 00:00:00 2001

From: Christina Conrad <114612268+cconrad8@users.noreply.github.com>

Date: Thu, 4 Apr 2024 15:01:33 +0000

Subject: [PATCH 1/2] edit clinical metadata instructions

---

docs/onboarding/annotate.md | 3 +-

docs/onboarding/data-analysis.md | 2 +-

docs/onboarding/data-processing.md | 58 ---------

docs/onboarding/upload-data.md | 66 ----------

docs/onboarding/upload-data.mdx | 191 +++++++++++++++++++++++++++++

5 files changed, 193 insertions(+), 127 deletions(-)

delete mode 100644 docs/onboarding/data-processing.md

delete mode 100644 docs/onboarding/upload-data.md

create mode 100644 docs/onboarding/upload-data.mdx

diff --git a/docs/onboarding/annotate.md b/docs/onboarding/annotate.md

index 34ccc17..4435c0e 100644

--- a/docs/onboarding/annotate.md

+++ b/docs/onboarding/annotate.md

@@ -1,10 +1,9 @@

---

-sidebar_position: 6

+sidebar_position: 5

---

# Applying Metadata to Data

-

After uploading data, contributors can find those files on our [Data Curator App](https://dca.app.sagebionetworks.org/), which is used to help annotate the data. Select **Gray Foundation**, your project name, and the folder of files you want to annotate. Then select a metadata template.

Click download template and go through the steps of populating the template. Export the template as a .csv and reupload it to the data curator app and validate. After it passes validation, you may submit it and your annotations will now appear on Synapse with your data.

diff --git a/docs/onboarding/data-analysis.md b/docs/onboarding/data-analysis.md

index f4fe6fc..76a2bc9 100644

--- a/docs/onboarding/data-analysis.md

+++ b/docs/onboarding/data-analysis.md

@@ -1,5 +1,5 @@

---

-sidebar_position: 7

+sidebar_position: 6

---

# Data Analysis

diff --git a/docs/onboarding/data-processing.md b/docs/onboarding/data-processing.md

deleted file mode 100644

index a8593d6..0000000

--- a/docs/onboarding/data-processing.md

+++ /dev/null

@@ -1,58 +0,0 @@

----

-sidebar_position: 5

----

-

-# Data Organization

-

-Data is organized by its assay type and, logically, its processed type in folders.

-Each top-level folder and all of its subfolders must contain data of the same type (see details below).

-

-The DCC will create empty, common top-level folders as well as subfolders for the expected levels of data.

-This depends on whether both raw or processed data are expected.

-Sometimes only raw data or only processed data is expected.

-If only one level of data is expected, everything is "collapsed" into only one folder and there are no subfolders.

-Subfolders must be of the same data type and level as the root folder they are contained.

-

-```plaintext

-└── single_cell_RNA_seq
-    ├── single_cell_RNA_seq_level1
-        ├── fileA.fastq
-        ├── fileB.fastq
-        ├── fileC.fastq
-        └── fileD.fastq
-    ├── single_cell_RNA_seq_level2
-        ├── fileA.bam
-        ├── fileB.bam
-        ├── fileC.bam
-        └── fileD.bam
-    ├── single_cell_RNA_seq_level3
-        ├── raw_counts.txt
-        ├── normalized_counts.txt
-    ├── single_cell_RNA_seq_level4
-        ├── t-SNE.txt

-```

-

-By understanding the data generation process, the Data Coordination Center (DCC) can effectively collaborate with each team to address the following questions:

-

-- What are the different types of data that will be generated, and how can the data artifacts from this workflow be optimally handled and managed?

-- Are there any recommendations that can be provided to ensure a smooth workflow and avoid potential issues in the downstream analysis?

-- What additional resources can the DCC offer, if available?

-

-### Data Processing by the Project Team

-

-Depending on their funded aims, project teams may have specialized data workflows, which can include:

-

-- Generating sequencing data and deriving data using multiple variant calling pipelines.

-- Producing high-resolution images and extracting summary features from images.

-- Combining different types of data.

-

-During the onboarding process, it is essential to discuss the anticipated workflow, especially if it is complex or deviates from the standard. Project teams should provide information or documentation regarding their workflow.

-

-### Data Processing by the DCC

-

-In addition to the project team's data processing, the DCC also performs data processing on the uploaded data in Synapse. This processing includes:

-

-- Quality control assessments.

-- File format conversions.

-- Other necessary data transformations to facilitate data loading and sharing in cBioPortal or other analysis applications.

-

diff --git a/docs/onboarding/upload-data.md b/docs/onboarding/upload-data.md

deleted file mode 100644

index 922af1e..0000000

--- a/docs/onboarding/upload-data.md

+++ /dev/null

@@ -1,66 +0,0 @@

----

-sidebar_position: 4

----

-

-# Uploading Data

-

-Before you begin, identify the destination for your data. Most data are organized in pre-assigned folders based on assay and data levels. You can create subfolders for additional organization, especially for batch-specific data.

-

-#### Using Synapse User Interface (UI)

-

-The UI is suitable for smaller files less than 100MB. In the designated folder, access the Folder Tools menu for upload options. Refer to the general UI documentation for details.

-

-#### Programmatic Clients

-

-For larger and numerous files, use programmatic clients for efficient uploading. Options include the command-line tool, Python script, or R. Find detailed documentation for each option. Reach out to the DCC for assistance.

-

-#### Typical Workflow with Python Command-Line Client

-

-1. **Install Python Package**: Install the Synapse Python package from [PyPI](https://pypi.org/project/synapseclient/). This will also automatically install the command line utility. Test out that the command line utility is working by typing `synapse help` and feel free to review [docs](https://python-docs.synapse.org/build/html/index.html) for the Python CLI client.

-

-2. **Create Access Token**: For large uploads, it is best to create an access token. Go to your Account profile > Account Settings > Personal Access Tokens > Create new token.

-

-3. **Create Configuration File**: For convenience, copy and paste the token into a `.synapseConfig` text file:

-

- ```plaintext

- [authentication]

- authtoken = sometokenstringxxxxxxxxxxxxxxxxxx

- ```

-

-4. **Create Manifest File**: Create a list of files to transfer (called a manifest). The parent-id is the Synapse folder you are trying to upload files to:

-

- ```bash

- synapse manifest --parent-id syn12345678 --manifest-file manifest.txt PATH_TO_DIR_WITH_FILES

- ```

-

-5. **Certified User Check**: If you are not a Certified User, the tool will output a message. Review and complete the Certified User portion of Account Setup before proceeding.

-

-6. **Execute Sync Command**: Successful execution should create a manifest file `manifest.txt`. Ensure that `.synapseConfig` is present locally to provide authentication:

-

- ```bash

- synapse sync manifest.txt

- ```

-

- Options for retries in case of a poor connection:

-

- ```bash

- synapse sync --retries 3 manifest.txt

- ```

-

-#### One-off Uploads

-

-For just a few files, a more convenient command might be:

-

-```bash

-synapse store my_image.tiff --parentId syn12345678

-```

-

-#### Alternative Methods

-

-Under rare unique circumstances, the DCC can explore the following options:

-

-- Receiving data via physical hard drive

-- Utilizing Globus transfers (if really needed)

-- Transferring from a custom S3 bucket

-

-Feel free to adjust or customize it according to your needs!

\ No newline at end of file

diff --git a/docs/onboarding/upload-data.mdx b/docs/onboarding/upload-data.mdx

new file mode 100644

index 0000000..eb7ff1a

--- /dev/null

+++ b/docs/onboarding/upload-data.mdx

@@ -0,0 +1,191 @@

+---

+sidebar_position: 4

+---

+# Clinical metadata and file upload

+

+import Tabs from '@theme/Tabs';

+import TabItem from '@theme/TabItem';

+

+# Creating a Clinical Metadata Template for BRCA Pre-Cancer Research

+

+

+

+

+This guide walks through importing clinical metadata into the Gray Foundation portal: opening the Data Curator App, selecting your project, completing the patient cohort data template, validating the metadata, and submitting it.

+

+1\. Navigate to the [Data Curator App](https://dca.app.sagebionetworks.org/)

+

+

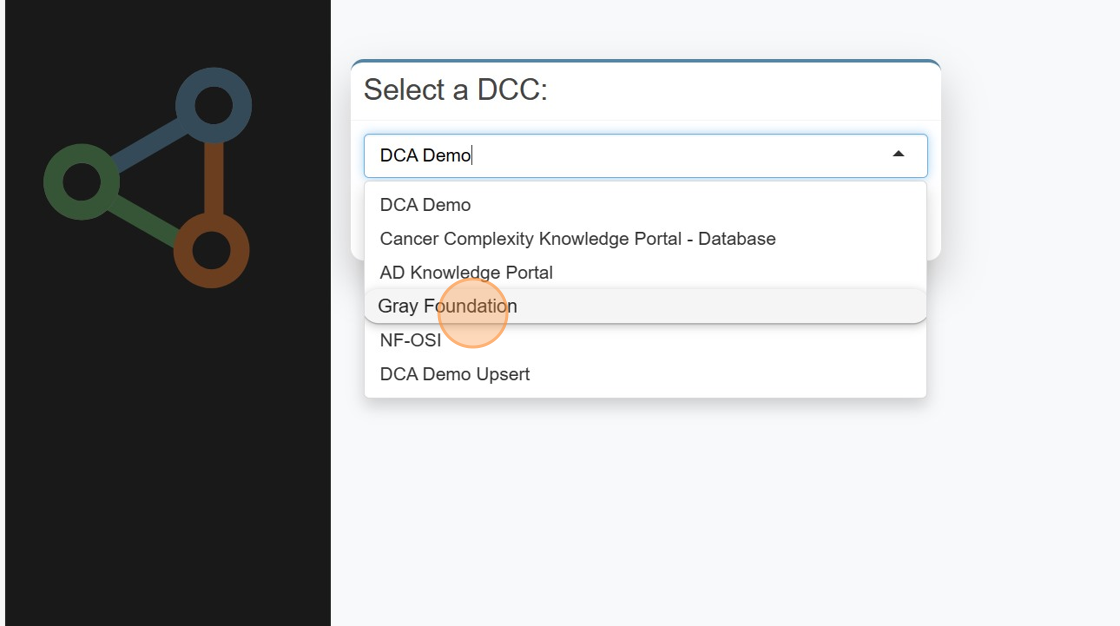

+2\. Select "Gray Foundation"

+

+

+

+

+3\. Select your project

+

+

+

+

+4\. Click the "Patient Cohort Data" folder

+

+

+

+

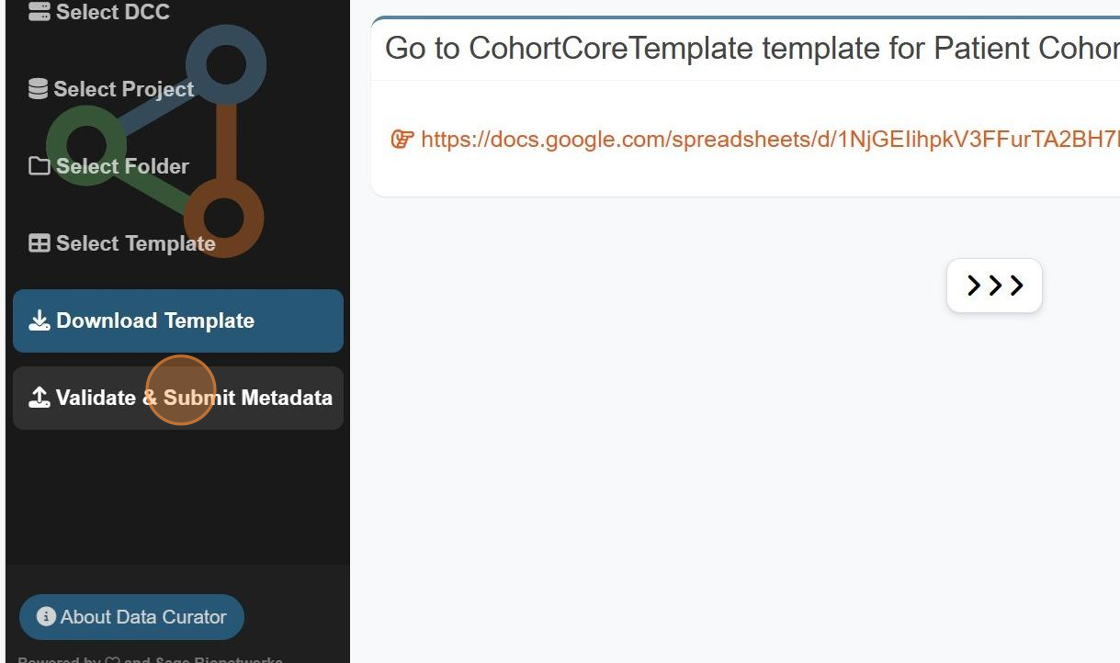

+5\. Select "Patient Cohort Data Template", then click "Download template"

+

+

+

+

+6\. Click the link to the Google Sheet

+

+

+

+

+7\. Complete the Google Sheet with your patient cohort data

+

+

+

+

+8\. Ensure all required columns are complete

+

+

+

+

+9\. Download as a .csv file

+

+

+

+

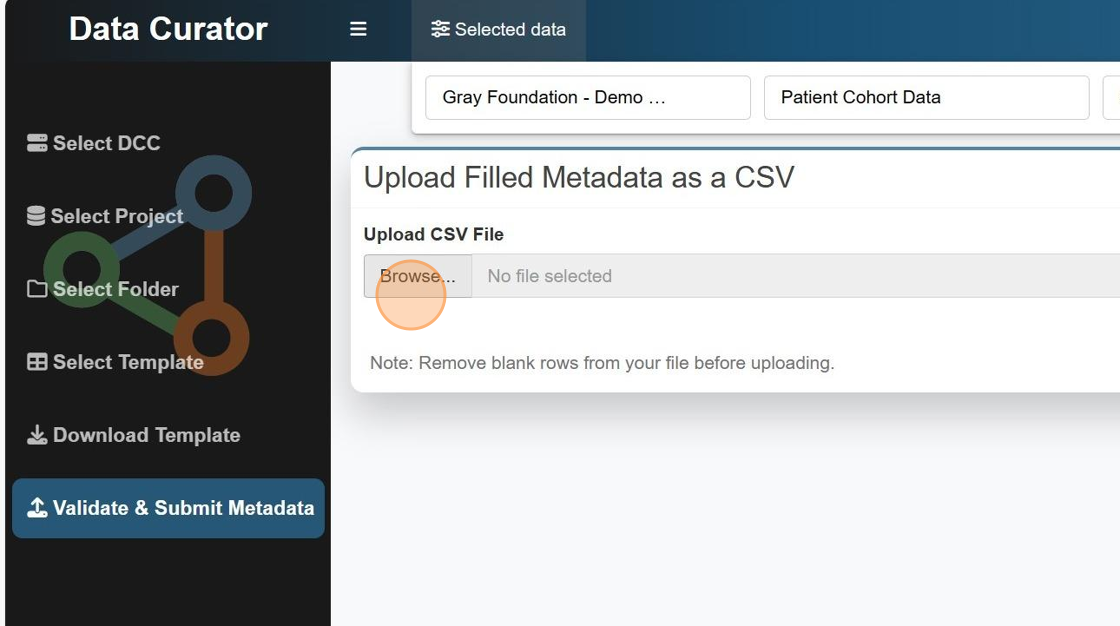

+10\. Click "Validate & Submit Metadata"

+

+

+

+

+11\. Click here

+

+

+

+

+12\. Click "Validate Metadata"

+

+

+#### [Made with Scribe](https://scribehow.com/shared/Importing_clinical_metadata_for_Gray_Foundation_portal___lDabdd0SsqJ8LAZYhYGNQ)
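Before uploading the exported .csv for validation, it can help to check locally that no required cell is empty. The following is a minimal sketch, not part of the Data Curator App: the function name `find_incomplete_rows` and the column names `ParticipantID` and `Diagnosis` are hypothetical placeholders, so substitute the required columns from your own template.

```python
import csv
import io


def find_incomplete_rows(csv_text, required_columns):
    """Return (row_number, column) pairs where a required value is missing."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing_columns = [c for c in required_columns if c not in header]
    if missing_columns:
        raise ValueError(f"missing required columns: {missing_columns}")
    problems = []
    # Row 1 is the header, so data rows start at 2 (spreadsheet numbering).
    for row_number, row in enumerate(reader, start=2):
        for column in required_columns:
            if not (row[column] or "").strip():
                problems.append((row_number, column))
    return problems


# Hypothetical column names and data, for illustration only.
REQUIRED = ["ParticipantID", "Diagnosis"]
sample = "ParticipantID,Diagnosis,Notes\nP001,BRCA1 carrier,\nP002,,follow-up\n"
print(find_incomplete_rows(sample, REQUIRED))
```

An empty list means every required cell is filled. The app's own validation remains the authoritative check.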

+

+

+

+Before you begin, identify the destination for your data. Most data are organized in pre-assigned folders based on assay and data levels. You can create subfolders for additional organization, especially for batch-specific data.

+

+## Data Organization

+

+Data is organized into folders by assay type and, within each assay, by processing level.

+Each top-level folder and all of its subfolders must contain data of the same type (see details below).

+

+The DCC will create empty, common top-level folders as well as subfolders for the expected levels of data.

+This depends on whether both raw and processed data are expected.

+Sometimes only raw data or only processed data is expected.

+If only one level of data is expected, everything is "collapsed" into only one folder and there are no subfolders.

+Subfolders must be of the same data type and level as the root folder in which they are contained.

+

+```plaintext

+└── single_cell_RNA_seq
+    ├── single_cell_RNA_seq_level1
+        ├── fileA.fastq
+        ├── fileB.fastq
+        ├── fileC.fastq
+        └── fileD.fastq
+    ├── single_cell_RNA_seq_level2
+        ├── fileA.bam
+        ├── fileB.bam
+        ├── fileC.bam
+        └── fileD.bam
+    ├── single_cell_RNA_seq_level3
+        ├── raw_counts.txt
+        ├── normalized_counts.txt
+    ├── single_cell_RNA_seq_level4
+        ├── t-SNE.txt

+```
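A local folder can be checked against this layout before upload. The sketch below assumes the naming pattern `<assay>_level<N>` inferred from the example tree; the DCC may use other names for your project, and `check_layout` is a hypothetical helper, not an existing tool.

```python
import re
import tempfile
from pathlib import Path

# Assumed convention: subfolders are named "<assay>_level<N>".
LEVEL_PATTERN = re.compile(r"^(?P<assay>.+)_level(?P<level>\d+)$")


def check_layout(assay_dir):
    """Return a list of problems found in the subfolders of one assay folder."""
    assay_dir = Path(assay_dir)
    problems = []
    for child in sorted(assay_dir.iterdir()):
        if not child.is_dir():
            # Files directly in the assay folder: the "collapsed" single-level case.
            continue
        match = LEVEL_PATTERN.match(child.name)
        if match is None or match.group("assay") != assay_dir.name:
            problems.append(f"unexpected subfolder name: {child.name}")
    return problems


# Small demonstration on a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp) / "single_cell_RNA_seq"
    (root / "single_cell_RNA_seq_level1").mkdir(parents=True)
    (root / "bulk_RNA_seq_level2").mkdir()
    print(check_layout(root))
```

The check only looks at folder names; it does not inspect file types inside each level.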

+

+By understanding the data generation process, the Data Coordination Center (DCC) can effectively collaborate with each team to address the following questions:

+

+- What are the different types of data that will be generated, and how can the data artifacts from this workflow be optimally handled and managed?

+- Are there any recommendations that can be provided to ensure a smooth workflow and avoid potential issues in the downstream analysis?

+- What additional resources can the DCC offer, if available?

+

+### Data processing by the data contributors

+

+Depending on their funded aims, project teams may have specialized data workflows, which can include:

+

+- Generating sequencing data and deriving data using multiple variant calling pipelines.

+- Producing high-resolution images and extracting summary features from images.

+- Combining different types of data.

+

+During the onboarding process, it is essential to discuss the anticipated workflow, especially if it is complex or deviates from the standard. Project teams should provide information or documentation regarding their workflow.

+

+### Data processing by Sage

+

+In addition to the project team's data processing, the DCC also performs data processing on the uploaded data in Synapse. This processing includes:

+

+- Quality control assessments.

+- File format conversions.

+- Other necessary data transformations to facilitate data loading and sharing in cBioPortal or other analysis applications.

+

+## Synapse User Interface (UI)

+

+The UI is suitable for files smaller than 100 MB. In the designated folder, open the Folder Tools menu for upload options. Refer to the general UI documentation for details.

+

+## Programmatic clients

+

+For large files or large numbers of files, use a programmatic client for efficient uploading. Options include the command-line tool, Python, and R; detailed documentation is available for each. Reach out to the DCC if you need assistance.

+

+#### Typical Workflow with Python Command-Line Client

+

+1. **Install Python Package**: Install the Synapse Python package from [PyPI](https://pypi.org/project/synapseclient/). This also installs the command-line utility. Verify that the utility works by running `synapse help`, and review the [docs](https://python-docs.synapse.org/build/html/index.html) for the Python CLI client as needed.

+

+2. **Create Access Token**: For large uploads, it is best to create an access token. Go to your Account profile > Account Settings > Personal Access Tokens > Create new token.

+

+3. **Create Configuration File**: For convenience, copy and paste the token into a `.synapseConfig` text file:

+

+ ```plaintext

+ [authentication]

+ authtoken = sometokenstringxxxxxxxxxxxxxxxxxx

+ ```

+

+4. **Create Manifest File**: Create a list of files to transfer (called a manifest). The parent-id is the Synapse folder you are trying to upload files to:

+

+ ```bash

+ synapse manifest --parent-id syn12345678 --manifest-file manifest.txt PATH_TO_DIR_WITH_FILES

+ ```

+

+5. **Certified User Check**: If you are not a Certified User, the tool will output a message. Review and complete the Certified User portion of Account Setup before proceeding.

+

+6. **Execute Sync Command**: Successful execution of the manifest command in step 4 should have created a manifest file, `manifest.txt`. Ensure that `.synapseConfig` is present locally to provide authentication, then run:

+

+ ```bash

+ synapse sync manifest.txt

+ ```

+

+ To retry automatically over a poor connection:

+

+ ```bash

+ synapse sync --retries 3 manifest.txt

+ ```
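For teams that prefer to build the manifest themselves, the steps above can be approximated in plain Python. This is a simplified sketch under stated assumptions: it assumes the manifest is a tab-separated file with `path` and `parent` columns (confirm against the synapseclient documentation), it sends every file to a single parent folder rather than mirroring subfolders, and `write_manifest` is a hypothetical helper.

```python
import csv
from pathlib import Path


def write_manifest(data_dir, parent_id, manifest_path):
    """Write a tab-separated manifest listing every file under data_dir.

    Returns the number of files listed.
    """
    # Collect files first so the manifest itself is never listed.
    files = sorted(p for p in Path(data_dir).rglob("*") if p.is_file())
    with open(manifest_path, "w", newline="") as handle:
        writer = csv.writer(handle, delimiter="\t")
        writer.writerow(["path", "parent"])  # assumed required columns
        for file_path in files:
            writer.writerow([str(file_path.resolve()), parent_id])
    return len(files)
```

A call such as `write_manifest("PATH_TO_DIR_WITH_FILES", "syn12345678", "manifest.txt")` would produce a file that can be inspected and edited by hand before running the sync command.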

+

+#### One-off Uploads

+

+For just a few files, a more convenient command might be:

+

+```bash

+synapse store my_image.tiff --parentId syn12345678

+```

+

+#### Alternative Methods

+

+In rare circumstances, the DCC can explore the following options:

+

+- Receiving data via physical hard drive

+- Using Globus transfers (only if strictly necessary)

+- Transferring from a custom S3 bucket

+


+

+

From a7cd52292f430c311eaf6b9c03c13569da84658e Mon Sep 17 00:00:00 2001

From: cconrad8

Date: Thu, 4 Apr 2024 12:06:57 -0400

Subject: [PATCH 2/2] update scribe

---

docs/onboarding/upload-data.mdx | 19 +++++++++----------

1 file changed, 9 insertions(+), 10 deletions(-)

diff --git a/docs/onboarding/upload-data.mdx b/docs/onboarding/upload-data.mdx

index eb7ff1a..6c50a84 100644

--- a/docs/onboarding/upload-data.mdx

+++ b/docs/onboarding/upload-data.mdx

@@ -1,6 +1,7 @@

---

sidebar_position: 4

---

+

# Clinical metadata and file upload

import Tabs from '@theme/Tabs';

@@ -75,16 +76,16 @@ This guide provides step-by-step instructions on how to import clinical metadata

Before you begin, identify the destination for your data. Most data are organized in pre-assigned folders based on assay and data levels. You can create subfolders for additional organization, especially for batch-specific data.

-## Data Organization

+#### Data Organization

-Data is organized by its assay type and, logically, its processed type in folders.

+Data is organized into folders by assay type and, within each assay, by processing level.

Each top-level folder and all of its subfolders must contain data of the same type (see details below).

-The DCC will create empty, common top-level folders as well as subfolders for the expected levels of data.

-This depends on whether both raw or processed data are expected.

-Sometimes only raw data or only processed data is expected.

+The DCC will create empty, common top-level folders as well as subfolders for the expected levels of data.

+This depends on whether both raw and processed data are expected.

+Sometimes only raw data or only processed data is expected.

If only one level of data is expected, everything is "collapsed" into only one folder and there are no subfolders.

-Subfolders must be of the same data type and level as the root folder they are contained.

+Subfolders must be of the same data type and level as the root folder in which they are contained.

```plaintext

└── single_cell_RNA_seq

@@ -111,7 +112,6 @@ By understanding the data generation process, the Data Coordination Center (DCC)

- Are there any recommendations that can be provided to ensure a smooth workflow and avoid potential issues in the downstream analysis?

- What additional resources can the DCC offer, if available?

-### Data processing by the data contributors

Depending on their funded aims, project teams may have specialized data workflows, which can include:

@@ -121,8 +121,6 @@ Depending on your funded aims, project teams may have specialized data workflows

During the onboarding process, it is essential to discuss the anticipated workflow, especially if it is complex or deviates from the standard. Project teams should provide information or documentation regarding their workflow.

-### Data processing by Sage

-

In addition to the project team's data processing, the DCC also performs data processing on the uploaded data in Synapse. This processing includes:

- Quality control assessments.

@@ -187,5 +185,6 @@ Under rare unique circumstances, the DCC can explore the following options:

- Transferring from a custom S3 bucket


-

+

+